2015-02-03 // IBM Storwize V3700 out of Memory

Under certain conditions it is possible to inadvertently run into an out of memory situation on IBM Storwize V3700 systems, by simply running a Download Support Package procedure or the respective CLI command. This will – of course – bring all I/O on the affected system to a grinding halt.

A few days ago, the Nagios monitoring plugin introduced in “Nagios Monitoring - IBM SVC and Storwize” reported a failed PSU on one of our IBM Storwize V3700 systems. After raising a PMR with IBM in order to get the seemingly defective PSU replaced, I was told to simply reseat the PSU. According to IBM support this would usually fix this – apparently known – issue.

This was the first WTF moment and it turned out not to be the last one. Either IBM produces and sells subpar components – in this case the PSU – which need to be rebooted (yes, PSUs nowadays have their own firmware too) before they can be persuaded to cooperate again. Or IBM produces and sells subpar software which is not able to properly detect a component failure and distinguish between a faulty and a good PSU. Or perhaps it's an unfortunate combination of both.

In any case, the procedure to reseat the PSU was carried out, which fixed the PSU issue. During the fix procedure, though, the system became unreachable via TCP/IP for a rather long time. Definitely over two minutes, but I didn't have a chance to take an exact measurement. After the system was reachable again I followed up on this strange behaviour and had a look at the system's event log. There were quite a lot of “Error Code: 1370, Error Code Text: SCSI ERP occurred” messages, so I decided to bother IBM support again and send them a support collection in order to get an analysis of the reachability issue as well as the 1370 errors.

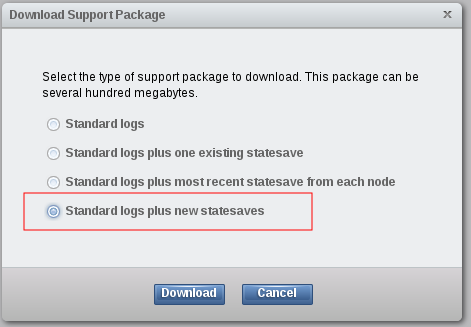

From previous occasions I knew that IBM support would most likely request a support collection which was run with the “svc_livedump” CLI command or with the “Standard logs plus new statesaves” option from the WebUI. The latter one is marked red in the following screenshot example:

So I decided to pull a support collection with this option. Some time into the support collection process, the SVC sitting in front of the V3700 and other storage systems started to show very high latencies (~60 sec.) on the primary VDisks backed by the V3700. On other VDisks which “only” had their secondary VDisk mirror located on MDiskGroups of the V3700, the latency peak was less dramatic, but still very noticeable. Eventually degraded paths to the MDisks located on the V3700 started showing up on the SVC. After the support collection process finished, the situation went back to normal. The latency on both primary and secondary VDisks instantly dropped to the usual values, and after running the fix procedures on the SVC the degraded paths came back online.

The performance issues were severe enough in magnitude and duration to affect several applications pretty badly. Although the immediate issue was resolved, I still needed an analysis and a written statement from IBM support, both for an action plan on how to prevent this kind of situation in the future and for compliance reasons. Here is the digest of what – according to IBM – happened:

During the procedure to reseat the reportedly defective PSU, one or more power surges occurred.

These power surges apparently caused issues on the internal disk buses, which led to the 1370 errors being logged.

The power surges or the resulting 1370 errors are probably also the cause of a failover of the config node, hence the connectivity issues with the CLI and the WebUI via TCP/IP.

More 1370 errors were logged during the runtime of the subsequent “Standard logs plus new statesaves” support collection process.

The issue of very high latency and MDisk paths becoming degraded was caused by the “Standard logs plus new statesaves” support collection process using up all of the memory – yes, including the data cache – on the V3700 system. This behaviour is specific and limited to the V3700 systems with “only” 4GB of memory.

As one can imagine, the last item was my second WTF moment. Apparently there are no programmatic safeguards to prevent the support collection process at the “Standard logs plus new statesaves” level from using up all of the system's memory. This would normally not be that bad, if “Standard logs plus new statesaves” weren't the particular level which IBM support usually requests on support cases concerning SVC and Storwize systems. On the phone the IBM support technician admitted that this common practice is in general probably a bad idea. But he also mentioned that up to now he hadn't heard of the known side effects actually occurring, like in this case.

The suggestion on how to prevent this kind of situation in the future was to either upgrade the V3700 systems from 4GB to 8GB memory – a solution I would gladly take provided it came free of charge – or to run the support collection process only with the “svc_snap” CLI command or the “Standard logs” option from the WebUI. Since the memory upgrade for free isn't likely to happen, I'll stick with the second suggestion for now.
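For anyone who scripts support collections across several systems, the second suggestion can be written down as a small guard. The following Python sketch is only an illustration under assumptions: the `StorageSystem` record, the inventory values and the function name are hypothetical, and the code does not talk to a Storwize system at all. It merely encodes the rule that a 4GB V3500 or V3700 should not be asked for the statesave level.

```python
from dataclasses import dataclass

# Level names as they appear in the WebUI according to this post.
SAFE_LEVEL = "Standard logs"                        # CLI counterpart per the post: svc_snap
HEAVY_LEVEL = "Standard logs plus new statesaves"   # CLI counterpart per the post: svc_livedump


@dataclass(frozen=True)
class StorageSystem:
    """Hypothetical inventory record, not an IBM data structure."""
    name: str
    model: str       # e.g. "V3700"
    memory_gb: int   # memory size as discussed in the post (4 or 8)


def allowed_collection_level(system: StorageSystem, requested: str) -> str:
    """Return the support collection level that should actually be used."""
    if requested not in (SAFE_LEVEL, HEAVY_LEVEL):
        raise ValueError(f"unknown collection level: {requested!r}")
    # Assumption: only the small 4GB models are affected, as stated by IBM support.
    low_memory = system.model in ("V3500", "V3700") and system.memory_gb <= 4
    if requested == HEAVY_LEVEL and low_memory:
        return SAFE_LEVEL  # downgrade instead of risking the data cache
    return requested


small = StorageSystem(name="v3700-a", model="V3700", memory_gb=4)
large = StorageSystem(name="v3700-b", model="V3700", memory_gb=8)
print(allowed_collection_level(small, HEAVY_LEVEL))
print(allowed_collection_level(large, HEAVY_LEVEL))
```

Downgrading silently is a design choice for this sketch. In practice it is probably better to also log the downgrade, so that IBM support can be told why no statesaves are included.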

Incidentally, a third option came up over the last weekend. Looking at the IBM System Storage SAN Volume Controller V7.3.0.9 Release Note, it could be construed that someone at IBM SVC and Storwize development came to the realization that the issue could also be addressed in software, by altering the resource utilization of the support collection process:

HU00636 Livedump prepare fails on V3500 & V3700 systems with 4GB memory when cache partition fullness is less than 35%

Fingers crossed, this fix really addresses and resolves the issue described above.