2017-10-23 // Experiences with Dell PowerConnect Switches

This blog post is about my recent experiences with Broadcom FASTPATH based Dell PowerConnect M-Series M8024-k and M6348 switches, especially their various limitations and – in my opinion – sometimes buggy behaviour.

Recently I was given the opportunity to build a new, central storage and virtualization environment from the ground up. This involved a set of hardware systems which – unfortunately – had been chosen and purchased before I came on board with the project.

System environment

Specifically those hardware components were:

Multiple Dell PowerEdge M1000e blade chassis

Multiple Dell PowerEdge M-Series blade servers, all equipped with Intel X520 network interfaces for LAN connectivity through fabric A of the blade chassis. Servers with additional central storage requirements were also equipped with QLogic QME/QMD8262 or QLogic/Broadcom BCM57810S iSCSI HBAs for SAN connectivity through fabric B of the blade chassis.

Multiple Dell PowerConnect M8024-k switches in fabric A of the blade chassis forming the LAN network. Those were configured and interconnected as a stack of switches. Each stack of switches had two uplinks, one to each of two carrier grade Cisco border routers. Since the network edge was between those two border routers on the one side and the stack of M8024-k switches on the other side, the switch stack was also used as a layer 3 device and was thus running the default gateways of the local network segments provided to the blade servers.

Multiple Dell PowerConnect M6348 switches, which were connected through aggregated links to the stack of M8024-k switches described above. These switches were exclusively used to provide a LAN connection for external, standalone servers and devices through their external 1 GBit ethernet interfaces. The M6348 switches were located in the slots belonging to fabric C of the blade chassis.

Multiple Dell PowerConnect M8024-k switches in fabric B of the blade chassis forming the SAN network. In contrast to the M8024-k LAN switches, the M8024-k SAN switches were configured and interconnected as individual switches. Since there was no need for outside SAN connectivity, the M8024-k switches in fabric B ran a flat layer 2 network without any layer 3 configuration.

Initially all PowerConnect switches – M8024-k both LAN and SAN and M6348 – ran the firmware version 5.1.8.2.

Multiple Dell EqualLogic PS Series storage systems, providing central block storage capacity for the PowerEdge M-Series blade servers via iSCSI over the SAN mentioned above. Some blade chassis based PS Series models (PS-M4110) were internally connected to the SAN formed by the M8024-k switches in fabric B. Other standalone PS Series models were connected to the same SAN utilizing the external ports of the M8024-k switches.

Multiple Dell EqualLogic FS Series file server appliances, providing central NFS and CIFS storage capacity over the LAN mentioned above. In the back-end those FS Series file server appliances also used the block storage capacity provided by the PS Series storage systems via iSCSI over the SAN mentioned above. Both LAN and SAN connections of the EqualLogic FS Series were made through the external ports of the M8024-k switches.

There were multiple locations with roughly the same setup composed of the hardware components described above. Each location had two daisy-chained Dell PowerEdge M1000e blade chassis systems. The layer 2 LAN and SAN networks stretched over the two blade chassis. The setup at each location is shown in the following schematic:

All in all not an ideal setup. Instead, I would have preferred a pair of capable – both functionality and performance-wise – central top-of-rack switches to which the individual M1000e blade chassis would have been connected, preferably a separate pair each for LAN and SAN connectivity. But again, the mentioned components were already preselected and pre-purchased.

During the implementation and later the operational phase, several limitations and issues surfaced with regard to the Dell PowerConnect switches and the networks built with them. The following – probably not exhaustive – list of limitations and issues I've encountered is in no particular order with regard to their occurrence or severity.

Limitations

While the Dell PowerConnect switches support VRRP as a redundancy protocol for layer 3 instances, there is only support for VRRP version 2, described in RFC 3768. This limits the use of VRRP to IPv4 only. VRRP version 3 described in RFC 5798, which is needed for the implementation of redundant layer 3 instances for both IPv4 and IPv6, is not supported by Dell PowerConnect switches. Due to this limitation and the need for full IPv6 support in the whole environment, the design decision was made to run the Dell PowerConnect M8024-k switches for the LAN as a stack of switches.

Limited support of routing protocols. There is only support for the routing protocols OSPF and RIP v2 in Dell PowerConnect switches. In this specific setup and triggered by the design decision to run the LAN switches as layer 3 devices, BGP would have been a more suitable routing protocol. Unfortunately there were no plans to implement BGP on the Dell PowerConnect devices.

Limitation in the number of secondary interface addresses. Only one IPv4 secondary address is supported per interface on a layer 3 instance running on the Dell PowerConnect switches. In contrast to e.g. Cisco based layer 3 capable switches, this limitation caused, in this particular setup, the need for a lot more (VLAN) interfaces than would otherwise have been necessary.

No IPv6 secondary interface addresses. For IPv6 based layer 3 instances there is no support at all for secondary interface addresses. Although this might be a fundamental rather than product specific limitation.

For layer 3 instances in general there is no support for very small IPv4 subnets (e.g. /31 with 2 IPv4 addresses) which are usually used for transfer networks. In setups using private IPv4 address ranges this is no big issue. In this case though, official IPv4 addresses were used and in conjunction with the excessive need for VLAN interfaces this limitation caused a lot of wasted official IPv4 addresses.
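To put a number on the wasted addresses, here is a minimal sketch using Python's standard `ipaddress` module. It assumes that a /30 is the fallback for each transfer network where a /31 would have sufficed; the prefix `192.0.2.0` is a documentation range and the link count is a made-up figure, not taken from the actual setup.

```python
import ipaddress


def addresses_consumed(prefix_len: int, links: int) -> int:
    """Total IPv4 addresses consumed by `links` transfer networks of one size."""
    return links * 2 ** (32 - prefix_len)


# Documentation prefix, used purely for illustration.
slash31 = ipaddress.ip_network("192.0.2.0/31")
slash30 = ipaddress.ip_network("192.0.2.0/30")

# A /31 holds exactly the two endpoints of a point-to-point link,
# a /30 additionally spends a network and a broadcast address.
print(slash31.num_addresses, slash30.num_addresses)

links = 20  # hypothetical number of transfer networks
wasted = addresses_consumed(30, links) - addresses_consumed(31, links)
print("addresses wasted across", links, "links:", wasted)
```

Every transfer network that has to be a /30 instead of a /31 costs two additional official addresses, and that cost multiplies with the number of VLAN interfaces.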

The access control list (ACL) feature is very limited and rather rudimentary in Dell PowerConnect switches. There is no support for port ranges, no statefulness and each access list has a hard limit of 256 access list entries. All three – and possibly even more – limitations in combination make the ACL feature of Dell PowerConnect switches almost useless, especially if there are separate layer 3 networks on the system which are in need of fine-grained traffic control.
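How quickly the missing port ranges collide with the 256 entry limit can be sketched as follows. This is an illustration only: it assumes that the workaround for a port range is one ACL entry per port, and all addresses and ports are invented examples.

```python
MAX_ACL_ENTRIES = 256  # hard limit per access list, as described above


def expand_rule(src, dst, proto, first_port, last_port):
    """Expand one logical rule with a port range into one entry per port."""
    return [(src, dst, proto, port) for port in range(first_port, last_port + 1)]


# Hypothetical rule set: two single ports and three ranges of 101 ports each.
rules = [
    ("198.51.100.0/24", "192.0.2.10", "tcp", 80, 80),
    ("198.51.100.0/24", "192.0.2.10", "tcp", 443, 443),
    ("198.51.100.0/24", "192.0.2.20", "tcp", 5000, 5100),
    ("198.51.100.0/24", "192.0.2.20", "tcp", 8000, 8100),
    ("198.51.100.0/24", "192.0.2.30", "udp", 33434, 33534),
]

entries = [entry for rule in rules for entry in expand_rule(*rule)]
fits = len(entries) <= MAX_ACL_ENTRIES
print(len(rules), "logical rules ->", len(entries), "entries, fits:", fits)
```

Five logical rules already exceed what a single access list can hold once each range is written out port by port.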

Regarding the performance of ACLs, I have gotten the impression that IPv6 ACLs in particular are handled by the switch's CPU. If IPv6 is used in conjunction with extensive ACLs, this would dramatically impact the network performance of IPv6-based traffic. Admittedly I have no hard proof to support this suspicion.

The out-of-band (OOB) management interface of the Dell PowerConnect switches does not provide true out-of-band management. Instead it is integrated into the switch as just another IP interface – although one with a special purpose. Due to this interaction of the OOB with the IP stack of the Dell PowerConnect switch there are side-effects when the switch is running at least one layer 3 instance. In this case, the standard IP routing table of the switch is not only used for routing decisions of the payload traffic, but is also used to determine the destination of packets originating from the OOB interface. This behaviour can cause an asymmetric traffic flow when the systems connecting to the OOB are covered by an entry in the switch's IP routing table. Far from ideal when it comes to true OOB management, not to mention the issues arising when there are also stateful firewall rules involved.

I addressed this limitation with a support case at Dell and got the following statement back:

    FASTPATH can learn a default gateway for the service port, the network port,
    or a routing interface. The IP stack can only have a single default gateway.
    (The stack may accept multiple default routes, but if we let that happen we may
    end up with load balancing across the network and service port or some other
    combination we don't want.) RTO may report an ECMP default route. We only give
    the IP stack a single next hop in this case, since it's not likely we need to
    additional capacity provided by load sharing for packets originating on the
    box.

    The precedence of default gateways is as follows:
    - via routing interface
    - via service port
    - via network port

    As per the above precedence, ip stack is having the default gateway which is
    configured through RTO. When the customer is trying to ping the OOB from
    different subnet , route table donesn't have the exact route so,it prefers the
    default route and it is having the RTO default gateway as next hop ip. Due to
    this, it egresses from the data port.

    If we don't have the default route which is configured through RTO then IP
    stack is having the OOB default gateway as next hop ip. So, it egresses from
    the OOB IP only.

In my opinion this just confirms how the OOB management of the Dell PowerConnect switches is severely broken by design.
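The precedence described in the support statement can be modelled in a few lines. The following is a simplified sketch, not the FASTPATH implementation: it ignores more specific routes in the routing table, the function names are my own, and all addresses are documentation examples.

```python
import ipaddress

# Precedence as quoted in the support statement, highest first.
PRECEDENCE = ("routing interface", "service port", "network port")


def select_default_gateway(learned):
    """Pick the single default gateway that is handed to the IP stack.

    `learned` maps the source of a default gateway to its next hop address.
    """
    for source in PRECEDENCE:
        if source in learned:
            return source, learned[source]
    return None


def reply_egress(client_ip, oob_network, learned):
    """Return where a reply from the OOB address to `client_ip` leaves the box."""
    if ipaddress.ip_address(client_ip) in ipaddress.ip_network(oob_network):
        return "service port"  # directly connected, no gateway involved
    chosen = select_default_gateway(learned)
    return chosen[0] if chosen else None


oob_net = "192.0.2.0/24"   # hypothetical OOB management subnet
admin = "198.51.100.5"     # hypothetical admin host in a different subnet

layer3 = {"service port": "192.0.2.1", "routing interface": "203.0.113.1"}
layer2_only = {"service port": "192.0.2.1"}

print(reply_egress(admin, oob_net, layer3))       # leaves via a data port
print(reply_egress(admin, oob_net, layer2_only))  # stays on the OOB
```

As soon as a routing interface contributes a default gateway, replies to a management station outside the OOB subnet leave through the data plane, which is exactly the asymmetric flow described above.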

Another issue with the out-of-band (OOB) management interface of the Dell PowerConnect switches is that they support only a very limited access control list (ACL) in order to protect the access to the switch. The management ACL only supports one IPv4 ACL entry. IPv6 support within the management ACL protecting the OOB interface is missing altogether.

The Dell PowerConnect switches have no support for Shortest Path Bridging (SPB) as defined in the IEEE 802.1aq standard. On layer 2 the traditional spanning-tree protocols STP (IEEE 802.1D), RSTP (IEEE 802.1w) or MSTP (IEEE 802.1s) have to be used. This is a particular drawback in the SAN network shown in the schematic above, because the protocol forces one inter-switch link to stay inactive. With the use of SPB, all inter-switch links could be equally utilized and a traffic interruption upon link failure and spanning-tree (re)convergence could be avoided.

Another SAN-specific limitation is the incomplete implementation of Data Center Bridging (DCB) in the Dell PowerConnect switches. Although the protocols Priority-based Flow Control (PFC) according to IEEE 802.1Qbb and Congestion Notification (CN) according to IEEE 802.1Qau are supported, the third needed protocol, Enhanced Transmission Selection (ETS) according to IEEE 802.1Qaz, is missing in Dell PowerConnect switches. The Dell EqualLogic PS Series storage systems used in the setup shown above explicitly need ETS if DCB is to be used on layer 2. Since ETS is not implemented in Dell PowerConnect switches, the traditional layer 2 protocols had to be used in the SAN.

Issues

Not per se an issue, but the baseline CPU utilization on Dell PowerConnect M8024-k switches running layer 3 instances is significantly higher compared to those running only as layer 2 devices. The following CPU utilization graphs show a direct comparison of a layer 3 (upper graph) and a layer 2 (lower graph) device:

The CPU utilization is between 10 and 15% higher once the tasks of processing layer 3 traffic are involved. What kind of switch function or what type of traffic is causing this additional CPU utilization is completely opaque. Documentation on such in-depth subjects or details on how the processing within the Dell PowerConnect switches works is very scarce. It would be very interesting to know what kind of traffic is sent to the switch's CPU for processing instead of being handled by the hardware.

The very high CPU utilization plateau on the right hand side of the upper graph (approximately between 10:50 and 11:05) was due to a bug in the processing of IPv6 traffic on Dell PowerConnect switches. This issue caused IPv6 packets to be sent to the switch's CPU for processing instead of the forwarding decision being made in hardware. I narrowed down the issue by transferring a large file between two hosts via the SCP protocol. In the first case, determined by the preferred name resolution via DNS, an IPv6 connection was used:

    user@host1:~$ scp testfile.dmp user@host2:/var/tmp/
    testfile.dmp                 8%  301MB 746.0KB/s 1:16:05 ETA

The CPU utilization on the switch stack during the transfer was monitored on the switch's CLI:

    stack1(config)# show process cpu
    Memory Utilization Report
    status      bytes
    ------ ----------
    free    170642152
    alloc   298144904
    CPU Utilization:
    PID      Name                  5 Secs  60 Secs  300 Secs
    -----------------------------------------------------------------
    41be030  tNet0                 27.05%   30.44%    21.13%
    41cbae0  tXbdService            2.60%    0.40%     0.09%
    43d38d0  ipnetd                 0.40%    0.11%     0.11%
    43ee580  tIomEvtMon             0.40%    0.09%     0.22%
    43f7d98  osapiTimer             2.00%    3.56%     3.13%
    4608b68  bcmL2X.0               0.00%    0.08%     1.16%
    462f3a8  bcmCNTR.0              1.00%    0.87%     1.04%
    4682d40  bcmTX                  4.20%    5.12%     3.83%
    4d403a0  bcmRX                  9.21%   12.64%    10.35%
    4d60558  bcmNHOP                0.80%    0.21%     0.11%
    4d72e10  bcmATP-TX              0.80%    0.24%     0.32%
    4d7c310  bcmATP-RX              0.20%    0.12%     0.14%
    53321e0  MAC Send Task          0.20%    0.19%     0.40%
    533b6e0  MAC Age Task           0.00%    0.05%     0.09%
    5d59520  bcmLINK.0              5.41%    2.75%     2.15%
    84add18  tL7Timer0              0.00%    0.22%     0.23%
    84ca140  osapiWdTask            0.00%    0.05%     0.05%
    84d3640  osapiMonTask           0.00%    0.00%     0.01%
    84d8b40  serialInput            0.00%    0.00%     0.01%
    95e8a70  servPortMonTask        0.40%    0.09%     0.12%
    975a370  portMonTask            0.00%    0.06%     0.09%
    9783040  simPts_task            0.80%    0.73%     1.40%
    9b70100  dtlTask                5.81%    7.52%     5.62%
    9dc3da8  emWeb                  0.40%    0.12%     0.09%
    a1c9400  hapiRxTask             4.00%    8.84%     6.46%
    a65ba38  hapiL3AsyncTask        1.60%    0.45%     0.37%
    abcd0c0  DHCP snoop             0.00%    0.00%     0.20%
    ac689d0  Dynamic ARP Inspect    0.40%    0.10%     0.05%
    ac7a6c0  SNMPTask               0.40%    0.19%     0.95%
    b8fa268  dot1s_timer_task       1.00%    0.78%     2.74%
    b9134c8  dot1s_task             0.20%    0.07%     0.04%
    bdb63e8  dot1xTimerTask         0.00%    0.03%     0.02%
    c520db8  radius_task            0.00%    0.02%     0.05%
    c52a0b0  radius_rx_task         0.00%    0.03%     0.03%
    c58a2e0  tacacs_rx_task         0.20%    0.06%     0.15%
    c59ce70  unitMgrTask            0.40%    0.10%     0.20%
    c5c7410  umWorkerTask           1.80%    0.27%     0.13%
    c77ef60  snoopTask              0.60%    0.25%     0.16%
    c8025a0  dot3ad_timer_task      1.00%    0.24%     0.61%
    ca2ab58  dot3ad_core_lac_tas    0.00%    0.02%     0.00%
    d1860b0  dhcpsPingTask          0.20%    0.13%     0.39%
    d18faa0  SNTP                   0.00%    0.02%     0.01%
    d4dc3b0  sFlowTask              0.00%    0.00%     0.03%
    d6a4448  spmTask                0.00%    0.13%     0.14%
    d6b79c8  fftpTask               0.40%    0.06%     0.01%
    d6dcdf0  tCkptSvc               0.00%    0.00%     0.01%
    d7babe8  ipMapForwardingTask    0.40%    0.18%     0.29%
    dba91b8  tArpCallback           0.00%    0.04%     0.04%
    defb340  ARP Timer              2.60%    0.92%     1.29%
    e1332f0  tRtrDiscProcessingT    0.00%    0.00%     0.11%
    12cabe30 ip6MapLocalDataTask    0.00%    0.03%     0.01%
    12cb5290 ip6MapExceptionData   11.42%   12.95%     9.41%
    12e1a0d8 lldpTask               0.60%    0.17%     0.30%
    12f8cd10 dnsTask                0.00%    0.00%     0.01%
    140b4e18 dnsRxTask              0.00%    0.03%     0.03%
    14176898 DHCPv4 Client Task     0.00%    0.01%     0.02%
    1418a3f8 isdpTask               0.00%    0.00%     0.10%
    14416738 RMONTask               0.00%    0.20%     0.42%
    144287f8 boxs Req               0.20%    0.09%     0.21%
    15c90a18 sshd                   0.40%    0.07%     0.07%
    15cde0e0 sshd[0]                0.20%    0.05%     0.02%
    -----------------------------------------------------------------
    Total CPU Utilization          89.77%   92.50%    77.29%

In the second case an IPv4 connection was deliberately chosen:

    user@host1:~$ scp testfile.dmp user@10.0.0.1:/var/tmp/
    testfile.dmp               100% 3627MB  31.8MB/s   01:54
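For a rough sense of scale, the two transfer rates reported by scp can be put side by side. The small calculation below assumes that the progress meter uses binary multiples (1 MB = 1024 KB), which is consistent with the ETA shown in the IPv6 case.

```python
KB_PER_MB = 1024  # assumption: scp reports binary multiples

ipv6_rate_kb = 746.0   # KB/s, from the IPv6 transfer
ipv4_rate_mb = 31.8    # MB/s, from the IPv4 transfer
file_size_mb = 3627    # size of testfile.dmp as reported by scp

speedup = ipv4_rate_mb * KB_PER_MB / ipv6_rate_kb
ipv6_total_seconds = file_size_mb * KB_PER_MB / ipv6_rate_kb

print("IPv4 was about %.0f times faster than IPv6" % speedup)
print("IPv6 would have needed about %.0f minutes for the whole file"
      % (ipv6_total_seconds / 60))
```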

Not only was the transfer rate of the SCP copy process significantly higher – and the transfer time consequently much lower – in the second case using an IPv4 connection, the CPU utilization on the switch stack during the transfer was also much lower:

    stack1(config)# show process cpu
    Memory Utilization Report
    status      bytes
    ------ ----------
    free    170642384
    alloc   298144672
    CPU Utilization:
    PID      Name                  5 Secs  60 Secs  300 Secs
    -----------------------------------------------------------------
    41be030  tNet0                  0.80%   23.49%    21.10%
    41cbae0  tXbdService            0.00%    0.17%     0.08%
    43d38d0  ipnetd                 0.20%    0.14%     0.12%
    43ee580  tIomEvtMon             0.60%    0.26%     0.24%
    43f7d98  osapiTimer             2.20%    3.10%     3.08%
    4608b68  bcmL2X.0               4.20%    1.10%     1.22%
    462f3a8  bcmCNTR.0              0.80%    0.80%     0.99%
    4682d40  bcmTX                  0.20%    3.35%     3.59%
    4d403a0  bcmRX                  4.80%    9.90%    10.06%
    4d60558  bcmNHOP                0.00%    0.11%     0.10%
    4d72e10  bcmATP-TX              1.00%    0.30%     0.32%
    4d7c310  bcmATP-RX              0.00%    0.14%     0.15%
    53321e0  MAC Send Task          0.80%    0.39%     0.42%
    533b6e0  MAC Age Task           0.00%    0.12%     0.10%
    5d59520  bcmLINK.0              1.80%    2.38%     2.14%
    84add18  tL7Timer0              0.00%    0.11%     0.20%
    84ca140  osapiWdTask            0.00%    0.05%     0.05%
    84d3640  osapiMonTask           0.00%    0.00%     0.01%
    84d8b40  serialInput            0.00%    0.00%     0.01%
    95e8a70  servPortMonTask        0.20%    0.09%     0.11%
    975a370  portMonTask            0.00%    0.06%     0.09%
    9783040  simPts_task            3.20%    1.54%     1.49%
    9b70100  dtlTask                0.20%    5.47%     5.45%
    9dc3da8  emWeb                  0.40%    0.13%     0.09%
    a1c9400  hapiRxTask             0.20%    6.46%     6.30%
    a65ba38  hapiL3AsyncTask        0.40%    0.37%     0.35%
    abcd0c0  DHCP snoop             0.00%    0.02%     0.18%
    ac689d0  Dynamic ARP Inspect    0.40%    0.15%     0.07%
    ac7a6c0  SNMPTask               0.00%    1.32%     1.12%
    b8fa268  dot1s_timer_task       7.21%    2.99%     2.97%
    b9134c8  dot1s_task             0.00%    0.03%     0.03%
    bdb63e8  dot1xTimerTask         0.00%    0.01%     0.02%
    c520db8  radius_task            0.00%    0.01%     0.04%
    c52a0b0  radius_rx_task         0.00%    0.03%     0.03%
    c58a2e0  tacacs_rx_task         0.20%    0.21%     0.17%
    c59ce70  unitMgrTask            0.60%    0.20%     0.21%
    c5c7410  umWorkerTask           0.20%    0.17%     0.12%
    c77ef60  snoopTask              0.20%    0.18%     0.15%
    c8025a0  dot3ad_timer_task      2.20%    0.80%     0.68%
    d1860b0  dhcpsPingTask          1.80%    0.58%     0.45%
    d18faa0  SNTP                   0.00%    0.00%     0.01%
    d4dc3b0  sFlowTask              0.20%    0.03%     0.03%
    d6a4448  spmTask                0.20%    0.15%     0.14%
    d6b79c8  fftpTask               0.00%    0.02%     0.01%
    d6dcdf0  tCkptSvc               0.00%    0.00%     0.01%
    d7babe8  ipMapForwardingTask    0.20%    0.19%     0.28%
    dba91b8  tArpCallback           0.00%    0.06%     0.05%
    defb340  ARP Timer              4.60%    1.54%     1.36%
    e1332f0  tRtrDiscProcessingT    0.40%    0.14%     0.12%
    12cabe30 ip6MapLocalDataTask    0.00%    0.01%     0.01%
    12cb5290 ip6MapExceptionData    0.00%    8.60%     8.91%
    12cbe790 ip6MapNbrDiscTask      0.00%    0.02%     0.00%
    12e1a0d8 lldpTask               0.80%    0.24%     0.29%
    12f8cd10 dnsTask                0.00%    0.00%     0.01%
    140b4e18 dnsRxTask              0.40%    0.07%     0.04%
    14176898 DHCPv4 Client Task     0.00%    0.00%     0.02%
    1418a3f8 isdpTask               0.00%    0.00%     0.09%
    14416738 RMONTask               1.00%    0.44%     0.44%
    144287f8 boxs Req               0.40%    0.16%     0.21%
    15c90a18 sshd                   0.20%    0.06%     0.06%
    15cde0e0 sshd[0]                0.00%    0.03%     0.02%
    -----------------------------------------------------------------
    Total CPU Utilization          43.28%   78.79%    76.50%
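A per-process comparison of two such samples does not have to be done by eye. The following sketch parses rows of `show process cpu` output and diffs the 5 second column. The regular expression and the function name are my own, and only an excerpt of seven processes from the two samples above is embedded to keep the example short.

```python
import re

# PID, process name (may contain spaces), then three percentages.
ROW = re.compile(
    r"^\s*([0-9a-f]+)\s+(.+?)\s+([\d.]+)%\s+([\d.]+)%\s+([\d.]+)%\s*$"
)


def parse_cpu(output):
    """Map process name -> (5 s, 60 s, 300 s) CPU utilization in percent."""
    table = {}
    for line in output.splitlines():
        match = ROW.match(line)
        if match:
            table[match.group(2)] = tuple(float(v) for v in match.groups()[2:])
    return table


IPV6_SAMPLE = """
41be030 tNet0 27.05% 30.44% 21.13%
4682d40 bcmTX 4.20% 5.12% 3.83%
4d403a0 bcmRX 9.21% 12.64% 10.35%
5d59520 bcmLINK.0 5.41% 2.75% 2.15%
9b70100 dtlTask 5.81% 7.52% 5.62%
a1c9400 hapiRxTask 4.00% 8.84% 6.46%
12cb5290 ip6MapExceptionData 11.42% 12.95% 9.41%
Total CPU Utilization 89.77% 92.50% 77.29%
"""

IPV4_SAMPLE = """
41be030 tNet0 0.80% 23.49% 21.10%
4682d40 bcmTX 0.20% 3.35% 3.59%
4d403a0 bcmRX 4.80% 9.90% 10.06%
5d59520 bcmLINK.0 1.80% 2.38% 2.14%
9b70100 dtlTask 0.20% 5.47% 5.45%
a1c9400 hapiRxTask 0.20% 6.46% 6.30%
12cb5290 ip6MapExceptionData 0.00% 8.60% 8.91%
Total CPU Utilization 43.28% 78.79% 76.50%
"""

ipv6 = parse_cpu(IPV6_SAMPLE)
ipv4 = parse_cpu(IPV4_SAMPLE)

# Difference in the 5 second column, in percentage points.
delta = {name: round(ipv6[name][0] - ipv4[name][0], 2)
         for name in ipv6 if name in ipv4}
total = round(sum(delta.values()), 2)

for name, diff in sorted(delta.items(), key=lambda item: -item[1]):
    print("%-20s %+6.2f" % (name, diff))
print("%-20s %+6.2f" % ("sum", total))
```

The "Total CPU Utilization" line is skipped on purpose, since it does not start with a hexadecimal PID. Which of the three columns is the most meaningful one to compare depends on how long the transfer had been running when the sample was taken.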

Comparing the two output samples above by per-process CPU utilization showed that the major share of the higher CPU utilization in the case of an IPv6 connection is attributable to the processes tNet0, bcmTX, bcmRX, bcmLINK.0, dtlTask, hapiRxTask and ip6MapExceptionData. In a process-by-process comparison, those seven processes used 60.3% more CPU time in the case of an IPv6 connection compared to the case using an IPv4 connection. Unfortunately the documentation on what exactly the individual processes are doing is very sparse or not available at all. In order to further analyze this issue, a support case with the collected information was opened with Dell. A fix for the described issue was made available with firmware version 5.1.9.3.

The LAN stack of several Dell PowerConnect M8024-k switches sometimes showed erratic behaviour. There were several occasions where the switch stack would suddenly show a hugely increased latency in packet processing or where it would just stop passing certain types of traffic altogether. Usually a reload of the stack would restore its operation, and the increased latency or the packet drops would disappear with the reload as suddenly as they had appeared. The root cause of this was unfortunately never really found. Maybe it was the combination of functions (layer 3, dual stack IPv4 and IPv6, extensive ACLs, etc.) that were running simultaneously on the stack in this setup.

During both planned and unplanned failovers of the master switch in the stack, there is a time period of up to 120 seconds where no packets are processed by the switch stack. This occurs even with continuous forwarding enabled. I had a strong suspicion that this issue was related to the layer 3 instances running on the switch stack. A comparison between a pure layer 2 stack and a layer 3 enabled stack in a controlled test environment confirmed this. As soon as at least one layer 3 instance was added, the described delay occurred on switch failovers. The fact that migrating layer 3 instances from the former master switch to the new one takes some time makes sense to me. What's unclear to me is why this seems to also affect the layer 2 traffic going over the stack.
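One simple way to quantify such an interruption is to send probes through the stack at a fixed interval during the failover and to look for the longest gap between two successful replies. The following sketch shows the evaluation step only. The probe log is synthetic and the function name is hypothetical; this is not the tooling that was used in the test environment.

```python
def longest_outage(probes):
    """Return the longest gap in seconds between two successful probes
    that had at least one lost probe in between.

    `probes` is an iterable of (timestamp_in_seconds, reply_received),
    ordered by time.
    """
    longest = 0.0
    last_ok = None
    lost_since_ok = False
    for timestamp, ok in probes:
        if ok:
            if lost_since_ok and last_ok is not None:
                longest = max(longest, timestamp - last_ok)
            last_ok = timestamp
            lost_since_ok = False
        else:
            lost_since_ok = True
    return longest


# Synthetic log: one probe per second, replies missing from t=30 to t=149.
probe_log = [(float(t), not (30 <= t < 150)) for t in range(200)]
print("longest outage: %.0f s" % longest_outage(probe_log))
```

With one probe per second the result overestimates the real outage by up to one probe interval, which is precise enough to tell a sub-second failover from a two minute one.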

There were several occasions where the hardware and software MAC tables of the Dell PowerConnect switches got out of sync. While the root cause (hardware defect, bit flip, power surge, cosmic radiation, etc.) of this issue is unknown, the effect was a sudden reboot of the affected switch. Luckily we had console servers in place, which stored a console output history from the time the issue occurred. After raising a support case with Dell with the information from the console output, we got a firmware update (v5.1.9.4) in which the issue would no longer trigger a sudden reboot, but instead log an appropriate message to the switch's log. With this fix the out of sync MAC tables will still require a reboot of the affected switch, but this can now be done in a controlled fashion. Still, a solution requiring no reboot at all would have been much preferable.

While querying the Dell PowerConnect switches with the SNMP protocol for monitoring purposes, obscure and confusing messages containing the string MGMT_ACAL would reproducibly be logged into the switch's log. See the article Check_MK Monitoring - Dell PowerConnect Switches - Global Status in this blog for the gory details.

With a stack of Dell PowerConnect M8024-k switches the information provided via the SNMP protocol would occasionally get out of sync with the information available from the CLI. E.g. the temperature values from the stack stack1 of LAN switches compared to the standalone SAN switches standalone{1,2,3,4,5,6}:

    user@host:# for HST in stack1 standalone1 standalone2 standalone3 stack2 standalone4 standalone5 standalone6; do echo "$HST: "; for OID in 4 5; do echo -n " "; snmpbulkwalk -v2c -c [...] -m '' -M '' -Cc -OQ -OU -On -Ot $HST .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.${OID}; done; done
    stack1:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4 = No Such Object available on this agent at this OID
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5 = No Such Object available on this agent at this OID
    standalone1:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 0
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 40
    standalone2:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 0
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 37
    standalone3:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 0
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 32
    stack2:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.2.0 = 0
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 42
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.2.0 = 41
    standalone4:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 39
    standalone5:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 39
    standalone6:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 35

At the same time the CLI management interface of the switch stack showed the correct temperature values:

    stack1# show system
    System Description: Dell Ethernet Switch
    System Up Time: 89 days, 01h:50m:11s
    System Name: stack1
    Burned In MAC Address: F8B1.566E.4AFB
    System Object ID: 1.3.6.1.4.1.674.10895.3041
    System Model ID: PCM8024-k
    Machine Type: PowerConnect M8024-k
    Temperature Sensors:
    Unit     Description       Temperature    Status
                                (Celsius)
    ----     -----------       -----------    ------
    1        System            39             Good
    2        System            39             Good
    [...]

Only after a reboot of the switch stack, the information provided via the SNMP protocol:

    user@host:# for HST in stack1 standalone1 standalone2 standalone3 stack2 standalone4 standalone5 standalone6; do echo "$HST: "; for OID in 4 5; do echo -n " "; snmpbulkwalk -v2c -c [...] -m '' -M '' -Cc -OQ -OU -On -Ot $HST .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.${OID}; done; done
    stack1:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.2.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 37
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.2.0 = 37
    standalone1:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 0
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 39
    standalone2:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 37
    standalone3:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 32
    stack2:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 0
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.2.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 41
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.2.0 = 41
    standalone4:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 38
    standalone5:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 38
    standalone6:
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.4.1.0 = 1
     .1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1.5.1.0 = 34

would again be in sync with the information available from the CLI:

    stack1# show system
    System Description: Dell Ethernet Switch
    System Up Time: 0 days, 00h:05m:32s
    System Name: stack1
    Burned In MAC Address: F8B1.566E.4AFB
    System Object ID: 1.3.6.1.4.1.674.10895.3041
    System Model ID: PCM8024-k
    Machine Type: PowerConnect M8024-k
    Temperature Sensors:
    Unit     Description       Temperature    Status
                                (Celsius)
    ----     -----------       -----------    ------
    1        System            37             Good
    2        System            37             Good
    [...]
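For monitoring purposes it helps to detect this condition automatically instead of stumbling over missing values. The following sketch parses snmpbulkwalk output in the `OID = value` form shown above and separates real values from "No Such Object" answers. The interpretation of the OID suffix (column 5 holding the temperature, followed by the unit number) is inferred from the outputs above and not taken from the MIB; the function names are my own.

```python
BASE = ".1.3.6.1.4.1.674.10895.5000.2.6132.1.1.43.1.8.1"


def parse_walk(output):
    """Split 'OID = value' lines into a dict of values and a list of
    OIDs for which the agent returned a 'No Such ...' answer."""
    values, missing = {}, []
    for line in output.splitlines():
        if " = " not in line:
            continue
        oid, value = (part.strip() for part in line.split(" = ", 1))
        if value.startswith("No Such"):
            missing.append(oid)
        else:
            values[oid] = value
    return values, missing


def temperatures(values):
    """Map stack unit number -> temperature, assuming column 5 is the
    temperature and the next OID component is the unit number."""
    prefix = BASE + ".5."
    temps = {}
    for oid, value in values.items():
        if oid.startswith(prefix):
            unit = int(oid[len(prefix):].split(".")[0])
            temps[unit] = int(value)
    return temps


OUT_OF_SYNC = """
 %(b)s.4 = No Such Object available on this agent at this OID
 %(b)s.5 = No Such Object available on this agent at this OID
""" % {"b": BASE}

AFTER_REBOOT = """
 %(b)s.4.1.0 = 1
 %(b)s.4.2.0 = 1
 %(b)s.5.1.0 = 37
 %(b)s.5.2.0 = 37
""" % {"b": BASE}

for label, sample in (("out of sync", OUT_OF_SYNC), ("after reboot", AFTER_REBOOT)):
    values, missing = parse_walk(sample)
    print(label, "->", temperatures(values), "missing:", len(missing))
```

A check built on this idea could report an UNKNOWN state whenever the list of missing OIDs is not empty, rather than silently dropping the sensor.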

Conclusion

Although the setup built with the Dell PowerConnect switches and the other hardware components was working and providing its basic, intended functionality, there were some pretty big and annoying limitations associated with it. A lot of these limitations would not have been that significant to the entire setup if certain design decisions had been made more carefully, for example if the layer 3 part of the LAN had been implemented in external network components or if a proper fully meshed, fabric-based SAN had been favored over what can only be described as a legacy technology. From the reliability, availability and serviceability (RAS) points of view, the setup is also far from ideal. By daisy-chaining the Dell PowerEdge M1000e blade chassis, stacking the LAN switches, stretching the LAN and SAN over both chassis and by connecting external devices through the external ports of the Dell PowerConnect switches, there are a lot of parts in the setup that depend on each other. This makes normal operations difficult at best and can have disastrous effects in case of a failure.

In retrospect, either using pure pass-through network modules in the Dell PowerEdge M1000e blade chassis in conjunction with capable 10GE top-of-rack switches or using the much more capable Dell Force10 MXL switches in the Dell PowerEdge M1000e blade chassis seem to be better solutions. The premium for Dell Force10 MXL switches of about €2000 list price per device compared to the Dell PowerConnect switches seems negligible compared to the costs that arose through debugging, bugfixing and finding workarounds for the various limitations of the Dell PowerConnect switches. In either case a pair of capable, central layer 3 devices for gateway redundancy, routing and possibly fine-grained traffic control would be advisable.

For simpler setups, without some of the more special requirements of this particular setup, the Dell PowerConnect switches still offer a nice price-performance ratio. Especially with regard to their 10GE port density.

2016-05-16 // Check_MK Monitoring - Dell PowerConnect Switches

Dell PowerConnect and Dell PowerConnect M-Series switches can – with regard to their most important aspects like CPU, fans, PSU and temperature – already be monitored with the standard Check_MK distribution. This article introduces an enhanced version and additional Check_MK service checks to monitor additional aspects of Dell PowerConnect switches. It is targeted mainly towards the Dell PowerConnect M-Series switches used in Dell PowerEdge M1000e blade chassis, but can probably be used on standalone Dell PowerConnect switches as well.

For the impatient and TL;DR here is the Check_MK package of the enhanced version of the Dell PowerConnect monitoring checks:

Enhanced version of the Dell PowerConnect monitoring checks (Compatible with Check_MK versions 1.2.6 and earlier)

Enhanced version of the Dell PowerConnect monitoring checks (Compatible with Check_MK versions 1.2.8 and later)

The sources are to be found in my Check_MK repository on GitHub

The Dell PowerConnect M-Series switches to be used in Dell PowerEdge M1000e blade chassis – and possibly some newer Dell PowerConnect standalone switches too – are based on Broadcom FASTPATH silicon. While this hardware base introduces a plethora of other issues to be covered in detail in a separate article, it also introduces the possibility of breaking backwards compatibility with older Dell PowerConnect models from a monitoring point of view. Therefore, the new checks to cover the Broadcom FASTPATH based hardware were moved to an entirely new namespace. The file names of the new checks now use the prefix dell_powerconnect_bcm_ in contrast to the already existing stock Check_MK checks with their prefix dell_powerconnect_. Another difference to the stock Check_MK checks is the use of the FASTPATH Enterprise MIBs, which are specific to devices based on Broadcom silicon. The only exceptions are the checks dell_powerconnect_bcm_global_status and dell_powerconnect_bcm_dnsstats, which both monitor items that are not covered by the FASTPATH Enterprise MIBs.

All checks have been verified to work with the firmware versions 5.1.8.x and 5.1.9.x. For the newly introduced check dell_powerconnect_bcm_global_status the firmware version 5.1.9.4 or later is needed in order to avoid spurious error messages in the switch event log. See the section Additional Checks below for a more detailed explanation.

The discontinued, modified and additional checks are described in greater detail in the following three respective sections:

Discontinued Checks

The two service checks dell_powerconnect_fans and dell_powerconnect_psu provided by the standard Check_MK distribution have become redundant for the Dell PowerConnect M-Series switches. The items to be monitored by both are not present in those devices, since the Dell PowerEdge M1000e blade chassis provides both central cooling and power supply facilities. Accordingly, the cooling and power supply facilities should be monitored via the Dell Chassis Management Controller.

Modified Checks

The two service checks dell_powerconnect_cpu and dell_powerconnect_temp have been renamed to dell_powerconnect_bcm_cpu and dell_powerconnect_bcm_temp respectively. Both have been modified to use the new dictionary based parameters and factory settings for the CPU and temperature warning and critical levels. An SNMP example output for all OIDs used has been added to both service checks for documentation purposes. Manual pages, PNP4Nagios templates, WATO and Perf-O-Meter plugins have also been added for both service checks. With the added WATO plugins it is now possible to configure the CPU and temperature warning and critical levels through the WATO WebUI. The configuration options for the CPU levels can be found under:

-> Host & Service Parameters

-> Parameters for discovered services

-> Operating System Resources

-> Dell PowerConnect CPU usage

-> Create rule in folder ...

[x] The levels for the overall CPU usage on Dell PowerConnect switches

The configuration options for the temperature levels can be found under:

-> Host & Service Parameters

-> Parameters for discovered services

-> Temperature, Humidity, Electrical Parameters, etc.

-> Dell PowerConnect temperature

-> Create rule in folder ...

[x] Temperature levels for Dell PowerConnect switches
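As a rough illustration of what "Dictionary based parameters and factory settings" amounts to, the following sketch overlays built-in defaults with the values coming from a configured rule. It is not taken from the actual checks: the function name, the `levels` key and the numbers are hypothetical placeholders.

```python
# Hypothetical factory settings; the key name and the numbers are placeholders
# and are not taken from the real dell_powerconnect_bcm_* checks.
FACTORY_SETTINGS = {"levels": (80.0, 90.0)}  # (warning, critical)


def effective_params(rule_params=None):
    """Return the factory settings overlaid with the values from a rule."""
    params = dict(FACTORY_SETTINGS)   # copy, so the defaults stay untouched
    params.update(rule_params or {})  # a configured rule wins over the default
    return params


print(effective_params())                          # no rule: defaults apply
print(effective_params({"levels": (70.0, 85.0)}))  # rule overrides the default
```

The point of the dictionary form is that a rule only needs to name the keys it wants to change, while everything else falls back to the factory settings.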

The following image shows a status output example for the dell_powerconnect_bcm_cpu service check from the WATO WebUI:

Three average CPU utilization values for the time sample intervals 5, 60 and 300 seconds are checked. The Perf-O-Meter is split accordingly into three sections in order to be able to display all three average CPU utilization values at once.

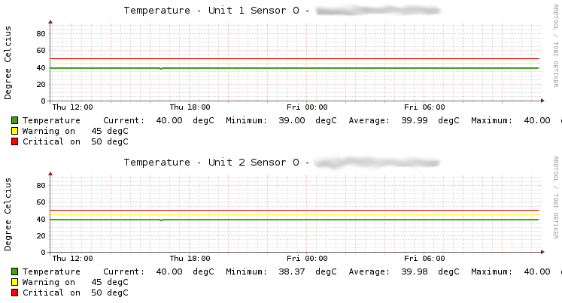

The following image shows a status output example for the dell_powerconnect_bcm_temp service check from the WATO WebUI:

This example shows the status and the current values of the temperature sensors in a switch stack with two switch members.

The following two images show examples of PNP4Nagios graphs for both service checks:

Additional Checks

Overview

The following table shows a condensed overview of the additional Check_MK service checks and their available components.

| Service check name | Description | Alarm | Manpage | PNP4Nagios template | Perf-O-Meter plugin | WATO plugin |
|---|---|---|---|---|---|---|
| dell_powerconnect_bcm_arp_cache | Checks the current number of entries in the ARP cache against default or configured warning and critical threshold values. | yes | yes | yes | yes | yes |
| dell_powerconnect_bcm_cos_queue | Determines the number of packets dropped at each CoS queue for the CPU. | | yes | yes | | |
| dell_powerconnect_bcm_cpu_proc | Monitors the CPU utilization on a per process level. | yes | yes | yes | | yes |
| dell_powerconnect_bcm_dnsstats | Determines the number of DNS queries (total and several error states defined by RFC 1035) of the system's resolver. | | yes | yes | | |
| dell_powerconnect_bcm_global_status | Determines the global status of the “product”, via a Dell-specific SNMP OID. | yes | yes | | | |
| dell_powerconnect_bcm_ip_conflict | Determines if an IP address conflict has been detected on the switch. | yes | yes | | | |
| dell_powerconnect_bcm_logstats | Determines the number of log messages (total, dropped, relayed to syslog hosts) generated on the system. | | yes | yes | | |
| dell_powerconnect_bcm_mbuf | Determines the number of memory/message buffer allocations – or failures thereof – for packets arriving at the system's CPU. | | yes | yes | | |
| dell_powerconnect_bcm_memory | Monitors the current memory usage. | yes | yes | yes | yes | yes |
| dell_powerconnect_bcm_sntp | Checks the current status of the SNTP client on the switch. | yes | yes | yes | | yes |
| dell_powerconnect_bcm_ssh_sessions | Checks the number of currently active SSH sessions against the default limit of five allowed SSH sessions. | yes | yes | yes | yes | yes |

The first two columns should be pretty self-explanatory.

The Alarm column shows which checks will generate alarms based on the particular parameters monitored. Checks without an entry in the Alarm column are designed purely for long-term trends via their respective PNP4Nagios templates. All checks with an entry in the Alarm column use the new Dictionary based parameters and factory settings for their respective warning and critical levels. Where reasonable, those warning and critical levels are configurable through the WATO WebUI via an appropriate WATO plugin. See the last column, titled WATO plugin for the checks this applies to.

Manual pages are provided for each service check for documentation purposes. An SNMP example output is provided as a comment within the check script for all the OIDs used in the service check.

For all checks with an entry in the PNP4Nagios template column, a PNP4Nagios template is provided in order to properly display the performance data delivered by the service check. Perf-O-Meter plugins are provided where reasonable, in order to display selected performance metrics in the service check overview of a host.

The specifics of each additional Check_MK service check are described in greater detail in the following sections.

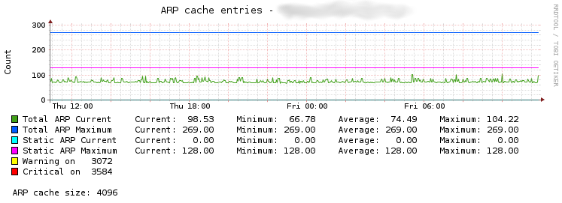

ARP Cache

The Check_MK service check dell_powerconnect_bcm_arp_cache monitors the current total number of entries in the ARP cache on Dell PowerConnect switches. This number is compared to either the default or configured warning and critical threshold values, and an alarm is raised accordingly. With the added WATO plugin it is possible to configure the warning and critical levels through the WATO WebUI and thus override the default values (warning: 3072; critical: 3584). The configuration options for the ARP cache levels can be found under:

-> Host & Service Parameters

-> Parameters for discovered services

-> Operating System Resources

-> Dell PowerConnect ARP cache

-> Create rule in folder ...

[x] The levels for the number of ARP cache entries on Dell PowerConnect switches

The following image shows a status output example for the dell_powerconnect_bcm_arp_cache service check from the WATO WebUI:

This example shows the current number of entries in the ARP cache along with the warning and critical threshold values.
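The comparison against the warning and critical levels can be sketched as follows. This is a minimal illustration rather than the code of the actual check; the function name is made up, and it assumes that a level counts as reached when the value is equal to or above it. The default levels are the ones quoted above.

```python
def check_upper_levels(value, warn, crit):
    """Map a value to a Nagios state: 0 = OK, 1 = WARNING, 2 = CRITICAL.

    Assumption: a level is considered reached at "greater than or equal".
    """
    if value >= crit:
        return 2
    if value >= warn:
        return 1
    return 0


# Default levels of dell_powerconnect_bcm_arp_cache as quoted in the text
ARP_WARN, ARP_CRIT = 3072, 3584

# The entry counts below are arbitrary sample values
for entries in (1200, 3072, 4000):
    print(entries, check_upper_levels(entries, ARP_WARN, ARP_CRIT))
```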

In addition to the already mentioned total number of entries in the ARP cache, several other metrics are also collected as performance data. These are the overall ARP cache size, the number of static ARP entries and the peak values for both the current and the static number of ARP entries. The following image shows an example of the PNP4Nagios graph for the service check:

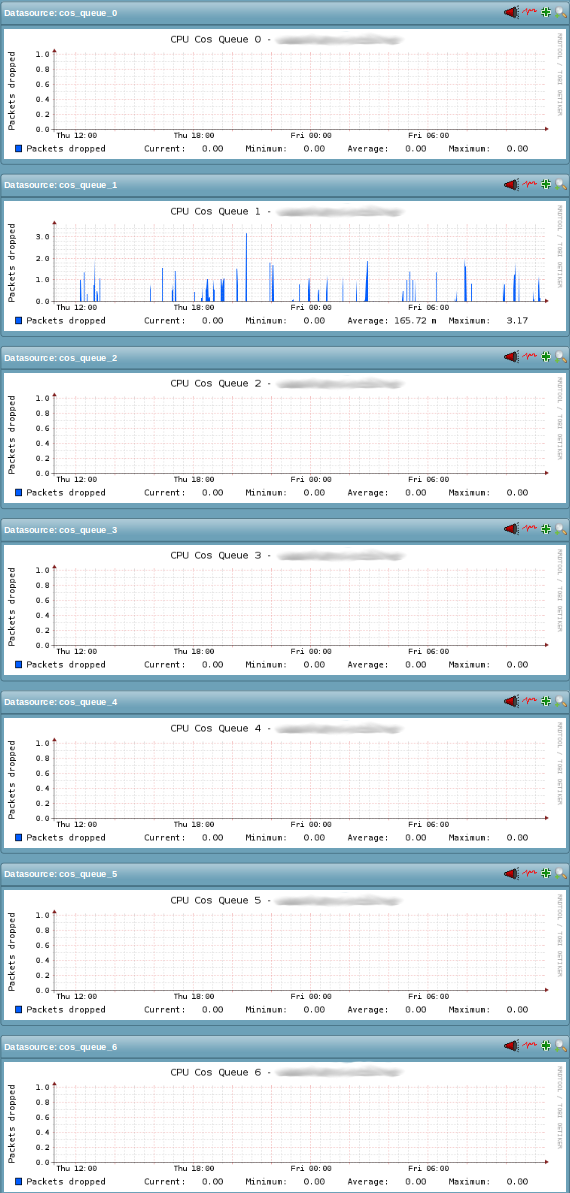

CoS Queue

The Check_MK service check dell_powerconnect_bcm_cos_queue monitors the number of packets dropped at each CoS queue for the CPU (quoted from the FASTPATH Enterprise MIB). Unfortunately the only other description available in the FASTPATH Enterprise MIBs is almost as cryptic as the first one: Number of packets dropped at this CPU CoS queue because the queue was full. The metric probably relates to the switch's Class of Service (CoS) feature in a Quality of Service (QoS) setup. Currently, the dell_powerconnect_bcm_cos_queue service check is used purely for long-term trends via its respective PNP4Nagios template and thus only gathers its metrics as performance data.

The following image shows a status output example for the dell_powerconnect_bcm_cos_queue service check from the WATO WebUI:

The following image shows an example of the PNP4Nagios graph for the service check:

Process CPU Usage

The Check_MK service check dell_powerconnect_bcm_cpu_proc monitors the same CPU utilization metrics as the previously described dell_powerconnect_bcm_cpu service check, but on a more detailed, per process level. The three average CPU utilization values for the time sample intervals 5, 60 and 300 seconds are compared, for each process, to either the default or configured warning and critical threshold values, and an alarm is raised accordingly. The check's logic currently has the limitation that the warning and critical threshold values apply globally to all processes; individual threshold values for each process are not supported. With the added WATO plugin it is possible to configure the warning and critical levels through the WATO WebUI and thus override the default values (warning: 80%; critical: 90%) for the average CPU utilization. The configuration options for the per process CPU utilization levels can be found under:

-> Host & Service Parameters

-> Parameters for discovered services

-> Operating System Resources

-> Dell PowerConnect CPU usage (per process)

-> Create rule in folder ...

[x] The levels for the per process CPU usage on Dell PowerConnect switches
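To make the "one pair of levels for all processes" behaviour more tangible, here is a small sketch of such an evaluation. It is not the code of the actual check: the function name, the data layout and the sample values are assumptions, and levels are treated as reached at "greater than or equal". Only the process names and the default levels are taken from this article.

```python
STATE_NAMES = {0: "OK", 1: "WARN", 2: "CRIT"}
INTERVALS = (5, 60, 300)  # time sample intervals in seconds


def check_process_cpu(samples, warn=80.0, crit=90.0):
    """Evaluate per process CPU averages against one global pair of levels.

    samples: {process name: (avg 5s, avg 60s, avg 300s)}, values in percent.
    Returns (worst state, list of (process, interval, value, state name)).
    """
    worst = 0
    details = []
    for name, values in sorted(samples.items()):
        for interval, value in zip(INTERVALS, values):
            state = 2 if value >= crit else 1 if value >= warn else 0
            if state:
                details.append((name, interval, value, STATE_NAMES[state]))
            worst = max(worst, state)
    return worst, details


# Made-up utilization values for two of the processes mentioned in the text
print(check_process_cpu({"SNMPTask": (85.0, 20.0, 5.0), "bcmRX": (1.0, 2.0, 3.0)}))
```

Supporting individual levels per process would mean replacing the two keyword arguments with a lookup keyed by process name, which is exactly what the check does not do at the moment.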

The following image shows a status output example for the dell_powerconnect_bcm_cpu_proc service check from the WATO WebUI:

The following image shows only four examples of PNP4Nagios graphs for the service checks:

The selected example graphs show the average CPU utilization over the 5, 60 and 300 seconds time sample intervals for the processes SNMPTask, bcmRX, dot1s_timer_task and osapiTimer. Mind though that this is only a small selection of the various processes that can be found running on the Broadcom FASTPATH based Dell PowerConnect switches. Some processes are always to be found, others appear only after a specific feature – covered by appropriate processes – is enabled on the switch. Unfortunately i've not been able to find a complete list of the possible processes nor a good and comprehensive description of the purpose of each process. Sometimes – like in the case of the process SNMPTask – the purpose can be guessed from the process name. So overall i'd say the per process CPU utilization metric is probably best used as a metric for long-term trends in conjunction with support from Broadcom or Dell, when dealing with a specific issue on the switch or an unusually high CPU utilization of a specific process.

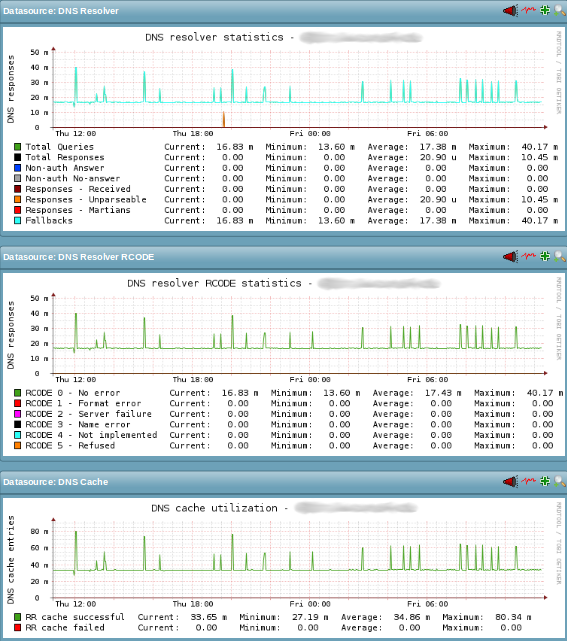

DNS Statistics

The Check_MK service check dell_powerconnect_bcm_dnsstats monitors various aspects and metrics of the switch's local DNS resolver. The metrics gathered can be grouped into three categories:

DNS Resolver: The number of DNS resolver queries and the number of DNS responses to those queries. For the DNS responses, the number of responses in each response category is gathered as well. The response categories are: Non-auth Answers, Non-auth No-answer, Received Responses, Unparsable Responses, Martians Responses and Fallbacks.

DNS Resolver RCODE: The number of DNS resolver responses by response code. See RFC 1035 for the details on DNS response codes.

DNS Cache: The number of DNS resource records that have been successfully added or have failed to be added to the DNS resolver cache.

See RFC 1612, RFC 1035 and the service check's man page for a detailed description of the metrics covered by the dell_powerconnect_bcm_dnsstats service check. Currently, the dell_powerconnect_bcm_dnsstats service check is used purely for long-term trends via its respective PNP4Nagios template and thus only gathers its metrics as performance data.

The following image shows a status output example for the dell_powerconnect_bcm_dnsstats service check from the WATO WebUI:

The following image shows an example of the three PNP4Nagios graphs for the service check:

Global Status

The Check_MK service check dell_powerconnect_bcm_global_status monitors just one metric, the productStatusGlobalStatus from the Dell Vendor MIB for PowerConnect devices. As the name of the metric suggests, it represents an aggregated global status for a Dell PowerConnect device. The global status can assume one of the three values, shown in the following table:

| Numeric Value | Textual Value | Description |
|---|---|---|
| 3 | OK | “If fans and power supplies are functioning and the system did not reboot because of a HW watchdog failure or a SW fatal error condition.” |
| 4 | Non-critical | “If at least one power supply is not functional or the system rebooted at least once because of a HW watchdog failure or a SW fatal error condition.” |
| 5 | Critical | “If at least one fan is not functional, possibly causing a dangerous warming up of the device.” |

While the information about the fan and PSU status is redundant for the Dell PowerConnect M-Series switches, the information about hard- and software error conditions might be quite valuable.
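The translation of the three values from the table above into a monitoring state could look roughly like the following sketch. The numeric and textual values are the ones from the table; the mapping to the Nagios states (0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN) and the function name are assumptions made for illustration and not necessarily what the actual check does.

```python
# Numeric and textual values as listed in the table above. The mapping to
# Nagios states is an assumption made for this sketch.
GLOBAL_STATUS = {
    3: ("OK", 0),
    4: ("Non-critical", 1),
    5: ("Critical", 2),
}


def evaluate_global_status(raw_value):
    """Translate the raw SNMP value into a (Nagios state, text) tuple."""
    try:
        name, state = GLOBAL_STATUS[int(raw_value)]
    except (KeyError, ValueError, TypeError):
        # Anything outside the documented values is reported as UNKNOWN
        return 3, "unexpected global status value: %r" % (raw_value,)
    return state, "global status is %s" % name


for value in ("3", "4", "5", "7"):
    print(value, evaluate_global_status(value))
```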

When we first implemented the enhanced version of the Dell PowerConnect monitoring checks, we noticed spurious error messages suddenly appearing in the switch event log and subsequently in our syslog servers. The messages showing up looked like the following example:

<189> OCT 14 12:48:50 <Management IP address>-1 MGMT_ACAL[251047504]: macal_api.c(873) 38462 %% macalRuleActionGet(): List does not exist.

Disabling one check after another, we narrowed the source of this error message down to the dell_powerconnect_bcm_global_status service check. Logging a support case with Dell eventually led to the following explanation from Dell PowerConnect engineering:

Hi Frank,

I got an update from our engineering team and they can see the problem

when snmpwalk is executed against switch but issue is not seen if snmpget

is executed on all OIDs.

They are working on a fix.

Once fix is available it will be included in the next FW patch release

for this switch. […]

At the time we were running the newest available firmware, which back then was version 5.1.9.3. After updating to the firmware version 5.1.9.4 which was released later on, the above error messages stopped showing up.

IP Address Conflict Detection

The Check_MK service check dell_powerconnect_bcm_ip_conflict monitors the status of the built-in IP address conflict detection feature of a Dell PowerConnect switch. If an IP address conflict is detected, an alarm with the status warning is raised. In addition to the alarm status, the service check will also report the conflicting IP address, the MAC address of the device causing the conflict (if available) and the date and time the conflict was detected. The last bit of information is relative to the switch's date and time settings. Needless to say, a properly configured date and time or a time synchronisation via NTP on the switch is quite helpful in such a case.

Once an IP address conflict is detected by a Dell PowerConnect switch, this status will not resolve itself automatically or time out in any way. The issue has to be acknowledged manually on the Dell PowerConnect switch. This can be achieved e.g. on the switch's CLI with the following commands:

    switch> enable
    switch# clear ip address-conflict-detect

Log Statistics

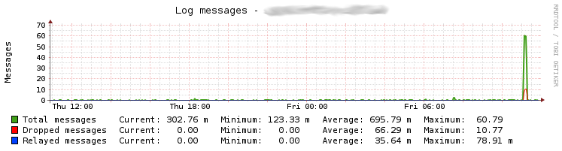

The Check_MK service check dell_powerconnect_bcm_logstats monitors several metrics of the logging facility on Dell PowerConnect switches. These are the:

total number of log messages received by the log process, including dropped and ignored messages.

number of dropped log messages, which could not be processed by the log process due to an error or lack of resources.

number of relayed log messages. These are log messages which have been forwarded to a remote syslog host by the log process. If multiple remote syslog hosts are configured, each message is counted multiple times, once for each of the configured syslog hosts.

Currently, the dell_powerconnect_bcm_logstats service check is used purely for long-term trends via its respective PNP4Nagios template and thus only gathers its metrics as performance data.

The following image shows a status output example for the dell_powerconnect_bcm_logstats service check from the WATO WebUI:

The following image shows an example of the PNP4Nagios graph for the service check:

Unfortunately the information as to why log messages might have been erroneous or which resources (CPU cycles, free memory, etc.) were missing at the time of processing the log message is scarce. The metrics about the logging facility are therefore – again – probably best used as metrics for long-term trends in conjunction with support from Broadcom or Dell, when dealing with a specific issue on the switch.

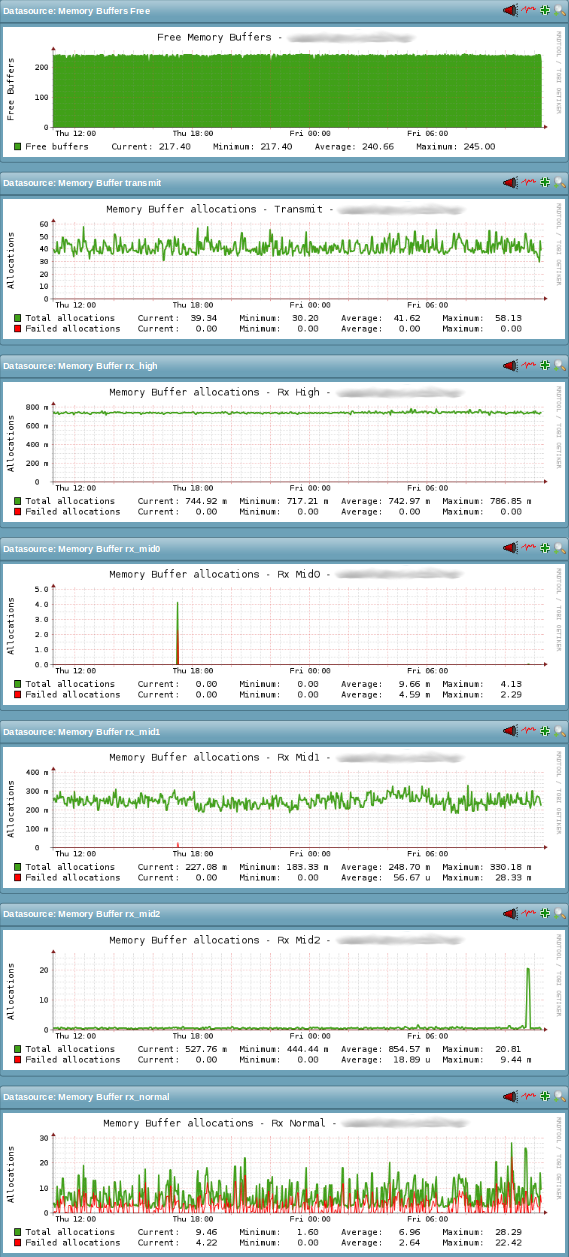

Memory Buffers

The Check_MK service check dell_powerconnect_bcm_mbuf monitors two groups of metrics regarding the memory or message buffers on Dell PowerConnect switches. The first group is the overall number of currently available memory or message buffers on the switch. This group consists of just one metric. The second group is the number of total and the number of failed memory or message buffer allocation attempts for packets arriving at the switch's CPU. Those two metrics are gathered for each of the memory or message buffer classes. The names of the currently available memory or message buffer classes are “Transmit”, “Rx High”, “Rx Mid0”, “Rx Mid1”, “Rx Mid2” and “Rx Normal”.

The dell_powerconnect_bcm_mbuf service check is currently used only for long-term trends via its respective PNP4Nagios template and thus only gathers its metrics as performance data.

The following image shows a status output example for the dell_powerconnect_bcm_mbuf service check from the WATO WebUI:

The following image shows an example of the seven PNP4Nagios graphs for the service check. One graph for the overall available memory or message buffers and one graph for the allocation attempts on each of the six memory or message buffer classes:

Similarly to the process names described in the previous section Process CPU Usage, i've also not been able to find a good and comprehensive description of the memory or message buffer classes defined on the Broadcom FASTPATH based Dell PowerConnect switches. Some meaning can again be derived from the name of the particular memory or message buffer class, but it is much more limited than in the case of the process names. Beyond that, questions such as the following immediately come to mind:

which type of packets are forwarded to the CPU instead of being directly processed by the switching silicon of the device?

why are there several receive classes (“Rx …”) but only one transmit class?

what is the difference between the multiple receive classes and by what algorithm are packets assigned to a specific receive class?

what is likely the root cause of a failed memory or message buffer allocation attempt?

what are the effects of a failed memory or message buffer allocation attempt? Are packets going to be dropped due to this, or is the allocation attempt retried?

what design, implementation and configuration options should be taken into consideration in order to avoid failed memory or message buffer allocation attempts?

Unfortunately they remain unanswered due to the lack of comprehensive documentation. The metrics regarding the memory or message buffers are therefore – again – probably best used for long-term trends in conjunction with support from Broadcom or Dell, when dealing with a specific issue on the switch.

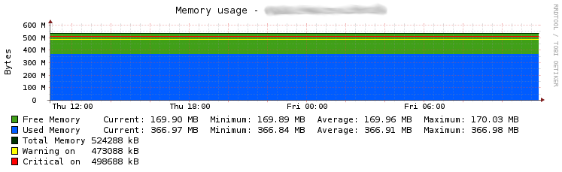

Memory Usage

The Check_MK service check dell_powerconnect_bcm_memory monitors the current memory (RAM) usage on Dell PowerConnect switches. The amount of currently free memory is compared to either the default or configured warning and critical threshold values, and an alarm is raised accordingly. With the added WATO plugin it is possible to configure the warning and critical levels through the WATO WebUI and thus override the default values (warning: 51200 KBytes; critical: 25600 KBytes of free memory). The configuration options for the free memory levels can be found under:

-> Host & Service Parameters

-> Parameters for discovered services

-> Operating System Resources

-> Dell PowerConnect memory usage

-> Create rule in folder ...

[x] The levels for the amount of free memory on Dell PowerConnect switches

The following image shows a status output example for the dell_powerconnect_bcm_memory service check from the WATO WebUI:

This example shows the current amount of free memory and the total memory size both measured in kilobytes.
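In contrast to most of the other checks, the levels here are lower bounds, since it is the amount of free memory that is evaluated. A minimal sketch of such a comparison is shown below. It is not the code of the actual check: the function name and the sample values are made up, and it assumes that a level counts as reached when the free memory is equal to or below it. The default levels are the ones quoted above.

```python
def check_free_memory(free_kb, total_kb, warn_kb=51200, crit_kb=25600):
    """Evaluate free memory against lower levels (all values in KBytes).

    Returns (Nagios state, text) with 0 = OK, 1 = WARNING, 2 = CRITICAL.
    Assumption: a level is considered reached at "less than or equal".
    """
    if free_kb <= crit_kb:
        state = 2
    elif free_kb <= warn_kb:
        state = 1
    else:
        state = 0
    used_percent = 100.0 * (total_kb - free_kb) / total_kb
    text = "%d of %d KBytes free (%.1f%% used)" % (free_kb, total_kb, used_percent)
    return state, text


# Arbitrary sample values, not measured on a real switch
print(check_free_memory(131072, 262144))
print(check_free_memory(40000, 262144))
```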

The following image shows an example of the PNP4Nagios graph for the service check:

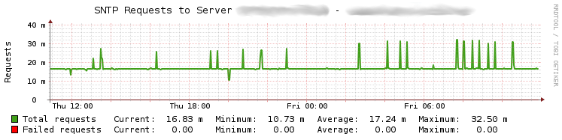

SNTP Statistics

The Check_MK service check dell_powerconnect_bcm_sntp monitors the current status of the SNTP client on Dell PowerConnect switches. In order to achieve this, the check iterates over the list of SNTP servers configured as time references for the SNTP client on the switch. For each configured SNTP server, the status of the last connection attempt from the SNTP client on the switch to that particular SNTP server is evaluated. The overall number of SNTP servers with a connection status equal to success is counted. This number is compared to either the default or configured warning and critical threshold values, and an alarm is raised accordingly. With the added WATO plugin it is possible to configure the warning and critical levels through the WATO WebUI and thus override the default values (warning: 1; critical: 0 servers successfully connected). The configuration options for the levels of successful SNTP server connections can be found under:

-> Host & Service Parameters

-> Parameters for discovered services

-> Applications, Processes & Services

-> Dell PowerConnect SNTP status

-> Create rule in folder ...

[x] Successful SNTP server connections on Dell PowerConnect switches

The following image shows a status output example for the dell_powerconnect_bcm_sntp service check from the WATO WebUI:

This example shows the current status of the SNTP client, which has successfully connected to the one configured SNTP server.

In addition to the aggregated current connection status of the SNTP client to all configured SNTP servers, two other metrics are – for each SNTP server – collected as performance data. These are the overall number of SNTP requests – including retries – and the number of failed SNTP requests the client made to a particular SNTP server. The following image shows an example of the PNP4Nagios graph for the service check:

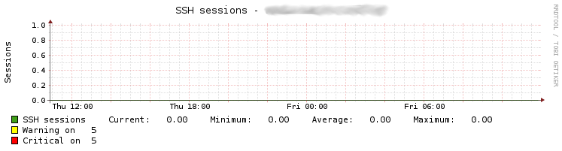

SSH Sessions

The Check_MK service check dell_powerconnect_bcm_ssh_sessions monitors just one metric, the number of currently active SSH sessions on the Dell PowerConnect device. This number is compared to either the default or configured warning and critical threshold values, and an alarm is raised accordingly. With the added WATO plugin it is possible to configure the warning and critical levels through the WATO WebUI and thus override the default values (warning: 5; critical: 5 active SSH sessions). The configuration options for the number of active SSH sessions can be found under:

-> Host & Service Parameters

-> Parameters for discovered services

-> Applications, Processes & Services

-> Dell PowerConnect SSH sessions

-> Create rule in folder ...

[x] Active SSH sessions on Dell PowerConnect switches

The following image shows a status output example for the dell_powerconnect_bcm_ssh_sessions service check from the WATO WebUI:

This example shows the current number of active SSH sessions along with the warning and critical threshold values.

The following image shows an example of the PNP4Nagios graph for the service check:

Conclusion

Adding the enhanced version of the Dell PowerConnect monitoring checks to your Check_MK server enables you to monitor various additional aspects of your Dell PowerConnect devices. New Dell PowerConnect devices should pick up the additional service checks immediately. Existing Dell PowerConnect devices might need a Check_MK inventory to be run explicitly on them in order to pick up the additional service checks.

Along with the built-in Check_MK monitoring of interfaces of network equipment, the monitoring of services (SSH, HTTP and HTTPS) and the status of certificates, as well as the previously described monitoring of RMON Interface Statistics, this enhanced version of the Dell PowerConnect monitoring checks enables you to create a complete monitoring solution for your Dell PowerConnect M-Series switches.

I hope you find the provided new and enhanced checks useful and enjoyed reading this blog post. Please don't hesitate to drop me a note if you have any suggestions or run into any issues with the provided checks.

2016-02-05 // Check_MK Monitoring - RMON Interface Statistics

Check_MK provides the check rmon_stats to collect and monitor Remote Network MONitoring (RMON) statistics for interfaces of network equipment. While this stock check probably works fine with Cisco network devices, it does – out of the box – not work with network equipment from other vendors like e.g. Dell PowerConnect switches. This article shows the modifications necessary to make the rmon_stats check work with non-Cisco network equipment. Along the way, other shortcomings of the stock rmon_stats are discussed and the necessary modifications to address those issues are shown.

For the impatient and TL;DR, here is the enhanced version of the rmon_stats check along with a slightly beautified version of the accompanying PNP4Nagios template:

Enhanced version of the rmon_stats check

Slightly beautified version of the rmon_stats PNP4Nagios template

The limitation of the rmon_stats check to Cisco network devices is due to the fact that the Check_MK inventory is being limited to vendor specific OIDs in the check's snmp_scan_function. The following code snippet shows the respective lines:

- rmon_stats

    check_info["rmon_stats"] = {
        'check_function'      : check_rmon_stats,
        'inventory_function'  : inventory_rmon_stats,
        'service_description' : 'RMON Stats IF %s',
        'has_perfdata'        : True,
        'snmp_info'           : ('.1.3.6.1.2.1.16.1.1.1', [ #
            '1',    # etherStatsIndex = Item
            '6',    # etherStatsBroadcastPkts
            '7',    # etherStatsMulticastPkts
            '14',   # etherStatsPkts64Octets
            '15',   # etherStatsPkts65to127Octets
            '16',   # etherStatsPkts128to255Octets
            '17',   # etherStatsPkts256to511Octets
            '18',   # etherStatsPkts512to1023Octets
            '19',   # etherStatsPkts1024to1518Octets
        ]),
        # for the scan we need to check for any single object in the RMON tree,
        # we choose netDefaultGateway in the hope that it will always be present
        'snmp_scan_function'  : lambda oid: (
                oid(".1.3.6.1.2.1.1.1.0").lower().startswith("cisco") \
                or oid(".1.3.6.1.2.1.1.2.0") == ".1.3.6.1.4.1.11863.1.1.3" \
            ) and oid(".1.3.6.1.2.1.16.19.12.0") != None,
    }

By replacing the snmp_scan_function lines at the bottom of the code above with the following lines:

- rmon_stats

        # if at least one interface within the RMON tree is present (the previous
        # "netDefaultGateway" is not present in every device implementing the RMON
        # MIB
        'snmp_scan_function'  : lambda oid: oid(".1.3.6.1.2.1.16.1.1.1.1.1") > 0,

the check will now be able to successfully inventorize the RMON representation of interfaces even on non-Cisco network equipment.
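The effect of the change can be tried out without a real device by feeding both scan conditions with a stand-in for the `oid` callable. The following sketch does that. The helper `make_oid`, the two function names and all device answers are made up for illustration. Note also that `modified_scan` is a Python 3 friendly adaptation that only tests for the presence of the OID, whereas the snippet above compares the returned value with `> 0`.

```python
def make_oid(values):
    """Build a stand-in for the `oid` callable handed to a scan function."""
    return lambda o: values.get(o)


def stock_scan(oid):
    # Same condition as in the stock check: Cisco (or one specific
    # sysObjectID) and netDefaultGateway present
    return (oid(".1.3.6.1.2.1.1.1.0").lower().startswith("cisco")
            or oid(".1.3.6.1.2.1.1.2.0") == ".1.3.6.1.4.1.11863.1.1.3"
            ) and oid(".1.3.6.1.2.1.16.19.12.0") is not None


def modified_scan(oid):
    # Adaptation of the replacement: the first etherStatsIndex must exist
    return oid(".1.3.6.1.2.1.16.1.1.1.1.1") is not None


# Made-up SNMP answers of a non-Cisco switch implementing the RMON MIB
other_vendor = make_oid({
    ".1.3.6.1.2.1.1.1.0": "Example non-Cisco switch",
    ".1.3.6.1.2.1.1.2.0": ".1.3.6.1.4.1.99999.1",  # made-up sysObjectID
    ".1.3.6.1.2.1.16.1.1.1.1.1": "1",
})

# Made-up SNMP answers of a Cisco device
cisco = make_oid({
    ".1.3.6.1.2.1.1.1.0": "Cisco IOS Software",
    ".1.3.6.1.2.1.1.2.0": ".1.3.6.1.4.1.9.1.1",    # made-up sysObjectID
    ".1.3.6.1.2.1.16.19.12.0": "192.0.2.1",
    ".1.3.6.1.2.1.16.1.1.1.1.1": "1",
})

print(stock_scan(other_vendor), modified_scan(other_vendor))
print(stock_scan(cisco), modified_scan(cisco))
```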

To actually execute such an inventory, there first needs to be a Check_MK configuration rule in place, which enables the appropriate code in the inventory_rmon_stats function of the check. The following code snippet shows the respective lines:

- rmon_stats

    def inventory_rmon_stats(info):
        settings = host_extra_conf_merged(g_hostname, inventory_if_rules)
        if settings.get("rmon"):
            [...]

I guess this is supposed to be sort of a safeguard, since the number of interfaces can grow quite large in RMON and querying them can put a lot of strain on the service processor of the network device.

The configuration option to enable RMON based checks is neatly tucked away in the WATO WebGUI at:

-> Host & Service Parameters

-> Parameters for discovered services

-> Inventory - automatic service detection

-> Network Interface and Switch Port Discovery

-> Create rule in folder ...

-> Value

[x] Collect RMON statistics data

[x] Create extra service with RMON statistics data (if available for the device)

After creating a global or folder specific configuration rule, the next run of the Check_MK inventory should discover a – possibly large – number of new interfaces and create the individual service checks, one for each new interface. If not, the network device probably has the RMON statistics feature disabled by default or has no such feature at all. Since the procedure to enable the collection and presentation of RMON statistics via SNMP on a network device is different for each vendor, one has to check with the corresponding vendor documentation.

Taking a look at the list of newly discovered interfaces and the resulting service checks reveals two other issues of the rmon_stats check.

One is, the check is rather simple in the way that it indiscriminately collects RMON interface statistics on all interfaces of a network device, regardless of the actual link state of each interface. While the RMON-MIB lacks direct information about the link state of an interface, it fortunately carries a reference to the IF-MIB for each interface. The IF-MIB in turn provides the information about the link state (ifOperStatus), which can be used to determine if RMON statistics should be collected for a particular interface.

The other issue is the check's use of the RMON interface index (etherStatsIndex) as a base for the name of the associated service, e.g. RMON Stats IF <etherStatsIndex> in the following example output:

OK RMON Stats IF 1 OK - bcast=0 mcast=0 0-63b=5 64-127b=124 128-255b=28 256-511b=8 512-1023b=2 1024-1518b=94 octets/sec OK RMON Stats IF 10 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 100 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 101 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 102 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 103 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 104 OK - bcast=0 mcast=0 0-63b=0 64-127b=11 128-255b=3 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 105 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 106 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 107 OK - bcast=0 mcast=1 0-63b=1 64-127b=8 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 108 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 109 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 11 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 110 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 111 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec OK RMON Stats IF 112 OK - bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec [...]

Unfortunately, in RMON the values of the interface index etherStatsIndex are not guaranteed to be consistent across reboots of the network device, much less across the addition of new or the removal of existing interfaces. The interface numbering definition according to the original MIB-II, on the other hand, is much stricter in this regard, although subsequent RFCs like the IF-MIB (see section “3.1.5. Interface Numbering”) have weakened the original definition to some degree.

Another drawback of the pure RMON interface index number as an interface identifier in the service check name is that it is less descriptive than e.g. the interface description ifDescr from the IF-MIB. This makes the visual mapping of the service check to the respective interface of the network device rather tedious and error-prone.

An obvious solution to both issues would be to use the already mentioned reference to the IF-MIB for each interface and – in the process of the Check_MK inventory – check for the interface link state (ifOperStatus). The Check_MK inventory process would return only those interfaces with a value of up(1) in the ifOperStatus variable. For those particular interfaces it would also return the interface description ifDescr to be used as a service name, instead of the previously used RMON interface index.
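The filtering step described above can be sketched as follows. This is an illustration and not the code of the enhanced check: the function name and the data layout are assumptions, and the sample rows are made up. What the sketch relies on is that the reference from the RMON MIB to the IF-MIB (etherStatsDataSource) is an OID which ends in the ifIndex of the interface.

```python
def inventory_up_interfaces(rmon_rows, if_rows):
    """Return the ifDescr of every RMON interface whose link is up.

    rmon_rows: list of (etherStatsIndex, etherStatsDataSource) tuples
    if_rows:   dict {ifIndex: (ifDescr, ifOperStatus)}
    All values are strings, as they would arrive via SNMP.
    """
    descriptions = []
    for _rmon_idx, data_source in rmon_rows:
        # etherStatsDataSource points to ifIndex.<n>; the last sub-identifier
        # of that OID is the ifIndex of the interface
        if_index = data_source.rsplit(".", 1)[-1]
        if_descr, oper_status = if_rows.get(if_index, (None, None))
        if oper_status == "1":  # up(1)
            descriptions.append(if_descr)
    return descriptions


# Made-up sample rows: interface 1 is up(1), interface 2 is down(2)
rmon_rows = [("1", ".1.3.6.1.2.1.2.2.1.1.1"), ("2", ".1.3.6.1.2.1.2.2.1.1.2")]
if_rows = {
    "1": ("Unit: 1 Slot: 0 Port: 1 10G - Level", "1"),
    "2": ("Unit: 1 Slot: 0 Port: 2 10G - Level", "2"),
}
print(inventory_up_interfaces(rmon_rows, if_rows))
```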

As a side effect, this approach unfortunately breaks the necessary mapping from the service name – now based on the IF-MIB interface description (ifDescr) instead of the previously used RMON interface index (etherStatsIndex) – back to the RMON MIB where the interface statistic values are stored. This is mainly due to the fact that the IF-MIB itself does not provide a native reference to the RMON MIB. Without such a back reference, the statistics values collected from the RMON MIB cannot be unambiguously assigned to the appropriate interface during normal service check runs. It is therefore necessary to manually implement such a back reference within the service check. In this case the solution was to store the value of the RMON interface index etherStatsIndex in the parameters section (params) of each interface service check's inventory entry. The following excerpt shows an example of such a new inventory data structure for several RMON interface service checks:

[
[...]
('rmon_stats', 'Link Aggregate 1', {'rmon_if_idx': 89}),
('rmon_stats', 'Link Aggregate 16', {'rmon_if_idx': 104}),
('rmon_stats', 'Link Aggregate 19', {'rmon_if_idx': 107}),
('rmon_stats', 'Link Aggregate 2', {'rmon_if_idx': 90}),
('rmon_stats', 'Link Aggregate 31', {'rmon_if_idx': 119}),
('rmon_stats', 'Link Aggregate 32', {'rmon_if_idx': 120}),
('rmon_stats', 'Link Aggregate 7', {'rmon_if_idx': 95}),
('rmon_stats', 'Link Aggregate 8', {'rmon_if_idx': 96}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 1 10G - Level', {'rmon_if_idx': 1}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 10 10G - Level', {'rmon_if_idx': 10}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 16 10G - Level', {'rmon_if_idx': 16}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 19 10G - Level', {'rmon_if_idx': 19}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 2 10G - Level', {'rmon_if_idx': 2}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 20 10G - Level', {'rmon_if_idx': 20}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 7 10G - Level', {'rmon_if_idx': 7}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 8 10G - Level', {'rmon_if_idx': 8}),
('rmon_stats', 'Unit: 1 Slot: 0 Port: 9 10G - Level', {'rmon_if_idx': 9}),
('rmon_stats', 'Unit: 1 Slot: 1 Port: 3 10G - Level', {'rmon_if_idx': 23}),
('rmon_stats', 'Unit: 1 Slot: 1 Port: 4 10G - Level', {'rmon_if_idx': 24}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 1 10G - Level', {'rmon_if_idx': 25}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 10 10G - Level', {'rmon_if_idx': 34}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 16 10G - Level', {'rmon_if_idx': 40}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 19 10G - Level', {'rmon_if_idx': 43}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 2 10G - Level', {'rmon_if_idx': 26}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 20 10G - Level', {'rmon_if_idx': 44}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 7 10G - Level', {'rmon_if_idx': 31}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 8 10G - Level', {'rmon_if_idx': 32}),
('rmon_stats', 'Unit: 2 Slot: 0 Port: 9 10G - Level', {'rmon_if_idx': 33}),
('rmon_stats', 'Unit: 2 Slot: 1 Port: 3 10G - Level', {'rmon_if_idx': 47}),
('rmon_stats', 'Unit: 2 Slot: 1 Port: 4 10G - Level', {'rmon_if_idx': 48}),
('rmon_stats', 'Unit: 3 Slot: 0 Port: 1 10G - Level', {'rmon_if_idx': 49}),
('rmon_stats', 'Unit: 3 Slot: 0 Port: 2 10G - Level', {'rmon_if_idx': 50}),
('rmon_stats', 'Unit: 3 Slot: 0 Port: 9 10G - Level', {'rmon_if_idx': 57}),
('rmon_stats', 'Unit: 4 Slot: 0 Port: 1 10G - Level', {'rmon_if_idx': 69}),
('rmon_stats', 'Unit: 4 Slot: 0 Port: 2 10G - Level', {'rmon_if_idx': 70}),
('rmon_stats', 'Unit: 4 Slot: 0 Port: 9 10G - Level', {'rmon_if_idx': 77}),
[...]
]
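The inventory step described above can be sketched in isolation. This is only a simplified illustration, not the actual check code: the function name build_inventory, the row layouts and the sample values (including the ifIndex 626) are assumptions made for the example. It assumes that etherStatsDataSource is returned as the OID of the matching ifIndex instance.

```python
# OID prefix of the ifIndex column in the IF-MIB ifTable; etherStatsDataSource
# is assumed to be returned as this prefix followed by the ifIndex value.
IF_INDEX_OID_PREFIX = ".1.3.6.1.2.1.2.2.1.1."
IF_OPER_STATUS_UP = 1  # ifOperStatus value up(1)


def build_inventory(if_table, rmon_table):
    """Return (ifDescr, params) pairs for every RMON entry whose interface is up.

    if_table:   rows of (ifIndex, ifDescr, ifOperStatus) as strings
    rmon_table: rows of (etherStatsIndex, etherStatsDataSource) as strings
    """
    # Index the IF-MIB rows by ifIndex so each RMON row needs a single lookup.
    by_if_index = {int(row[0]): row for row in if_table}

    inventory = []
    for stats_index, data_source in rmon_table:
        if not data_source.startswith(IF_INDEX_OID_PREFIX):
            continue  # data source does not reference an ifIndex instance
        if_index = int(data_source[len(IF_INDEX_OID_PREFIX):])
        iface = by_if_index.get(if_index)
        if iface is None or int(iface[2]) != IF_OPER_STATUS_UP:
            continue  # unknown interface or link not up
        # Store the RMON index as the back reference for later check runs.
        inventory.append((iface[1], {"rmon_if_idx": int(stats_index)}))
    return inventory


# Hypothetical sample rows; real values come from the SNMP walk of the switch.
if_table = [
    ("1", "Unit: 1 Slot: 0 Port: 1 10G - Level", "1"),
    ("2", "Unit: 1 Slot: 0 Port: 2 10G - Level", "2"),  # link down
    ("626", "Link Aggregate 1", "1"),
]
rmon_table = [
    ("1", ".1.3.6.1.2.1.2.2.1.1.1"),
    ("2", ".1.3.6.1.2.1.2.2.1.1.2"),
    ("89", ".1.3.6.1.2.1.2.2.1.1.626"),
]

for service_name, params in build_inventory(if_table, rmon_table):
    print(service_name, params)
```

Using a dictionary keyed by ifIndex avoids a nested loop over both tables, which is a design choice of this sketch only.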

The changes to the rmon_stats check that are necessary to implement the features described above are shown in the following patch:

- rmon_stats.patch

--- rmon_stats.orig 2015-12-21 13:41:46.000000000 +0100
+++ rmon_stats 2016-02-02 11:18:56.789188249 +0100
@@ -34,20 +34,43 @@
     settings = host_extra_conf_merged(g_hostname, inventory_if_rules)
     if settings.get("rmon"):
         inventory = []
-        for line in info:
-            inventory.append((line[0], None))
+        for line in info[1]:
+            rmon_if_idx = int(re.sub('.1.3.6.1.2.1.2.2.1.1.','',line[1]))
+            for iface in info[0]:
+                if int(iface[0]) == rmon_if_idx and int(iface[2]) == 1:
+                    params = {}
+                    params["rmon_if_idx"] = int(line[0])
+                    inventory.append((iface[1], "%r" % params))
         return inventory
 
-def check_rmon_stats(item, _no_params, info):
-    bytes = { 1: 'bcast', 2: 'mcast', 3: '0-63b', 4: '64-127b', 5: '128-255b', 6: '256-511b', 7: '512-1023b', 8: '1024-1518b' }
-    perfdata = []
+def check_rmon_stats(item, params, info):
+    bytes = { 2: 'bcast', 3: 'mcast', 4: '0-63b', 5: '64-127b', 6: '128-255b', 7: '256-511b', 8: '512-1023b', 9: '1024-1518b' }
+    rmon_if_idx = str(params.get("rmon_if_idx"))
+    if_alias = ''
     infotext = ''
+    perfdata = []
     now = time.time()
-    for line in info:
-        if line[0] == item:
+
+    for line in info[0]:
+        ifIndex, ifDescr, ifOperStatus, ifAlias = line
+        if item == ifDescr:
+            if_alias = ifAlias
+            if_index = int(ifIndex)
+
+    for line in info[1]:
+        if line[0] == rmon_if_idx:
+            if_mib_idx = int(re.sub('.1.3.6.1.2.1.2.2.1.1.','',line[1]))
+            if if_mib_idx != if_index:
+                return (3, "RMON interface index mapping to IF interface index mapping changed. Re-run Check_MK inventory.")
+
+            if item != if_alias and if_alias != '':
+                infotext = "[%s, RMON: %s] " % (if_alias, rmon_if_idx)
+            else:
+                infotext = "[RMON: %s] " % rmon_if_idx
+
             for i, val in bytes.items():
                 octets = int(re.sub(' Packets','',line[i]))
-                rate = get_rate("%s-%s" % (item, val), now, octets)
+                rate = get_rate("%s-%s" % (rmon_if_idx, val), now, octets)
                 perfdata.append((val, rate, 0, 0, 0))
                 infotext += "%s=%.0f " % (val, rate)
             infotext += 'octets/sec'
@@ -58,10 +81,18 @@
 check_info["rmon_stats"] = {
     'check_function' : check_rmon_stats,
     'inventory_function' : inventory_rmon_stats,
-    'service_description' : 'RMON Stats IF %s',
+    'service_description' : 'RMON Interface %s',
     'has_perfdata' : True,
-    'snmp_info' : ('.1.3.6.1.2.1.16.1.1.1', [ #
+    'snmp_info' : [
+        ( '.1.3.6.1.2.1', [ #
+            '2.2.1.1', # ifNumber
+            '2.2.1.2', # ifDescr
+            '2.2.1.8', # ifOperStatus
+            '31.1.1.1.18', # ifAlias
+        ]),
+        ('.1.3.6.1.2.1.16.1.1.1', [ #
             '1', # etherStatsIndex = Item
+            '2', # etherStatsDataSource = Interface in the RFC1213-MIB
             '6', # etherStatsBroadcastPkts
             '7', # etherStatsMulticastPkts
             '14', # etherStatsPkts64Octets
@@ -70,10 +101,11 @@
             '17', # etherStatsPkts256to511Octets
             '18', # etherStatsPkts512to1023Octets
             '19', # etherStatsPkts1024to1518Octets
-    ]),
-    # for the scan we need to check for any single object in the RMON tree,
-    # we choose netDefaultGateway in the hope that it will always be present
-    'snmp_scan_function' : lambda oid: ( oid(".1.3.6.1.2.1.1.1.0").lower().startswith("cisco") \
-        or oid(".1.3.6.1.2.1.1.2.0") == ".1.3.6.1.4.1.11863.1.1.3" \
-        ) and oid(".1.3.6.1.2.1.16.19.12.0") != None,
+        ]),
+    ],
+    # if at least one interface within the RMON tree is present (the previous
+    # "netDefaultGateway" is not present in every device implementing the RMON
+    # MIB
+    'snmp_scan_function' : lambda oid: oid(".1.3.6.1.2.1.16.1.1.1.1.1") > 0,
+
 }

The following output shows examples of the new service check names. The ifAlias and the etherStatsIndex have been added as auxiliary information to the service check output. The ifAlias entries in the examples have – in order to protect the innocent – been redacted with xxxxxxxx though.

OK RMON Interface Link Aggregate 1 OK - [xxxxxxxx, RMON: 89] bcast=0 mcast=0 0-63b=85 64-127b=390 128-255b=136 256-511b=25 512-1023b=20 1024-1518b=407 octets/sec
OK RMON Interface Link Aggregate 16 OK - [xxxxxxxx, RMON: 104] bcast=0 mcast=0 0-63b=1 64-127b=11 128-255b=3 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
UNKN RMON Interface Link Aggregate 19 UNKNOWN - RMON interface index mapping to IF interface index mapping changed. Re-run Check_MK inventory.
OK RMON Interface Link Aggregate 2 OK - [xxxxxxxx, RMON: 90] bcast=0 mcast=0 0-63b=111 64-127b=480 128-255b=207 256-511b=44 512-1023b=41 1024-1518b=484 octets/sec
[...]
OK RMON Interface Unit: 1 Slot: 0 Port: 1 10G - Level OK - [xxxxxxxx, RMON: 1] bcast=0 mcast=0 0-63b=49 64-127b=232 128-255b=57 256-511b=14 512-1023b=4 1024-1518b=185 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 10 10G - Level OK - [xxxxxxxx, RMON: 10] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 16 10G - Level OK - [xxxxxxxx, RMON: 16] bcast=0 mcast=0 0-63b=0 64-127b=2 128-255b=1 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 19 10G - Level OK - [xxxxxxxx, RMON: 19] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 2 10G - Level OK - [xxxxxxxx, RMON: 2] bcast=0 mcast=0 0-63b=62 64-127b=293 128-255b=89 256-511b=28 512-1023b=9 1024-1518b=252 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 20 10G - Level OK - [xxxxxxxx, RMON: 20] bcast=0 mcast=0 0-63b=75 64-127b=1640 128-255b=217 256-511b=41 512-1023b=79 1024-1518b=2096 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 7 10G - Level OK - [xxxxxxxx, RMON: 7] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 8 10G - Level OK - [xxxxxxxx, RMON: 8] bcast=0 mcast=0 0-63b=0 64-127b=5 128-255b=1 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 1 Slot: 0 Port: 9 10G - Level OK - [xxxxxxxx, RMON: 9] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 1 Slot: 1 Port: 3 10G - Level OK - [xxxxxxxx, RMON: 23] bcast=0 mcast=0 0-63b=0 64-127b=3 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 1 Slot: 1 Port: 4 10G - Level OK - [xxxxxxxx, RMON: 24] bcast=0 mcast=0 0-63b=0 64-127b=3 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 2 Slot: 0 Port: 1 10G - Level OK - [xxxxxxxx, RMON: 25] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 2 Slot: 0 Port: 10 10G - Level OK - [xxxxxxxx, RMON: 34] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 2 Slot: 0 Port: 16 10G - Level OK - [xxxxxxxx, RMON: 40] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
OK RMON Interface Unit: 2 Slot: 0 Port: 19 10G - Level OK - [xxxxxxxx, RMON: 43] bcast=0 mcast=1 0-63b=1 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
[...]
OK RMON Interface Unit: 4 Slot: 0 Port: 9 10G - Level OK - [xxxxxxxx, RMON: 77] bcast=0 mcast=0 0-63b=0 64-127b=0 128-255b=0 256-511b=0 512-1023b=0 1024-1518b=0 octets/sec
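The UNKNOWN state shown for one of the interfaces above comes from the consistency test between the stored RMON index and the current IF-MIB index. A minimal standalone sketch of that test follows, under the same assumption that etherStatsDataSource is returned as the OID of the ifIndex instance. The function name mapping_is_current and the sample rows are hypothetical. Unlike the patched check, this sketch also reports a stale mapping when the interface or the RMON row is missing entirely.

```python
# OID prefix of the ifIndex column in the IF-MIB ifTable.
IF_INDEX_OID_PREFIX = ".1.3.6.1.2.1.2.2.1.1."


def mapping_is_current(item, params, if_table, rmon_table):
    """Return True if the stored etherStatsIndex still refers to interface `item`.

    item:       service item, i.e. the ifDescr recorded at inventory time
    params:     inventory parameters holding the key "rmon_if_idx"
    if_table:   rows starting with (ifIndex, ifDescr, ...) as strings
    rmon_table: rows of (etherStatsIndex, etherStatsDataSource) as strings
    """
    # Look up the current ifIndex of the interface named by the service item.
    if_index = next((int(row[0]) for row in if_table if row[1] == item), None)
    if if_index is None:
        return False

    wanted = str(params["rmon_if_idx"])
    for stats_index, data_source in rmon_table:
        if stats_index == wanted:
            # The RMON row must still point at the same IF-MIB interface.
            return data_source == IF_INDEX_OID_PREFIX + str(if_index)
    return False  # the RMON row no longer exists


# Hypothetical sample rows; real values come from the SNMP walk of the switch.
if_table = [("626", "Link Aggregate 1", "1", "uplink")]
rmon_table = [("89", ".1.3.6.1.2.1.2.2.1.1.626")]
params = {"rmon_if_idx": 89}
print(mapping_is_current("Link Aggregate 1", params, if_table, rmon_table))

# After a renumbering, RMON row 89 points at a different ifIndex.
renumbered = [("89", ".1.3.6.1.2.1.2.2.1.1.627")]
print(mapping_is_current("Link Aggregate 1", params, if_table, renumbered))
```

A result of False would correspond to the situation in which the check asks for the Check_MK inventory to be re-run.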

The enhanced version of the rmon_stats check can be downloaded here along with a slightly beautified version of the accompanying PNP4Nagios template:

Enhanced version of the rmon_stats check

Patch to enhance the original version of the rmon_stats check

Slightly beautified version of the rmon_stats PNP4Nagios template

Patch to beautify the rmon_stats PNP4Nagios template

Finally, the following two screenshots show examples of PNP4Nagios graphs using the modified version of the PNP4Nagios template:

The sources to both, the enhanced version of the rmon_stats check and the beautified version of the rmon_stats PNP4Nagios template, can be found on GitHub in my Check_MK Plugins repository.

2015-10-01 // Integration of Dell PowerConnect M-Series Switches with RANCID

An – almost – out of the box integration of Dell PowerConnect M-Series switches (specifically M6348 and M8024-k) with version 3.2 of the popular, open source switch and router configuration management tool RANCID.

RANCID has, in the previous version 2.3, already been able to integrate Dell PowerConnect switches through the use of the custom dlogin and drancid script addons. There are already several howtos on the net on how to use those addons and also slightly tweaked versions of them here and here.

With version 3.2 of RANCID, either built from source or installed pre-packaged e.g. from Debian testing (stretch), everything has gotten much more straightforward. The only modification needed now is a small patch to the script /usr/lib/rancid/bin/srancid, adapting its output postprocessing part to those particular switch models. This modification is necessary in order to remove constantly changing output – in this case uptime and temperature information – from the commands show version and show system, which are issued by srancid for those particular switch models. For the purpose of clarification, here are output samples from the show version:

switch1# show version
System Description................ Dell Ethernet Switch
System Up Time.................... 90 days, 04h:48m:41s
System Contact.................... <contact email>
System Name....................... <system name>
System Location................... <location>
Burned In MAC Address............. F8B1.566E.4AFB
System Object ID.................. 1.3.6.1.4.1.674.10895.3041
System Model ID................... PCM8024-k
Machine Type...................... PowerConnect M8024-k

unit image1      image2      current-active next-active
---- ----------- ----------- -------------- --------------
1    5.1.3.7     5.1.8.2     image2         image2
2    5.1.3.7     5.1.8.2     image2         image2

and show system:

switch1# show system
System Description: Dell Ethernet Switch
System Up Time: 90 days, 04h:48m:19s
System Contact: <contact email>
System Name: <system name>
System Location: <location>
Burned In MAC Address: F8B1.566E.4AFB
System Object ID: 1.3.6.1.4.1.674.10895.3041
System Model ID: PCM8024-k
Machine Type: PowerConnect M8024-k

Temperature Sensors:
Unit Description Temperature Status
                 (Celsius)
---- ----------- ----------- ------
1    System      39          Good
2    System      39          Good

Power Supplies:
Unit Description Status
---- ----------- -----------
NA   NA          NA
NA   NA          NA

commands issued on a stacked pair of Dell PowerConnect M8024-k switches. Without the patch to srancid, the lines starting with System Up Time as well as the lines for each temperature sensor unit would always trigger a configuration change in RANCID, even if there was no real change in configuration. The provided patch adds handling and the subsequent removal of those ever-changing values from the configuration which is stored in the RCS used by RANCID.
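To illustrate the kind of postprocessing involved, here is a minimal sketch of such a filter. It is not the actual patch: srancid itself is a Perl script, and the function name strip_volatile, the regular expressions and the choice to drop whole sensor rows are assumptions made purely for this example.

```python
import re

# Lines that change on every run and therefore must not reach the repository.
UPTIME_RE = re.compile(r"^System Up Time")
# A temperature sensor row: unit number, description, temperature, status.
TEMP_ROW_RE = re.compile(r"^\s*\d+\s+\S+\s+\d+\s+\S+\s*$")


def strip_volatile(lines):
    """Drop uptime lines and temperature sensor rows from command output."""
    kept = []
    in_temperature = False
    for line in lines:
        if UPTIME_RE.match(line):
            continue
        # Track whether we are inside the "Temperature Sensors:" table.
        if line.startswith("Temperature Sensors:"):
            in_temperature = True
        elif line.startswith("Power Supplies:"):
            in_temperature = False
        if in_temperature and TEMP_ROW_RE.match(line):
            continue
        kept.append(line)
    return kept


# Abbreviated sample based on the show system output above.
sample = [
    "System Description: Dell Ethernet Switch",
    "System Up Time: 90 days, 04h:48m:19s",
    "Temperature Sensors:",
    "Unit Description Temperature Status",
    "---- ----------- ----------- ------",
    "1 System 39 Good",
    "2 System 39 Good",
    "Power Supplies:",
    "NA NA NA",
]

for line in strip_volatile(sample):
    print(line)
```

Restricting the row filter to the temperature section keeps the image version table of show version untouched, since its rows also start with a unit number.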

Note that RANCID also provides a device type dell (see man router.db). This device type is intended to be used with D-Link switches OEMed by Dell and will not work with Dell PowerConnect switch models. The correct device type for Dell PowerConnect switch models is smc.

For the sake of completeness, here is a full step-by-step configuration example for Dell PowerConnect M6348 and M8024-k switches:

Add the Debian