2013-07-30 // Ganglia Fibre Channel Power/Attenuation Monitoring on AIX and VIO Servers

Although usually only available on request from IBM support, efc_power is a handy tool for debugging or narrowing down fibre channel link issues. It reports the transmit and receive power and attenuation values for a given FC port on an AIX or VIO server. Fortunately the output of efc_power:

    $ /opt/freeware/bin/efc_power /dev/fscsi2
    TX: 1232 -> 0.4658 mW, -3.32 dBm
    RX: 10a9 -> 0.4265 mW, -3.70 dBm

is very parser-friendly, so a script can easily read it for further processing. In this case further processing means continuous Ganglia monitoring of the fibre channel transmit and receive power and attenuation values for each FC port on an AIX or VIO server. This is accomplished by the two RPM packages ganglia-addons-aix and ganglia-addons-aix-scripts:

The package ganglia-addons-aix-scripts is to be installed on the AIX or VIO server which has the FC adapter installed. It depends on the aaa_base package for the efc_power binary and on the ganglia-addons-base package, specifically on the cronjob (/opt/freeware/etc/run_parts/conf.d/ganglia-addons.sh) defined by that package. In the context of this cronjob all available scripts in the directory /opt/freeware/libexec/ganglia-addons/ are executed. This specific Ganglia addon iterates over all fscsi devices in the system and calls efc_power for each of them. Devices can be excluded by assigning a regex pattern to the BLACKLIST variable in the configuration file /opt/freeware/etc/ganglia-addons/ganglia-addons-efc_power.cfg. The output of each efc_power call is parsed and fed via the gmetric command into a Ganglia monitoring system, which has to be set up already.
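As a rough illustration of the parsing step, the following shell sketch turns efc_power output into metric lines and hands them to gmetric. It is not the script shipped in ganglia-addons-aix-scripts: the function names and the metric naming scheme (`fscsi2_tx_level` and so on) are made up for this example, and it assumes that efc_power prints the TX and RX values on separate lines.

```shell
# Hypothetical sketch; not the script from ganglia-addons-aix-scripts.

# Read efc_power output on stdin and print "<metric> <value> <unit>" lines.
# $1 is the device name used as metric prefix, e.g. fscsi2.
parse_efc_power() {
    awk -v dev="$1" '
        /^(TX|RX):/ {
            dir = tolower(substr($1, 1, 2))        # "tx" or "rx"
            print dev "_" dir "_power", $4, "mW"   # 4th field: value in mW
            print dev "_" dir "_level", $6, "dBm"  # 6th field: value in dBm
        }'
}

# Succeeds if device $1 matches the regex in BLACKLIST (empty = no exclusions).
is_blacklisted() {
    [ -n "${BLACKLIST:-}" ] && printf '%s\n' "$1" | grep -Eq -- "$BLACKLIST"
}

# Feed the values of all non-blacklisted fscsi devices to gmetric.
# Meant to be called from cron on the AIX or VIO server.
report_all() {
    for path in /dev/fscsi*; do
        [ -e "$path" ] || continue
        dev=${path#/dev/}
        is_blacklisted "$dev" && continue
        /opt/freeware/bin/efc_power "$path" | parse_efc_power "$dev" |
        while read -r name value unit; do
            gmetric --name="$name" --value="$value" --type=float --units="$unit"
        done
    done
}
```

Keeping the parser separate from the device loop makes it possible to test the parsing against captured efc_power output without touching real hardware.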

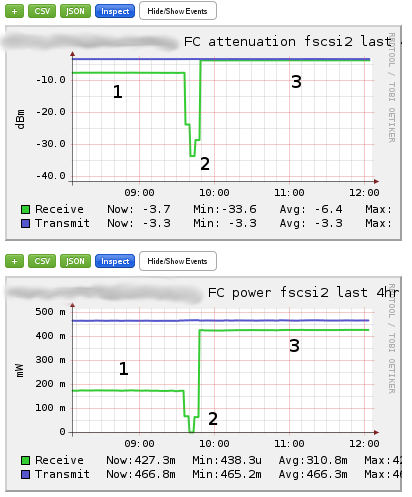

The package ganglia-addons-aix is to be installed on the host running the Ganglia web interface. It contains templates for the customization of the FC power and attenuation metrics within the Ganglia Web 2 interface. See the README.templates file for further installation instructions. Here are samples of the two graphs created with those Ganglia monitoring templates:

In section “1” of the graphs, the receive attenuation on FC port fscsi2 was about -7.7 dBm, which means that of the 476.6 uW sent from the Brocade switchport:

    $ sfpshow 1/10
    Identifier:  3    SFP
    Connector:   7    LC
    Transceiver: 540c404000000000 200,400,800_MB/s M5,M6 sw Short_dist
    Encoding:    1    8B10B
    Baud Rate:   85   (units 100 megabaud)
    Length 9u:   0    (units km)
    Length 9u:   0    (units 100 meters)
    Length 50u:  5    (units 10 meters)
    Length 62.5u:2    (units 10 meters)
    Length Cu:   0    (units 1 meter)
    Vendor Name: BROCADE
    Vendor OUI:  00:05:1e
    Vendor PN:   57-1000012-01
    Vendor Rev:  A
    Wavelength:  850  (units nm)
    Options:     003a Loss_of_Sig,Tx_Fault,Tx_Disable
    BR Max:      0
    BR Min:      0
    Serial No:   UAF1112600001JW
    Date Code:   110619
    DD Type:     0x68
    Enh Options: 0xfa
    Status/Ctrl: 0x82
    Alarm flags[0,1] = 0x5, 0x40
    Warn Flags[0,1] = 0x5, 0x40
                                            Alarm                  Warn
                                        low        high        low        high
    Temperature: 41     Centigrade      -10        90          -5         85
    Current:     7.392  mAmps           1.000      17.000      2.000      14.000
    Voltage:     3264.9 mVolts          2900.0     3700.0      3000.0     3600.0
    RX Power:    -4.0   dBm (400.1 uW)  10.0 uW    1258.9 uW   15.8 uW    1000.0 uW
    TX Power:    -3.2   dBm (476.6 uW)  125.9 uW   631.0 uW    158.5 uW   562.3 uW

only about 200 uW actually made it to the FC port fscsi2 on the VIO server. Section “2” shows even worse values during the time the FC connections and cables were checked, which basically means that the FC link was down during that time period. Section “3” shows the values after the bad cable was found and replaced. Receive attenuation on FC port fscsi2 went down to about -3.7 dBm, which means that of the now 473.6 uW sent from the Brocade switchport, 427.3 uW actually make it to the FC port fscsi2 on the VIO server.
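The microwatt figures follow from the dBm readings, since dBm is a logarithmic power level relative to 1 mW, i.e. P[mW] = 10^(dBm/10). A minimal shell sketch of that arithmetic, with function names made up for this example:

```shell
# Convert a power level in dBm to microwatts: P[uW] = 1000 * 10^(dBm/10).
dbm_to_uw() {
    awk -v dbm="$1" 'BEGIN { printf "%.1f\n", 1000 * exp(dbm / 10 * log(10)) }'
}

# Loss over a link in dB: transmit level minus receive level (both in dBm).
link_loss_db() {
    awk -v tx="$1" -v rx="$2" 'BEGIN { printf "%.1f\n", tx - rx }'
}

dbm_to_uw -7.7          # section "1": prints 169.8
dbm_to_uw -3.7          # section "3": prints 426.6
link_loss_db -3.2 -7.7  # switch TX vs. host RX in section "1": prints 4.5
```

So the bad cable cost roughly 4.5 dB between switchport and host, while the replaced cable loses only about half a dB. The small differences from the figures in the text come from rounding the dBm values to one decimal place.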

The goal of continuously monitoring the fibre channel transmit and receive power and attenuation values is to catch slowly deteriorating links early on, before they become a real issue or even a service interruption. As shown above, this can be accomplished with Ganglia and the two RPM packages ganglia-addons-aix and ganglia-addons-aix-scripts. For ad hoc checks, e.g. during the debugging of the components in a suspicious FC link, efc_power is still best called directly from the AIX or VIO server command line.

2013-06-15 // Ganglia Performance Monitoring on IBM Power with AIX and LPM

Ganglia is a great open source performance monitoring tool. Like many others (e.g. Cacti, Munin, etc.) it uses RRDtool as its consolidated data storage. Unlike others, the main focus of Ganglia is on efficiently monitoring large scale distributed environments. Ganglia can very easily be used for performance monitoring in IBM Power environments running AIX, Linux/PPC and VIO servers. This is thanks to the great effort of Michael Perzl, who maintains pre-built Ganglia RPM packages for AIX which also cover some of the AIX specific metrics. Ganglia can also easily be used for application specific performance monitoring. Since this is a very extensive subject, it'll be discussed in upcoming articles.

Since Ganglia is usually used in environments where there is a “grid” of one or more “clusters” containing a larger number of individual “hosts” to be monitored, it applies the same semantics to the way it builds its hierarchical views. Unfortunately there is no exact equivalent to those classification terms in the IBM Power world. I found the following mapping of terms to be the most useful:

| Ganglia Terminology | IBM Power Terminology | Comment |
|---|---|---|
| Grid | Grid | Container entity of 1+ clusters or managed systems |
| Cluster | Managed System | Container entity of 1+ hosts or LPARs |
| Host | Host, LPAR | Individual host running an OS |

On the one hand this mapping makes sense, since you are usually interested either in the performance metrics of an individual host or in the relation between individual host metrics within the context of the managed system that is running those hosts, e.g. how much CPU time an individual host is using versus how much CPU time all hosts on a managed system are using, and in what distribution.

On the other hand this mapping turns out to be a bit problematic, since Ganglia expects a rather static assignment of hosts to clusters. In a traditional HPC environment a host is seldom moved from one cluster to another, making the necessary configuration work a manageable amount of administrative overhead. In an IBM Power environment with the above mapping of terms applied, an LPAR could easily be moved between different clusters, even more so since the introduction of LPM. To reduce the administrative overhead, the necessary configuration changes should be done automatically. Historical performance data of a host should be preserved, even when moving between different clusters.

Also, by default Ganglia uses IP multicast for the necessary communication between the hosts and the Ganglia server. While this may be a viable method for large cluster setups in flat, unsegmented networks, it does not do so well in heavily segmented or firewalled network environments. Ganglia can be configured to instead use IP unicast for the communication between the hosts and the Ganglia server, but this also has some effect on the cluster to host mapping described above.

The Ganglia setup described below will meet the following design criteria:

- Use of IP unicast communication with predefined UDP ports.
- A “firewall-friendly” behaviour with regard to the Ganglia network communication.
- Automatic reconfiguration of Ganglia in case an LPAR is moved from one managed system to another.
- Preservation of the historical performance data in case an LPAR is moved from one managed system to another.

Prerequisites

For a Ganglia setup to fulfill the above design goals, some prerequisites have to be met:

1. A working basic Ganglia server, with the following Ganglia RPM packages installed:

       ganglia-gmetad-3.4.0-1
       ganglia-gmond-3.4.0-1
       ganglia-gweb-3.5.2-1
       ganglia-lib-3.4.0-1

   If the OS of the Ganglia server is also to be monitored as a Ganglia client, install the Ganglia modules you see fit. In my case e.g.:

       ganglia-mod_ibmame-3.4.0-1
       ganglia-p6-mod_ibmpower-3.4.0-1

2. A working Ganglia client setup on the hosts to be monitored, with the following minimal Ganglia RPM packages installed:

       ganglia-gmond-3.4.0-1
       ganglia-lib-3.4.0-1

   In addition to the minimal Ganglia RPM packages, you can install any number of additional Ganglia modules you might need. In my case e.g. for all the fully virtualized LPARs:

       ganglia-mod_ibmame-3.4.0-1
       ganglia-p6-mod_ibmpower-3.4.0-1

   and for all the VIO servers and LPARs that have network and/or fibre channel hardware resources assigned:

       ganglia-gmond-3.4.0-1
       ganglia-lib-3.4.0-1
       ganglia-mod_ibmfc-3.4.0-1
       ganglia-mod_ibmnet-3.4.0-1
       ganglia-p6-mod_ibmpower-3.4.0-1

3. A convention of which UDP ports will be used for the communication between the Ganglia clients and the Ganglia server. Each managed system in your IBM Power environment will get its own `gmond` process on the Ganglia server. For the Ganglia clients on a single managed system to be able to communicate with the Ganglia server, an individual and unique UDP port will be used.

4. Model name, serial number and name of all managed systems in your IBM Power environment.

5. If necessary, a set of firewall rules that allow communication between the Ganglia clients and the Ganglia server along the lines of the previously defined UDP port convention.

With the information from the above items 3 and 4, create a three-way mapping table like this:

| Managed System Name | Model Name_Serial Number | Ganglia Unicast UDP Port |
|---|---|---|
| P550-DC1-R01 | 8204-E8A_0000001 | 8301 |
| P770-DC1-R05 | 9117-MMB_0000002 | 8302 |
| P770-DC2-R35 | 9117-MMB_0004711 | 8303 |

The information in this mapping table will be used in the following examples, so here's a slightly more detailed explanation:

- Three columns: “Managed System Name”, “Model Name_Serial Number” and “Ganglia Unicast UDP Port”.
- Each row contains the information for one IBM Power managed system.
- The field “Managed System Name” contains the name of the managed system, which is later on displayed in Ganglia. For ease of administration it should ideally be consistent with the name used in the HMC. In this example the naming convention is “Systemtype-Datacenter-Racknumber”.
- The field “Model Name_Serial Number” contains the model and the S/N of the managed system, concatenated with “_” into a single string containing no whitespace. Model and S/N are substrings of the values that the command:

      $ lsattr -El sys0 -a systemid -a modelname
      systemid  IBM,020000001 Hardware system identifier False
      modelname IBM,8204-E8A  Machine name               False

  reports.
- The field “Ganglia Unicast UDP Port” contains the UDP port which was assigned to a specific IBM Power managed system by the convention mentioned above. In this example the UDP ports were simply allocated in an incremental, sequential order, starting at port 8301. It can be any UDP port that is available in your environment. You just have to be consistent about its use and be careful to have no duplicate assignments.
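To make the “Model Name_Serial Number” field and the “no duplicate assignments” rule a bit more tangible, here is a small shell sketch. It rests on an assumption read off the example values above: that the model is the `modelname` value without the `IBM,` prefix and that the S/N is the last seven characters of `systemid`. The function names and the plain-text form of the mapping table are made up for this example.

```shell
# Build the "Model Name_Serial Number" key from the output of
#   lsattr -El sys0 -a systemid -a modelname
# read on stdin. Assumption: model = modelname without "IBM,",
# serial = last seven characters of systemid.
msys_key() {
    awk '
        $1 == "modelname" { model = $2; sub(/^IBM,/, "", model) }
        $1 == "systemid"  { serial = substr($2, length($2) - 6) }
        END { if (model != "" && serial != "") print model "_" serial }'
}

# Print every UDP port that is assigned more than once in a mapping table
# given as "<managed system> <model_serial> <port>" lines on stdin.
duplicate_ports() {
    awk 'NF >= 3 && seen[$3]++ == 1 { print $3 }'
}

# Example on an LPAR:
#   lsattr -El sys0 -a systemid -a modelname | msys_key
```

An empty result from `duplicate_ports` means every managed system has its own port.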

Configuration

Create a filesystem and a directory hierarchy for the Ganglia RRD database files to be stored in. In this example the already existing `/ganglia` filesystem is used to create the following directories:

    /ganglia
    /ganglia/rrds
    /ganglia/rrds/LPARS
    /ganglia/rrds/P550-DC1-R01
    /ganglia/rrds/P770-DC1-R05
    /ganglia/rrds/P770-DC2-R35
    ...
    /ganglia/rrds/<Managed System Name>

Make sure all the directories are owned by the user under which the `gmond` processes will be running. In my case this is the user `nobody`.

Later on, the directory `/ganglia/rrds/LPARS` will contain subdirectories for each LPAR, which in turn contain the RRD files storing the performance data for each LPAR. The directories `/ganglia/rrds/<Managed System Name>` will only contain a symbolic link for each LPAR, pointing to the actual LPAR directory within `/ganglia/rrds/LPARS`, e.g.:

    $ ls -ald /ganglia/rrds/*/saixtest.mydomain
    drwxr-xr-x 2 nobody [...] /ganglia/rrds/LPARS/saixtest.mydomain
    lrwxrwxrwx 1 nobody [...] /ganglia/rrds/P550-DC1-R01/saixtest.mydomain -> ../LPARS/saixtest.mydomain
    lrwxrwxrwx 1 nobody [...] /ganglia/rrds/P770-DC1-R05/saixtest.mydomain -> ../LPARS/saixtest.mydomain
    lrwxrwxrwx 1 nobody [...] /ganglia/rrds/P770-DC2-R35/saixtest.mydomain -> ../LPARS/saixtest.mydomain

In addition (and this is the actual reason for the whole symbolic link orgy) Ganglia will automatically create a subdirectory `/ganglia/rrds/<Managed System Name>/__SummaryInfo__`, which contains the RRD files used to generate the aggregated summary performance metrics of each managed system. If the above setup were simplified by placing the symbolic links one level higher in the directory hierarchy, each managed system would use the same `__SummaryInfo__` subdirectory, rendering the RRD files in it useless for summary purposes.

This directory and symbolic link setup might seem strange or even unnecessary at first, but it plays a major role in getting Ganglia to play along with LPM. So it's important to set this up accordingly.
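Creating the initial directory skeleton described above could be scripted along these lines. This is only a sketch with a made-up function name. It creates the `LPARS` directory plus one directory per managed system and leaves ownership to the caller.

```shell
# Create the RRD directory layout below $1 for the managed systems given as
# the remaining arguments. Hypothetical helper; does not change ownership.
make_rrd_tree() {
    root=$1
    shift
    mkdir -p "$root/rrds/LPARS" || return 1
    for msys in "$@"; do
        mkdir -p "$root/rrds/$msys" || return 1
    done
}

# Example (as root on the Ganglia server):
#   make_rrd_tree /ganglia P550-DC1-R01 P770-DC1-R05 P770-DC2-R35
#   chown -R nobody /ganglia/rrds
```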

Set up a `gmond` server process for each managed system in your IBM Power environment. In this example we need three processes for the three managed systems mentioned above. On the Ganglia server, create a `gmond` configuration file for each managed system that can send performance data to the Ganglia server. Note that only the essential parts of the `gmond` configuration are shown in the following code snippets:

- /etc/ganglia/gmond-p550-dc1-r01.conf

      globals {
        ...
        mute = yes
        ...
      }
      cluster {
        name = "P550-DC1-R01"
        ...
      }
      udp_recv_channel {
        port = 8301
      }
      tcp_accept_channel {
        port = 8301
      }

- /etc/ganglia/gmond-p770-dc1-r05.conf

      globals {
        ...
        mute = yes
        ...
      }
      cluster {
        name = "P770-DC1-R05"
        ...
      }
      udp_recv_channel {
        port = 8302
      }
      tcp_accept_channel {
        port = 8302
      }

- /etc/ganglia/gmond-p770-dc2-r35.conf

      globals {
        ...
        mute = yes
        ...
      }
      cluster {
        name = "P770-DC2-R35"
        ...
      }
      udp_recv_channel {
        port = 8303
      }
      tcp_accept_channel {
        port = 8303
      }

Copy the stock `gmond` init script for each `gmond` server process:

    $ for MSYS in p550-dc1-r01 p770-dc1-r05 p770-dc2-r35; do
        cp -pi /etc/rc.d/init.d/gmond /etc/rc.d/init.d/gmond-${MSYS}
      done

and edit the following lines:

- /etc/rc.d/init.d/gmond-p550-dc1-r01

      ...
      PIDFILE=/var/run/gmond-p550-dc1-r01.pid
      ...
      GMOND_CONFIG=/etc/ganglia/gmond-p550-dc1-r01.conf
      ...

- /etc/rc.d/init.d/gmond-p770-dc1-r05

      ...
      PIDFILE=/var/run/gmond-p770-dc1-r05.pid
      ...
      GMOND_CONFIG=/etc/ganglia/gmond-p770-dc1-r05.conf
      ...

- /etc/rc.d/init.d/gmond-p770-dc2-r35

      ...
      PIDFILE=/var/run/gmond-p770-dc2-r35.pid
      ...
      GMOND_CONFIG=/etc/ganglia/gmond-p770-dc2-r35.conf
      ...

and start the `gmond` server processes. Check for running processes and for the TCP and UDP ports being listened on:

    $ for MSYS in p550-dc1-r01 p770-dc1-r05 p770-dc2-r35; do
        /etc/rc.d/init.d/gmond-${MSYS} start
      done
    $ ps -ef | grep gmond-
    nobody 4260072 [...] /opt/freeware/sbin/gmond -c /etc/ganglia/gmond-p770-dc2-r35.conf -p /var/run/gmond-p770-dc2-r35.pid
    nobody 6094872 [...] /opt/freeware/sbin/gmond -c /etc/ganglia/gmond-p770-dc1-r05.conf -p /var/run/gmond-p770-dc1-r05.pid
    nobody 6291514 [...] /opt/freeware/sbin/gmond -c /etc/ganglia/gmond-p550-dc1-r01.conf -p /var/run/gmond-p550-dc1-r01.pid
    $ netstat -na | grep 830
    tcp4 0 0 *.8301 *.* LISTEN
    tcp4 0 0 *.8302 *.* LISTEN
    tcp4 0 0 *.8303 *.* LISTEN
    udp4 0 0 *.8301 *.*
    udp4 0 0 *.8302 *.*
    udp4 0 0 *.8303 *.*
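With more than a handful of managed systems, checking the netstat output by eye gets tedious. The following sketch prints every expected UDP port that has no listener. It assumes the AIX `netstat -na` column layout shown above, with the local address in the fourth column in the form `*.<port>`. The function name is made up for this example.

```shell
# Read `netstat -na` output on stdin and print each port from the argument
# list for which no UDP socket is bound. Assumes the local address is the
# fourth column and ends in ".<port>".
missing_udp_ports() {
    listing=$(cat)
    for port in "$@"; do
        printf '%s\n' "$listing" |
        awk -v p="$port" '
            $1 ~ /^udp/ && $4 ~ ("\\." p "$") { found = 1 }
            END { exit !found }' || echo "$port"
    done
}

# Example on the Ganglia server:
#   netstat -na | missing_udp_ports 8301 8302 8303
```

No output means all `gmond` server processes are bound to their UDP port.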

If you want the `gmond` server processes to be started automatically on startup of the Ganglia server system, create the appropriate “S” and “K” symbolic links in `/etc/rc.d/rc2.d/`. E.g.:

    $ for MSYS in p550-dc1-r01 p770-dc1-r05 p770-dc2-r35; do
        ln -s /etc/rc.d/init.d/gmond-${MSYS} /etc/rc.d/rc2.d/Sgmond-${MSYS}
        ln -s /etc/rc.d/init.d/gmond-${MSYS} /etc/rc.d/rc2.d/Kgmond-${MSYS}
      done

Set up the `gmetad` server process to query each `gmond` server process. On the Ganglia server, edit the `gmetad` configuration file:

- /etc/ganglia/gmetad.conf

      ...
      data_source "P550-DC1-R01" 60 localhost:8301
      data_source "P770-DC1-R05" 60 localhost:8302
      data_source "P770-DC2-R35" 60 localhost:8303
      ...
      rrd_rootdir "/ganglia/rrds"
      ...

and restart the `gmetad` process.

Create a DNS or `/etc/hosts` entry named `ganglia` which points to the IP address of your Ganglia server. Make sure the DNS or hostname is resolvable on all the Ganglia client LPARs in your environment.

Install the aaa_base and `ganglia-addons-base` RPM packages on all the Ganglia client LPARs in your environment.

The `ganglia-addons-base` package installs several extensions to the stock `ganglia-gmond` package, which are:

- a modified `gmond` client init script `/opt/freeware/libexec/ganglia-addons-base/gmond`.
- a configuration file `/etc/ganglia/gmond.init.conf` for the modified `gmond` client init script.
- a configuration template file `/etc/ganglia/gmond.tpl`.
- a run_parts script `800_ganglia_check_links.sh` for the Ganglia server to perform a sanity check on the symbolic links in the `/ganglia/rrds/<managed system>/` directories.

Edit or create `/etc/ganglia/gmond.init.conf` to reflect your environment and roll it out to all the Ganglia client LPARs. E.g. with the example data from above:

- /etc/ganglia/gmond.init.conf

      # MSYS_ARRAY:
      MSYS_ARRAY["8204-E8A_0000001"]="P550-DC1-R01"
      MSYS_ARRAY["9117-MMB_0000002"]="P770-DC1-R05"
      MSYS_ARRAY["9117-MMB_0004711"]="P770-DC2-R35"
      #
      # GPORT_ARRAY:
      GPORT_ARRAY["P550-DC1-R01"]="8301"
      GPORT_ARRAY["P770-DC1-R05"]="8302"
      GPORT_ARRAY["P770-DC2-R35"]="8303"
      #
      # Exception for the ganglia host:
      G_HOST="ganglia"
      G_PORT_R="8399"
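The two arrays chain together: the model and S/N key selects the managed system name, and that name in turn selects the UDP port. The following sketch illustrates the lookup. It is not the code of the modified init script. It parses the file as text instead of sourcing it, so it does not need a shell with associative arrays, and the function names are made up for this example.

```shell
# Print the value stored under key $2 in array $1 of config file $3,
# e.g. from the line MSYS_ARRAY["8204-E8A_0000001"]="P550-DC1-R01".
cfg_lookup() {
    awk -F'"' -v arr="$1" -v key="$2" '
        $1 == (arr "[") && $2 == key { print $4; exit }' "$3"
}

# Print "<managed system name> <udp port>" for the model_serial key $1,
# using config file $2. Fails if either lookup comes back empty.
resolve_target() {
    name=$(cfg_lookup MSYS_ARRAY "$1" "$2")
    [ -n "$name" ] || return 1
    port=$(cfg_lookup GPORT_ARRAY "$name" "$2")
    [ -n "$port" ] || return 1
    echo "$name $port"
}

# Example:
#   resolve_target 9117-MMB_0004711 /etc/ganglia/gmond.init.conf
```

A failing lookup is a useful early warning that a new managed system has not been added to the mapping yet.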

Stop the currently running `gmond` client process, switch the `gmond` client init script to the modified version from `ganglia-addons-base` and start a new `gmond` client process:

    $ /etc/rc.d/init.d/gmond stop
    $ ln -sf /opt/freeware/libexec/ganglia-addons-base/gmond /etc/rc.d/init.d/gmond
    $ /etc/rc.d/init.d/gmond start

The modified `gmond` client init script will now determine the model and S/N of the managed system the LPAR is running on. It will also evaluate the configuration from `/etc/ganglia/gmond.init.conf` and, in conjunction with the model and S/N of the managed system, determine values for the Ganglia configuration entries “cluster → name”, “host → location” and “udp_send_channel → port”. The new configuration values will be merged with the configuration template file `/etc/ganglia/gmond.tpl` and written to a temporary `gmond` configuration file. If the temporary and current `gmond` configuration files differ, the current `gmond` configuration file will be overwritten by the temporary one and the `gmond` client process will be restarted. If the temporary and current `gmond` configuration files match, the temporary one will be deleted and no further action is taken.

The `aaa_base` package will add an errnotify ODM entry, which will call the shell script `/opt/freeware/libexec/aaa_base/post_lpm.sh`. This shell script will trigger actions upon the generation of errpt messages with the label `CLIENT_PMIG_DONE`, which signify the end of a successful LPM operation. Currently there are two actions performed in the shell script, one of which is to restart the `gmond` client process on the LPAR. Since this restart is done by calling the `gmond` client init script with the `restart` parameter, a new `gmond` configuration file will be created along the lines of the sequence explained in the previous paragraph. With the new configuration file, the freshly started `gmond` will now send its performance data to the `gmond` server process that is responsible for the managed system the LPAR is now running on.

After some time (usually a few minutes) new directories should start to appear under the `/ganglia/rrds/<managed system>/` directories on the Ganglia server. To complete the setup those directories have to be moved to `/ganglia/rrds/LPARS/` and be replaced by appropriate symbolic links. E.g.:

    $ cd /ganglia/rrds/
    $ HST="saixtest.mydomain"; for DR in P550-DC1-R01 P770-DC1-R05 P770-DC2-R35; do
        pushd ${DR}
        [[ -d ${HST} ]] && mv -i ${HST} ../LPARS/
        ln -s ../LPARS/${HST} ${HST}
        popd
      done
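Coming back to the modified init script described above: its “only touch the configuration if something changed” step can be sketched in a few lines of shell. This is an illustration of the idea, not the code from `ganglia-addons-base`, and the function name is made up.

```shell
# Install the freshly generated config $1 as $2 if the two differ.
# Returns 0 if $2 was replaced (the caller should then restart gmond)
# and 1 if nothing changed (the candidate file is removed).
install_if_changed() {
    candidate=$1
    current=$2
    if [ -f "$current" ] && cmp -s "$candidate" "$current"; then
        rm -f "$candidate"
        return 1
    fi
    mv -f "$candidate" "$current"
}

# Example, with a hypothetical temporary file name:
#   install_if_changed /etc/ganglia/gmond.conf.new /etc/ganglia/gmond.conf \
#       && echo "configuration changed, gmond needs a restart"
```

Comparing before replacing keeps routine restarts cheap: `gmond` is only bounced when the LPAR really reports to a different managed system.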

There is a helper script `800_ganglia_check_links.sh` in the `ganglia-addons-base` package which should be run daily to bring up possible inconsistencies, but can also be run interactively from a shell on the Ganglia server.

If everything was set up correctly, the LPARs should now report to the Ganglia server based on which managed system they currently run on. This should be reflected in the Ganglia web interface by every LPAR being inserted under the appropriate managed system (aka cluster). After a successful LPM operation, the LPAR should, after a short delay, automatically be sorted in under the new managed system (aka cluster) in the Ganglia web interface.
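The kind of sanity check the helper script mentioned above is meant for might look roughly like the following sketch. It is not the actual `800_ganglia_check_links.sh`. It simply reports every entry below a managed system directory that is a real directory instead of a symbolic link, or a symbolic link pointing nowhere.

```shell
# Check the entries below <rrds>/<managed system>/ for RRD root $1.
# LPARS/ itself and the __SummaryInfo__ directories are skipped.
check_links() {
    rrds=$1
    for entry in "$rrds"/*/*; do
        [ -e "$entry" ] || [ -L "$entry" ] || continue
        case $entry in
            "$rrds"/LPARS/*|*/__SummaryInfo__) continue ;;
        esac
        if [ ! -L "$entry" ]; then
            echo "not a symlink: $entry"
        elif [ ! -d "$entry" ]; then
            echo "dangling symlink: $entry"
        fi
    done
}

# Example on the Ganglia server:
#   check_links /ganglia/rrds
```

A “not a symlink” line typically shows up shortly after an LPAR has reported to a managed system for the first time, before its directory was moved to `LPARS`.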

Debugging

If some problem arises or things generally do not go according to plan, here are some hints where to look for issues:

- Check if a `gmetad` and all `gmond` server processes are running on the Ganglia server. Also check with `netstat` or `lsof` if the `gmond` server processes are listening on the correct UDP ports.
- Check if the `gmond` configuration file on the Ganglia client LPARs is successfully written. Check if the content of `/etc/ganglia/gmond.conf` is accurate with regard to your environment. Especially check if the TCP/UDP ports on the Ganglia client LPAR match the ones on the Ganglia server.
- Check if the Ganglia client LPARs can reach the Ganglia server (e.g. with `ping`). On the Ganglia server check if UDP packets are coming in from the LPAR in question (e.g. with `tcpdump`). On the Ganglia client LPAR, check if UDP packets are sent out to the correct Ganglia server IP address (e.g. with `tcpdump`).
- Check if the directory or symbolic link `/ganglia/rrds/<managed system>/<hostname>/` exists. Check if there are RRD files in the directory. Check if they've recently been written.
- Enable logging and increase the debug level on the Ganglia client and server processes, restart them and check for error messages.
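The “recently been written” check from the list above can be automated with a small helper. This sketch assumes a find(1) that supports `-mmin`. The function name and the default of 10 minutes are arbitrary choices for this example.

```shell
# List RRD files below directory $1 that were NOT modified within the last
# $2 minutes (default: 10). The trailing slash makes find follow $1 if it
# is a symbolic link. Assumes find(1) supports -mmin.
stale_rrds() {
    find "$1/" -type f -name '*.rrd' ! -mmin "-${2:-10}"
}

# Example on the Ganglia server:
#   stale_rrds /ganglia/rrds/P550-DC1-R01/saixtest.mydomain 10
```

No output means all RRD files of that host were updated recently.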

Conclusions

With the described configuration changes and procedures, the already existing value of Ganglia as a performance monitoring tool for the IBM Power environment can be increased even further. A more “firewall-friendly” Ganglia configuration is possible and has been used for several years in a heavily segmented and firewalled network environment. As new features like LPM are added to the IBM Power environment, increasing its overall flexibility and productivity, measures have to be taken to ensure that surrounding tools and infrastructure like Ganglia are also up to the task.