2012-07-21 // Apache Logfile Analysis with AWStats and X-Forwarded-For Headers

When running a webserver behind a (reverse) proxy or load-balancer, it's often necessary to log the original client's IP address, taken from the X-Forwarded-For header of the HTTP request if one is present. Otherwise one will only see the IP address of the proxy or load-balancer in the webserver's access logs. With the Apache webserver this is usually done by changing the LogFormat directive in httpd.conf from:

```
LogFormat "%h %l %u %t \"%r\" %>s %O \"%{Referer}i\" \"%{User-Agent}i\"" combined
```

to something like this:

```
LogFormat "%{X-Forwarded-For}i %l %u %t \"%r\" %>s %O \"%{Referer}i\" \"%{User-Agent}i\"" combined
```

Unfortunately this solution fails if you're so “lucky” as to have a setup where clients can access the webserver both directly and through the proxy/load-balancer. In that case, direct requests to the webserver carry no X-Forwarded-For header, so the client address is logged as a “-” (dash). There are some solutions to this out on the net that use Apache's SetEnvIf configuration directive.

This wasn't really satisfying for me, since it prevents you from telling which requests were made directly and which went through the proxy/load-balancer. So I simply inserted the X-Forwarded-For field right after the regular remote hostname field (%h):

```
LogFormat "%h %{X-Forwarded-For}i %l %u %t \"%r\" %>s %O \"%{Referer}i\" \"%{User-Agent}i\"" combined
```

Two example access log entries then look like this:

```
10.0.0.1 - - - [21/Jul/2012:18:32:45 +0200] "GET / HTTP/1.1" 200 22043 "-" "User-Agent: Lynx/2.8.4 ..."
192.168.0.1 10.0.0.1 - - [21/Jul/2012:18:32:45 +0200] "GET / HTTP/1.1" 200 22860 "-" "User-Agent: Lynx/2.8.4 ..."
```

The first entry shows a direct request from client 10.0.0.1; the second shows a proxied/load-balanced request from the same client, logged together with the proxy's/load-balancer's IP address 192.168.0.1.
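To make the distinction concrete, here is a minimal Python sketch that parses lines in the modified format and reports the client and, if present, the proxy. The regular expression and the `classify` function are my own illustration, not part of Apache or AWStats. The sketch assumes the X-Forwarded-For header holds a single address; a list of several comma-separated addresses would contain spaces and break this simple field split.

```python
import re

# Illustrative regex for the modified "combined" format:
# %h %{X-Forwarded-For}i %l %u %t "%r" %>s %O "%{Referer}i" "%{User-Agent}i"
# Assumption: X-Forwarded-For contains one address (no comma-separated list).
LOG_RE = re.compile(
    r'(?P<remote>\S+) (?P<xff>\S+) (?P<logname>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def classify(line):
    """Return (client_ip, proxy_ip or None) for one access log line."""
    m = LOG_RE.match(line)
    if m is None:
        raise ValueError("line does not match the expected LogFormat")
    if m["xff"] == "-":
        # No X-Forwarded-For header: direct access, %h is the client itself.
        return m["remote"], None
    # Proxied access: %h is the proxy/load-balancer, X-Forwarded-For the client.
    return m["xff"], m["remote"]


if __name__ == "__main__":
    direct = ('10.0.0.1 - - - [21/Jul/2012:18:32:45 +0200] "GET / HTTP/1.1" '
              '200 22043 "-" "User-Agent: Lynx/2.8.4 ..."')
    proxied = ('192.168.0.1 10.0.0.1 - - [21/Jul/2012:18:32:45 +0200] '
               '"GET / HTTP/1.1" 200 22860 "-" "User-Agent: Lynx/2.8.4 ..."')
    print(classify(direct))
    print(classify(proxied))
```

Run against the two example entries, the direct request yields client 10.0.0.1 with no proxy, and the proxied one yields the same client together with 192.168.0.1 as the proxy.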

Now if you're also using AWStats to analyse the webserver's access logs, things get a bit tricky. AWStats supports X-Forwarded-For entries in access logs, but only for a format like the second LogFormat above (X-Forwarded-For in place of %h) or the SetEnvIf solution mentioned earlier. This is because the relevant AWStats LogFormat tags, %host and %host_proxy, are mutually exclusive. An AWStats configuration based on the Type 1 LogFormat, like:

```
LogFormat = "%host %host_proxy %other %logname %time1 %methodurl %code %bytesd %refererquot %uaquot"
```

will not work, since %host_proxy will overwrite %host entries even if the %host_proxy field does not contain an IP address, like in the above direct access example. The result is a lot of hosts named “-” (dash) in your AWStats statistics. This can be fixed by the following simple, quick'n'dirty patch to the AWStats sources:

- awstats.pl

```diff
diff -u wwwroot/cgi-bin/awstats.pl.orig wwwroot/cgi-bin/awstats.pl
--- wwwroot/cgi-bin/awstats.pl.orig	2012-07-18 19:28:59.000000000 +0200
+++ wwwroot/cgi-bin/awstats.pl	2012-07-18 19:30:52.000000000 +0200
@@ -17685,6 +17685,18 @@
 			next;
 		}
 
+		my $pos_proxy = $pos_host - 1;
+		if ( $field[$pos_host] =~ /^-$/ ) {
+			if ($Debug) {
+				debug(
+					" Empty field host_proxy, using value "
+					  . $field[$pos_proxy] . " from first host field instead",
+					4
+				);
+			}
+			$field[$pos_host] = $field[$pos_proxy];
+		}
+
 		if ($Debug) {
 			my $string = '';
 			foreach ( 0 .. @field - 1 ) {
```

Please bear in mind that this is a “works for me”™ kind of solution, which might break AWStats in all kinds of other ways.
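To see what the patch changes, here is a small Python model of the host selection. It is not AWStats code, and the function names are hypothetical. It assumes, as the patch's use of `$pos_host - 1` implies, that with `%host %host_proxy` AWStats takes the host from the second field, so the first field is only reachable as a fallback.

```python
from collections import Counter

# Illustrative model only, not AWStats code. Assumption: with the format
# "%host %host_proxy %other ...", field 0 holds Apache's %h, field 1 holds
# the X-Forwarded-For value, and AWStats reads the host from field 1.
POS_HOST = 1


def host_unpatched(fields):
    """Unpatched behaviour: %host_proxy always wins, even if it is '-'."""
    return fields[POS_HOST]


def host_patched(fields):
    """Patched behaviour: fall back to the preceding %host field on '-'."""
    if fields[POS_HOST] == "-":
        return fields[POS_HOST - 1]
    return fields[POS_HOST]


def count_hosts(lines, pick_host):
    """Count requests per reported host; only the leading fields matter."""
    return Counter(pick_host(line.split()) for line in lines)


LINES = [
    '10.0.0.1 - - - [21/Jul/2012:18:32:45 +0200] "GET / HTTP/1.1" 200 22043 "-" "User-Agent: Lynx/2.8.4 ..."',
    '192.168.0.1 10.0.0.1 - - [21/Jul/2012:18:32:45 +0200] "GET / HTTP/1.1" 200 22860 "-" "User-Agent: Lynx/2.8.4 ..."',
]

if __name__ == "__main__":
    print(count_hosts(LINES, host_unpatched))
    print(count_hosts(LINES, host_patched))
```

With the two example entries, the unpatched variant reports one request from a host named “-” and one from 10.0.0.1, while the patched variant attributes both requests to 10.0.0.1.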

2012-06-17 // Webserver - Windows vs. Unix

Recently at work, I was given the task of evaluating alternatives to the current OS platform running the company homepage. Sounds trivial enough, doesn't it? But every subject in a moderately complex corporate environment has some history, lots of pitfalls and a considerable amount of politics attached to it, so why should this particular one be an exception?

The current environment was running a WAMP (Windows, Apache, MySQL, PHP) stack with a PHP-based CMS and was not performing well at all. The systems would cave under even minimal connection load, not to mention user rushes during campaign launches. The situation dragged on for over a year and a half, while expert consultants were brought in, measurements were made, fingers were pointed and even new hardware was purchased. Nothing helped; the new hardware actually brought the system down even faster, because it could accept more initial user requests and was thus overrun sooner. IT management drew a lot of fire for the situation, but nonetheless stuck with the “Microsoft, our strategic platform” mantra. I guess at some point the pressure got too high even for those guys.

This is where I, the Unix guy with almost no M$ knowledge, got the task of evaluating whether or not an “alternative OS platform” could do the job. Hot potato, anyone?

So I went ahead and set up four different environments that were at least somewhere within the scope of our IT department's supported systems (so no *BSD, no Solaris, etc.):

1. Linux on the newly purchased x86 hardware mentioned above
2. Linux on our VMware ESX cluster
3. Linux as an LPAR on our IBM Power systems
4. AIX as an LPAR on our IBM Power systems

Apache, MySQL and PHP were all the same versions as in the Windows environment. The CMS and content were direct copies from the Windows production systems. Without any further tuning, I ran some load tests with siege:

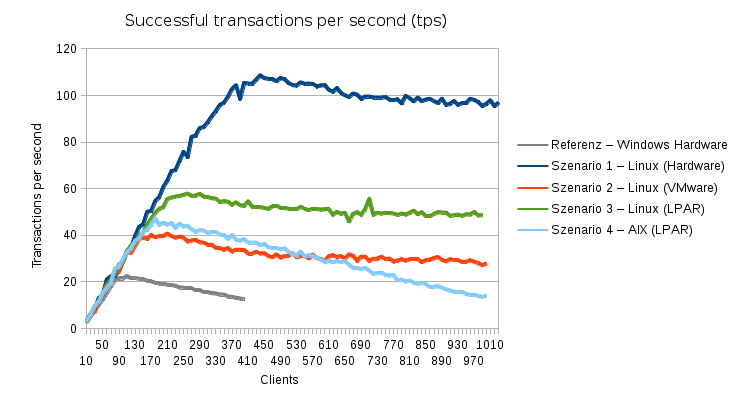

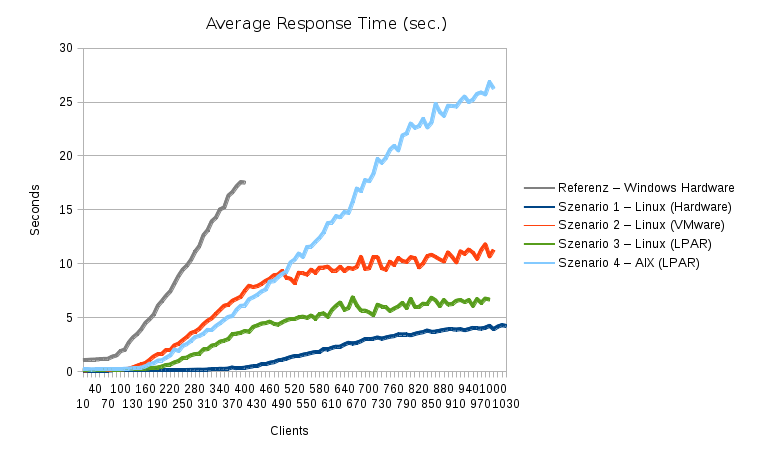

Compared to the Windows environment (gray line), scenario 1 (dark blue line) delivered about 5 times the performance on the exact same hardware. The virtualized scenarios 2, 3 and 4 did not perform as well in absolute terms. But since they had only about half the CPU resources available in scenario 1, their relative performance isn't too bad after all. Also notable is that all four scenarios served requests up to the test limit of 1,000 parallel clients, whereas Windows started dropping requests at about 300 parallel clients.
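One way to make the “relative performance” argument explicit is to normalize each scenario's throughput by its share of CPU resources. This is a back-of-the-envelope sketch only: $R_i$ (throughput of scenario $i$) and $C_i$ (CPU resources of scenario $i$) are symbols introduced here, not values read off the graphs.

$$
P_i^{\text{rel}} = \frac{R_i / R_1}{C_i / C_1},
\qquad
\frac{C_i}{C_1} \approx \frac{1}{2} \;\; (i = 2, 3, 4)
\quad\Longrightarrow\quad
P_i^{\text{rel}} \approx 2\,\frac{R_i}{R_1}
$$

As a purely hypothetical example, a virtualized scenario reaching 40% of scenario 1's throughput would, per unit of CPU, deliver about 80% of it.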

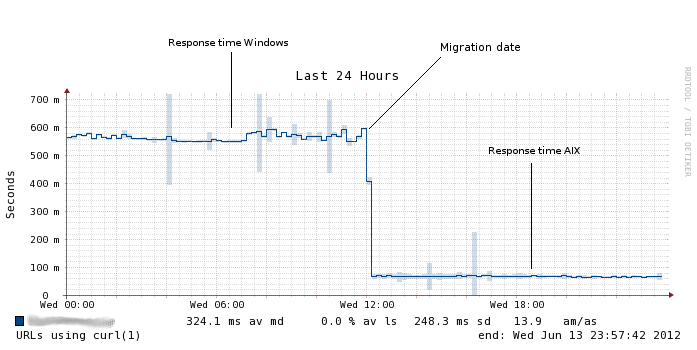

Presented with those numbers, management decided that the company webserver environment should be migrated to an “alternative OS platform”. AIX on Power systems was chosen for operational reasons, even though it didn't deliver the highest performance among the tested scenarios. The new webserver environment went live at noon on Wednesday of last week, with the switchover of the load-balancing groups. Take a look at what happened to the response time measurements around that time:

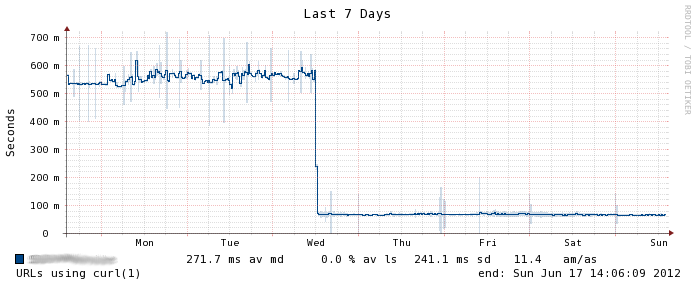

Also very interesting is the weekly graph a few days after the migration:

Note the largely reduced jitter in the response time!