2013-07-20 // AIX RPMs: GestioIP, net-snmp and netdisco-mibs

Following up on the previous posts about AIX RPM packages, here are three new AIX RPM packages related to network and IP address management (IPAM):

GestioIP: A web-based open source IP address management (IPAM) system (GestioIP). See the README.AIX file for further installation instructions.

Net-SNMP: Basically the same as Michael Perzl's Net-SNMP package, but built with the IBM XL C/C++ compiler against the Perl package provided here: AIX RPMs: Perl and CPAN Perl Modules.

netdisco-mibs: A substantial collection of SNMP MIB files from a number of different network equipment vendors provided by the Netdisco project.

2013-07-20 // AIX RPMs: Perl and CPAN Perl Modules

Following up on the previous posts about AIX RPM packages, here are several new AIX RPM packages related to Perl:

Perl: Basically the same as Michael Perzl's Perl package, but with a more recent upstream version and built with the IBM XL C/C++ compiler. Thus it does not depend on libgcc being installed, but requires all Perl modules to be built with the IBM XL C/C++ compiler as well.

CPAN Perl Modules: Several Perl modules from CPAN, built with the IBM XL C/C++ compiler and to be used with the above Perl package:

2013-07-20 // AIX RPMs: MySQL, percona-ganglia-mysql and phpMyAdmin

Following up on the previous posts about AIX RPM packages, here are three new AIX RPM packages related to the MySQL database:

MySQL: A fast, stable, multi-user, multi-threaded, open source SQL database system. Unfortunately, at the moment only MySQL versions 5.1.x or lower can be built successfully on AIX. More recent versions of MySQL were developed with a strong focus on Linux rather than on portability, which is why their build fails on AIX.

During the first startup of the MySQL server via the /etc/rc.d/init.d/mysql init script, several initial configuration tasks are performed. The command /opt/freeware/bin/mysql_install_db is called to initialize the MySQL data directory /srv/mysql/ with system databases and tables. Also, the MySQL user procadm is added. This user is used for maintenance, startup and shutdown tasks, mainly triggered by the init script /etc/rc.d/init.d/mysql.

In addition to the stock MySQL files, the simple custom backup script /opt/freeware/libexec/mysql/mysql_backup.sh is provided. After defining a database user with the appropriate permissions to back up all databases, the user and its password should be added to the configuration file /opt/freeware/etc/mysql/mysql_backup.conf of the backup script. With the run_parts wrapper scripts:

    /opt/freeware/etc/run_parts/daily.d/050_mysql.sh
    /opt/freeware/etc/run_parts/monthly.d/050_mysql.sh
    /opt/freeware/etc/run_parts/weekly.d/050_mysql.sh

simple daily, weekly and monthly full database backups are done to the filesystem (/srv/backup/mysql/). From there they can be picked up by a regular filesystem backup with e.g. the TSM BA client.

percona-ganglia-mysql: A Ganglia addon provided by Percona, designed to retrieve performance metrics from a MySQL database system and insert them into a Ganglia monitoring system. The package percona-ganglia-mysql-scripts is to be installed on the host running the MySQL database. It depends on the ganglia-addons-base package, specifically on the cronjob (/opt/freeware/etc/run_parts/conf.d/ganglia-addons.sh) defined by this package. In the context of this cronjob all available scripts in the directory /opt/freeware/libexec/ganglia-addons/ are executed. For this specific Ganglia addon, a MySQL user with the necessary permissions to access the MySQL performance metrics and the MySQL user's password need to be configured:

- /opt/freeware/etc/ganglia-addons/ganglia-addons-mysql.cfg

    ENABLED="yes"
    #SS_GET_MYSQL_STATS="/opt/freeware/libexec/percona-ganglia-mysql/ss_get_mysql_stats.php"
    MY_USER="root"
    MY_PASS="insert your password here"
    #MY_HOST="127.0.0.1"
    #MY_PORT="3306"
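The configuration file consists of plain shell variable assignments, so an addon script could presumably read it by sourcing it. The following is a minimal sketch under that assumption and not the script shipped in the package: the function name `load_mysql_addon_cfg` is hypothetical, and the fallback values for `MY_HOST` and `MY_PORT` are simply taken from the commented-out lines above.

```shell
#!/bin/sh
# Hypothetical helper (not part of the package): read a ganglia-addons
# style config file and print the effective MySQL connection target.
# Assumption: the file contains plain KEY="value" shell assignments.
load_mysql_addon_cfg() {
    cfg_file="$1"

    # Start from a clean state so values from an earlier call do not leak in.
    unset ENABLED MY_USER MY_PASS MY_HOST MY_PORT

    if [ ! -r "${cfg_file}" ]; then
        echo "config not readable: ${cfg_file}" >&2
        return 2
    fi

    # Source the assignments into the current shell.
    . "${cfg_file}"

    # Skip the addon unless it was explicitly enabled.
    [ "${ENABLED:-no}" = "yes" ] || return 1

    # Fall back to the values shown in the commented-out config lines.
    MY_HOST="${MY_HOST:-127.0.0.1}"
    MY_PORT="${MY_PORT:-3306}"

    echo "${MY_USER}@${MY_HOST}:${MY_PORT}"
}
```

Called as `load_mysql_addon_cfg /opt/freeware/etc/ganglia-addons/ganglia-addons-mysql.cfg`, the function returns a non-zero status when the addon is disabled, so a calling script can skip the metric collection altogether.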

The package percona-ganglia-mysql is to be installed on the host running the Ganglia web interface. It contains templates for the customization of the MySQL metrics within the Ganglia Web 2 interface. To use the templates, install the RPM and copy the Ganglia Web 2 (gweb2) templates from /opt/freeware/share/percona-ganglia-mysql/graph.d/*.json to the Ganglia Web 2 graph directory. Usually this is /ganglia/graph.d/ in the DocumentRoot of the webserver, but it may be different in the individual Ganglia Web 2 installation, depending on the setting of the:

    $conf['gweb_root']
    $conf['graphdir']

configuration parameters.

To include the MySQL templates in all host definitions, copy the report view definition /opt/freeware/share/percona-ganglia-mysql/conf/default.json to the Ganglia Web 2 configuration directory. Usually this is /ganglia/conf/ in the DocumentRoot of the webserver, but it may be different in the individual Ganglia Web 2 installation, depending on the setting of the:

    $conf['views_dir']

configuration parameter.
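The two copy steps described above can be sketched as a small shell function. This is only an illustration: the function name `install_gweb2_templates` is hypothetical, and the graph and views directories have to be passed in explicitly because they depend on the `$conf['graphdir']` and `$conf['views_dir']` settings of the individual installation.

```shell
#!/bin/sh
# Hypothetical helper: copy the packaged gweb2 graph templates and the
# default report view into a Ganglia Web 2 installation.
# Arguments:
#   $1  directory holding the packaged templates (graph.d/ and conf/)
#   $2  Ganglia Web 2 graph directory  (see $conf['graphdir'])
#   $3  Ganglia Web 2 views directory  (see $conf['views_dir'])
install_gweb2_templates() {
    src_dir="$1"
    graph_dir="$2"
    views_dir="$3"

    # Refuse to run if any of the involved directories is missing.
    for dir in "${src_dir}/graph.d" "${src_dir}/conf" "${graph_dir}" "${views_dir}"; do
        if [ ! -d "${dir}" ]; then
            echo "missing directory: ${dir}" >&2
            return 1
        fi
    done

    # Graph templates for the MySQL metrics.
    cp "${src_dir}"/graph.d/*.json "${graph_dir}/" || return 1

    # Report view definition that applies to all hosts.
    cp "${src_dir}/conf/default.json" "${views_dir}/" || return 1
}
```

A call could look like `install_gweb2_templates /opt/freeware/share/percona-ganglia-mysql <DocumentRoot>/ganglia/graph.d <DocumentRoot>/ganglia/conf`, with `<DocumentRoot>` replaced by the DocumentRoot of the local webserver.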

To include the MySQL templates only in individual host view configurations for specific hosts, copy the report view definition /opt/freeware/share/percona-ganglia-mysql/conf/default.json to the Ganglia Web 2 configuration directory with the file name host_<hostname>.json.

phpMyAdmin: A web-based administration tool for MySQL, MariaDB and Drizzle database systems. After the installation of the RPM package, phpMyAdmin can be used by directing a browser to http://<hostname>/phpmyadmin/. To enable more advanced phpMyAdmin functions, several additional configuration steps are necessary:

Import the phpMyAdmin database schema:

    $ mysql -u root -p < /opt/freeware/doc/phpmyadmin-<version>/examples/create_tables.sql

Create a database user for phpMyAdmin and grant the necessary MySQL permissions:

    $ mysql -u root -p
    mysql> GRANT USAGE ON mysql.* TO 'pma'@'localhost' IDENTIFIED BY 'password';
    mysql> GRANT SELECT (
               Host, User, Select_priv, Insert_priv, Update_priv, Delete_priv,
               Create_priv, Drop_priv, Reload_priv, Shutdown_priv, Process_priv,
               File_priv, Grant_priv, References_priv, Index_priv, Alter_priv,
               Show_db_priv, Super_priv, Create_tmp_table_priv, Lock_tables_priv,
               Execute_priv, Repl_slave_priv, Repl_client_priv
           ) ON mysql.user TO 'pma'@'localhost';
    mysql> GRANT SELECT ON mysql.db TO 'pma'@'localhost';
    mysql> GRANT SELECT ON mysql.host TO 'pma'@'localhost';
    mysql> GRANT SELECT (Host, Db, User, Table_name, Table_priv, Column_priv)
               ON mysql.tables_priv TO 'pma'@'localhost';
    mysql> GRANT SELECT, INSERT, UPDATE, DELETE ON phpmyadmin.* TO 'pma'@'localhost';

Configure phpMyAdmin to use the MySQL user and password:

- /opt/freeware/etc/phpmyadmin/config-db.php

    <?php
    $dbname = 'phpmyadmin';
    $dbuser = 'pma';
    $dbpass = 'password';
    ?>

Log out of phpMyAdmin and log back in, or restart your browser.

RPM packages

2013-07-02 // AIX RPMs: AWStats, ganglia-addons-httpd, httpd and mod_perl

Following up on the previous posts about AIX RPM packages, here are four new AIX RPM packages from the webserver and webserver monitoring category:

AWStats: AWStats is an open source logfile analyzer which can parse and process a number of popular logfile formats and generate graphical reports from the information gathered.

In order to run AWStats on a host, you need to edit the configuration files in /opt/freeware/etc/awstats/ according to your needs and environment. To perform regular updates of the generated statistics, a cronjob, preferably using the run_parts wrapper, should be set up. E.g. for a statistics update every ten minutes:

    8,18,28,38,48,58 * * * * /opt/freeware/libexec/run_parts/run-parts -x /opt/freeware/share/awstats/tools/update.sh

ganglia-addons-httpd: A Ganglia addon to gather performance metrics from the Apache webserver via the Apache mod_status module. The package ganglia-addons-httpd-scripts is to be installed on the host running the Apache webserver. It depends on the ganglia-addons-base package, specifically on the cronjob (/opt/freeware/etc/run_parts/conf.d/ganglia-addons.sh) defined by this package. In the context of this cronjob all available scripts in the directory /opt/freeware/libexec/ganglia-addons/ are executed. For this specific Ganglia addon, the Apache module mod_status needs to be enabled:

    $ a2enmod status
    Enabling module status.
    Run '/etc/rc.d/init.d/httpd restart' to activate new configuration

and the module option set to ExtendedStatus On:

- /opt/freeware/etc/httpd/mods-enabled/status.conf

    <Location /server-status>
        SetHandler server-status
        Order deny,allow
        Deny from all
        Allow from 127.0.0.1 ::1
    </Location>

    # Keep track of extended status information for each request
    ExtendedStatus On

After those changes the Apache processes need to be restarted:

    $ apachectl -t
    Syntax OK
    $ /etc/rc.d/init.d/httpd restart
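Once mod_status is active, the counters can be read locally in the machine-readable form that Apache serves at `/server-status?auto`. The following is a minimal sketch of how such output might be turned into metric values; it is not the script shipped with the addon, the function name `parse_server_status` and the output key names are hypothetical, and only a few of the available fields are picked out. The "Total Accesses" and "Total kBytes" lines should only show up when ExtendedStatus is set to On.

```shell
#!/bin/sh
# Hypothetical helper: extract a few counters from the machine-readable
# mod_status output ("/server-status?auto"), read from standard input.
# Each input line has the form "Field Name: value".
parse_server_status() {
    awk -F': ' '
        $1 == "Total Accesses" { printf "total_accesses=%s\n", $2 }
        $1 == "Total kBytes"   { printf "total_kbytes=%s\n", $2 }
        $1 == "BusyWorkers"    { printf "busy_workers=%s\n", $2 }
        $1 == "IdleWorkers"    { printf "idle_workers=%s\n", $2 }
    '
}
```

Assuming an HTTP client such as curl is installed, the function could be fed with `curl -s 'http://127.0.0.1/server-status?auto' | parse_server_status`. The request has to originate from the local host, since the Location block above only allows access from 127.0.0.1 and ::1.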

The package

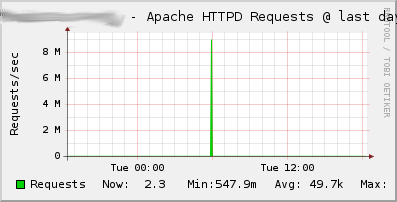

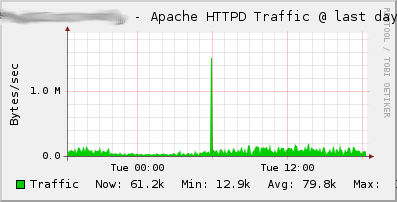

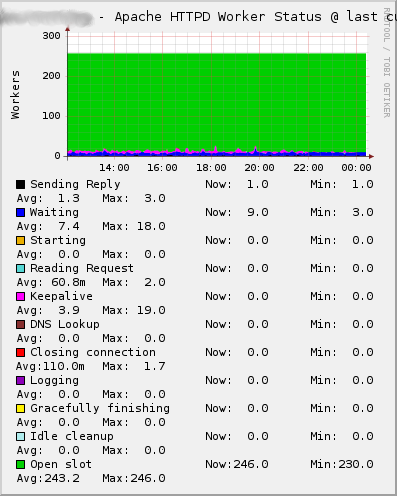

ganglia-addons-httpdis to be installed on the host running the Ganglia webinterface. It contains templates for the customization of the Apache metrics within the Ganglia Web 2 interface. See theREADME.templatesfile for further installation instructions. Here are samples of the three graphs created with those Ganglia monitoring templates:httpd: A slightly modified version of Michael Perzls Apache packages. The packaging has been done in a different way, so that the different MPMs as well as their development files are part of different sub-packages, which can be installed seperately. The contents of the configuration directory

/opt/freeware/etc/httpdare modeled closer to the setup one usually finds on current Debian Linux distributions, namely with the subdirectoriesconf.d,mods-available,mods-enabled,sites-availableandsites-enabled. “mods” (modules) and “sites” are enabled by symlinking the respective files from themods-availableandsites-availabledirectories into the directoriesmods-enabledandsites-enabled. The commandsa2enmod,a2dismod,a2ensiteanda2dissiteknown from current Debian Linux environments have been added to ease the job of creating and removing the necessary symlinks.To run a at least minimal httpd setup, you need to enable some modules after installing the RPM packages:

    $ for MOD in alias authz_host log_config log_io mime; do a2enmod ${MOD}; done
    Enabling module alias.
    Run '/etc/rc.d/init.d/httpd restart' to activate new configuration!
    Enabling module authz_host.
    Run '/etc/rc.d/init.d/httpd restart' to activate new configuration!
    Enabling module log_config.
    Run '/etc/rc.d/init.d/httpd restart' to activate new configuration!
    Enabling module log_io.
    Run '/etc/rc.d/init.d/httpd restart' to activate new configuration!
    Enabling module mime.
    Run '/etc/rc.d/init.d/httpd restart' to activate new configuration!
    $ apachectl -t
    Syntax OK
    $ /etc/rc.d/init.d/httpd start
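To illustrate the symlink mechanism behind those commands, here is a minimal sketch of what enabling and disabling a module boils down to. It is not the a2enmod or a2dismod script from the package: the function names `enable_mod` and `disable_mod` are hypothetical, and the file naming (`<module>.load` and `<module>.conf`) is an assumption borrowed from the Debian layout.

```shell
#!/bin/sh
# Hypothetical re-implementation of the core of "a2enmod"/"a2dismod".
# Enabling a module symlinks its files from mods-available into
# mods-enabled; disabling removes those symlinks again.
# Assumption: module files are named <module>.load and <module>.conf.
enable_mod() {
    conf_root="$1"
    mod="$2"
    status=1    # stays 1 if no file for the module was found

    for file in "${conf_root}/mods-available/${mod}.load" \
                "${conf_root}/mods-available/${mod}.conf"; do
        [ -f "${file}" ] || continue
        name="$(basename "${file}")"
        # Relative link, so the tree stays valid if conf_root is moved.
        ln -sf "../mods-available/${name}" \
            "${conf_root}/mods-enabled/${name}" || return 1
        status=0
    done

    return "${status}"
}

disable_mod() {
    conf_root="$1"
    mod="$2"
    # Only the links are removed, the files in mods-available are kept.
    rm -f "${conf_root}/mods-enabled/${mod}.load" \
          "${conf_root}/mods-enabled/${mod}.conf"
}
```

With this layout a module stays installed in mods-available at all times, and the running configuration is defined solely by the set of links in mods-enabled. As with the real commands, a restart of httpd is still needed for a change to take effect.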

mod_perl: Basically the same as Michael Perzl's mod_perl package, but built with the IBM XL C/C++ compiler against the Perl package provided here: AIX RPMs: Perl and CPAN Perl Modules. Thus it does not need libgcc to be installed.

2013-06-29 // An Example of Flash Storage Performance - IBM Tivoli Storage Manager (TSM)

With all the buzz and hype around flash storage, maybe you have already asked yourself whether flash based storage is really what it's cracked up to be. Maybe you're already convinced that flash based storage can ease or even solve some of the issues and challenges you're facing within your infrastructure, but you need some numbers to convince upper management to provide the necessary (and still quite substantial) funding for it. Well, in any case here's a hands-on, before-and-after example of the use of flash based storage.

Initial Setup

We're currently running two IBM Tivoli Storage Manager (TSM) servers for our backup infrastructure. They're still on TSM version 5.5.7.0, so the dreaded hard limit of 13GB for the transaction log space of the catalog database applies. The databases on each TSM server are around 100GB in size. The database volumes as well as the transaction log volumes reside on RAID-1 LUNs provided by two IBM DCS3700 storage systems, which are distributed over two datacenters. The LUNs are backed by 300GB 15k RPM SAS disks. Redundancy is provided by TSM database and transaction log volume mirroring over the two storage systems. The two DCS3700 and the TSM server hardware (IBM Power with AIX) are attached to a dual-fabric 8Gbit FC SAN. The following image shows an overview of the whole backup infrastructure, with some additional components not discussed in this context:

Performance Problems

With the increasing number of Windows 2008 servers to be backed up as TSM clients, we noticed very heavy database and transaction log activity on the TSM servers. At busy times, this would even lead to a situation where the 12GB transaction logs would fill up and a manual recovery of the TSM server would be necessary. The strain on the database was so high, that the database backup process triggered to free up transaction log space would just sit there, showing no activity. Sometimes two hours would pass between the start of the database backup process and the first database pages being processed. After some research and raising a PMR with IBM, it turned out the handling of the Windows 2008 system state backup was modeled with the DB2 backed catalog database of TSM version 6 in mind. The TSM version 5 embedded database was apparently not considered any more and just not up to the task (see IBM TSM Flash "Windows system state backup with a V5 Tivoli Storage Manager server"). So the suggested solutions were:

Migration to TSM server version 6.

Spread client backup windows over time and/or set up additional TSM server instances to take over the load.

Disable Windows system state backup.

For various reasons we ended up spreading the system state backup of the Windows clients over time, which allowed us to get by for quite some time. But in the end even this didn't help anymore.

Flash Solution

Luckily, around that time we still had some free space left over on our four TMS RamSan 630 and 810 systems. After updating the OS of the TSM servers to AIX 6.1.8.2 and installing iFix IV38225, we were able to attach the flash based LUNs with proper multipathing support. We then moved the database and transaction log volume groups over to the flash storage with the AIX migratepv command. The effect was incredible and instantaneous: without any other changes to the client or server environment, the database backup trigger at 50% transaction log space didn't fire even once during the next backup window! Gathering the available historical runtime data of database backup processes and graphing them over time confirmed the incredible performance gain for database backups on both TSM server instances:

Another I/O intensive operation on the TSM server is the expiration of backup and archive objects to be deleted from the catalog database. In our case this process is run on a daily basis on each TSM server. Given the above results, chances were that we'd see an improvement in this area too. As above, we gathered the available historical runtime data of expire inventory processes and graphed them over time:

Monitoring the situation for several weeks after the migration to flash based storage volumes showed us several interesting facts that we found to be characteristic of our overall experience with flash vs. disk based storage:

As expected an increased number of I/O operations per second (IOPS) and thus a generally increased throughput.

In this particular case this is reflected by a number of symptoms:

A largely reduced number of unintended database backups that were triggered by a filling transaction log.

A generally lower transaction log usage, which was probably due to more database transactions being able to complete in time due to the increased number of available IOPS.

A largely reduced runtime of the deliberate database backups and the expire inventory processes started as part of the daily TSM server maintenance.

A very low variance of the response time, which is independent of the load on the system. This is especially in contrast to disk based storage systems, where one can observe a snowballing effect of increasing latency under medium and heavy load. In the above example graphs this is represented indirectly by the low level runtime plateau after the migration to flash based storage.

A shift of the performance bottleneck into other areas. Previously, disk I/O was the rather convenient excuse for performance issues, and reducing it was the best countermeasure. With the introduction of flash based storage the focus has shifted, and other areas like CPU, memory, network and storage-network latency are now put in the spotlight.