2013-12-28 // Nagios Monitoring - IBM SVC and Storwize

Some time ago I wrote a rather crude Nagios plugin to monitor IBM SAN Volume Controller (SVC) systems. The plugin was initially targeted at version 4.3.x of the SVC software on the 2145-8F2 nodes we used back then. Since the initial implementation of the plugin we have upgraded the hardware and software of our SVC systems several times and are now at version 7.1.x of the SVC software on 2145-CG8 nodes. Recently we also got some IBM Storwize V3700 storage arrays, which share the same code base as the SVC, but lack some of its features and provide some additional ones. A code and functional review of the original plugin for the SVC as well as an adaptation for the Storwize arrays seemed to be in order. The result is the two plugins check_ibm_svc.pl and check_ibm_storwize.pl. They share a lot of common code with the original plugin, but are maintained separately for the simple reason that IBM might develop the SVC and the Storwize code in slightly different, incompatible directions.

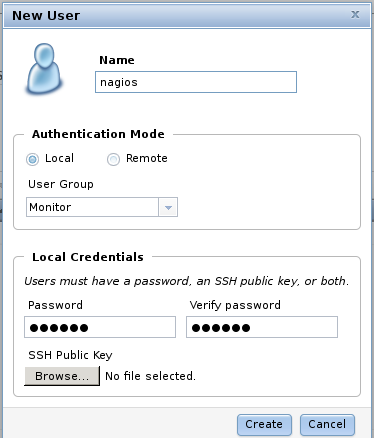

In order to run the plugins, you need to have the command line tool wbemcli from the Standards Based Linux Instrumentation (SBLIM) project installed on the Nagios system. In my case the wbemcli command line tool is placed in /opt/sblim-wbemcli/bin/wbemcli. If you use a different path, adapt the “wbemcli” entry of the configuration hash “%conf” according to your environment. The plugins use wbemcli to query the CIMOM service on the SVC or Storwize system for the necessary information. Therefore, a network connection from the Nagios system to the SVC or Storwize systems on port TCP/5989 must be allowed, and a user with the “Monitor” authorization must be created on the SVC or Storwize systems:

IBM_2145:svc:admin$ mkuser -name nagios -usergrp Monitor -password <password>

Alternatively, create the user in the WebUI.
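To get a feeling for what a CIMOM query with wbemcli looks like, the following sketch prints a query command line for one CIM class. It is an illustration only: the “ei” (enumerate instances) operation, the “/root/ibm” namespace and the class name “IBMTSSVC_Cluster” are assumptions about a typical SVC CIM agent and are not taken from the plugin source, and “cimom_query_cmd” is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: build the wbemcli call for querying the CIMOM service.
# Assumptions (not from the plugin source): the "ei" operation,
# the /root/ibm namespace and the class name passed in.

WBEMCLI="${WBEMCLI:-/opt/sblim-wbemcli/bin/wbemcli}"

# Print the wbemcli command line for one CIM class.
# Usage: cimom_query_cmd <host> <user> <password> <class>
cimom_query_cmd() {
    printf '%s -noverify -nl ei https://%s:%s@%s:5989/root/ibm:%s\n' \
        "$WBEMCLI" "$2" "$3" "$1" "$4"
}

# Example: show the command for the cluster class of a host named "svc1".
cimom_query_cmd svc1 nagios '<password>' IBMTSSVC_Cluster
```

Running the printed command by hand from the Nagios system is a quick way to confirm that port TCP/5989 is reachable and that the “nagios” user can log in. Passwords containing characters that are special in URLs would probably need to be encoded first.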

Generic

Optional: Enable SNMP traps to be sent to the Nagios system on each of the SVC or Storwize devices. This requires SNMPD and SNMPTT to be already set up on the Nagios system. Log in to the SVC or Storwize CLI and issue the command:

IBM_2145:svc:admin$ mksnmpserver -ip <IP> -community public -error on -warning on -info on -port 162

where <IP> is the IP address of your Nagios system. Alternatively, in the SVC or Storwize WebUI navigate to:

-> Settings -> Event Notifications -> SNMP -> <Enter IP of the Nagios system and the SNMPD's community string>

Verify that port UDP/162 on the Nagios system can be reached from the SVC or Storwize devices.

SAN Volume Controller (SVC)

For SAN Volume Controller (SVC) devices the whole setup looks like this:

Download the Nagios plugin check_ibm_svc.pl and place it in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

$ mv -i check_ibm_svc.pl /usr/lib/nagios/plugins/
$ chmod 755 /usr/lib/nagios/plugins/check_ibm_svc.pl

Adjust the plugin settings according to your environment. Edit the following variable assignments:

my %conf = (
    wbemcli => '/opt/sblim-wbemcli/bin/wbemcli',

Define the following Nagios commands. In this example this is done in the file /etc/nagios-plugins/config/check_svc.cfg:

# check SVC Backend Controller status
define command {
    command_name    check_svc_bc
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C BackendController
}
# check SVC Backend SCSI Status
define command {
    command_name    check_svc_btspe
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C BackendTargetSCSIPE
}
# check SVC MDisk status
define command {
    command_name    check_svc_bv
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C BackendVolume
}
# check SVC Cluster status
define command {
    command_name    check_svc_cl
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C Cluster
}
# check SVC MDiskGroup status
define command {
    command_name    check_svc_csp
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C ConcreteStoragePool
}
# check SVC Ethernet Port status
define command {
    command_name    check_svc_eth
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C EthernetPort
}
# check SVC FC Port status
define command {
    command_name    check_svc_fcp
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C FCPort
}
# check SVC FC Port statistics
define command {
    command_name    check_svc_fcp_stats
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C FCPortStatistics
}
# check SVC I/O Group status and memory allocation
define command {
    command_name    check_svc_iogrp
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C IOGroup -w $ARG1$ -c $ARG2$
}
# check SVC WebUI status
define command {
    command_name    check_svc_mc
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C MasterConsole
}
# check SVC VDisk Mirror status
define command {
    command_name    check_svc_mirror
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C MirrorExtent
}
# check SVC Node status
define command {
    command_name    check_svc_node
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C Node
}
# check SVC Quorum Disk status
define command {
    command_name    check_svc_quorum
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C QuorumDisk
}
# check SVC Storage Volume status
define command {
    command_name    check_svc_sv
    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C StorageVolume
}

Replace <user> and <password> with the name and password of the CIMOM user created above.

Define a group of services in your Nagios configuration to be checked for each SVC system:

# check sshd
define service {
    use                    generic-service
    hostgroup_name         svc
    service_description    Check_SSH
    check_command          check_ssh
}
# check_tcp CIMOM
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_CIMOM
    check_command          check_tcp!5989
}
# check_svc_bc
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Backend_Controller
    check_command          check_svc_bc
}
# check_svc_btspe
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Backend_Target
    check_command          check_svc_btspe
}
# check_svc_bv
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Backend_Volume
    check_command          check_svc_bv
}
# check_svc_cl
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Cluster
    check_command          check_svc_cl
}
# check_svc_csp
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Storage_Pool
    check_command          check_svc_csp
}
# check_svc_eth
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Ethernet_Port
    check_command          check_svc_eth
}
# check_svc_fcp
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_FC_Port
    check_command          check_svc_fcp
}
# check_svc_fcp_stats
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_FC_Port_Statistics
    check_command          check_svc_fcp_stats
}
# check_svc_iogrp
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_IO_Group
    check_command          check_svc_iogrp!102400!204800
}
# check_svc_mc
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Master_Console
    check_command          check_svc_mc
}
# check_svc_mirror
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Mirror_Extents
    check_command          check_svc_mirror
}
# check_svc_node
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Node
    check_command          check_svc_node
}
# check_svc_quorum
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Quorum
    check_command          check_svc_quorum
}
# check_svc_sv
define service {
    use                    generic-service-pnp
    hostgroup_name         svc
    service_description    Check_Storage_Volume
    check_command          check_svc_sv
}

Replace generic-service with your Nagios service template. Replace generic-service-pnp with your Nagios service template that has performance data processing enabled.

Define hosts in your Nagios configuration for each SVC device. In this example it is named svc1:

define host {
    use          svc
    host_name    svc1
    alias        SAN Volume Controller 1
    address      10.0.0.1
    parents      parent_lan
}

Replace svc with your Nagios host template for SVC devices. Adjust the address and parents parameters according to your environment.

Define a hostgroup in your Nagios configuration for all SVC systems. In this example it is named svc. The above checks are run against each member of the hostgroup:

define hostgroup {
    hostgroup_name    svc
    alias             IBM SVC Clusters
    members           svc1
}

Run a configuration check and, if successful, reload the Nagios process:

$ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
$ /etc/init.d/nagios3 reload

The new hosts and services should soon show up in the Nagios web interface.
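Since most of the SVC command definitions differ only in their short name and the value passed to “-C”, they can also be generated instead of typed. The following is a minimal sketch of that idea, not part of the plugin; the function name “svc_command_defs” and the “name:Check” input format are made up for this example.

```shell
#!/bin/sh
# Sketch: emit Nagios command definitions for a list of SVC checks.
# Each argument is "<short name>:<-C argument>"; <user> and <password>
# stay placeholders exactly as in the hand-written definitions.

svc_command_defs() {
    for item in "$@"; do
        name="${item%%:*}"     # part before the first colon
        check="${item#*:}"     # part after the first colon
        printf 'define command {\n'
        printf '    command_name    check_svc_%s\n' "$name"
        printf '    command_line    $USER1$/check_ibm_svc.pl -H $HOSTNAME$ -u <user> -p <password> -C %s\n' "$check"
        printf '}\n\n'
    done
}

# Example: three of the checks used in this post.
svc_command_defs bc:BackendController cl:Cluster node:Node
```

The IOGroup check takes the additional “-w $ARG1$ -c $ARG2$” thresholds and is therefore not covered by this simple generator.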

Storwize

For Storwize devices the whole setup looks like this:

Download the Nagios plugin check_ibm_storwize.pl and place it in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

$ mv -i check_ibm_storwize.pl /usr/lib/nagios/plugins/
$ chmod 755 /usr/lib/nagios/plugins/check_ibm_storwize.pl

Adjust the plugin settings according to your environment. Edit the following variable assignments:

my %conf = (
    wbemcli => '/opt/sblim-wbemcli/bin/wbemcli',

Define the following Nagios commands. In this example this is done in the file /etc/nagios-plugins/config/check_storwize.cfg:

# check Storwize RAID Array status
define command {
    command_name    check_storwize_array
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C Array
}
# check Storwize Hot Spare coverage
define command {
    command_name    check_storwize_asc
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C ArrayBasedOnDiskDrive
}
# check Storwize MDisk status
define command {
    command_name    check_storwize_bv
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C BackendVolume
}
# check Storwize Cluster status
define command {
    command_name    check_storwize_cl
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C Cluster
}
# check Storwize MDiskGroup status
define command {
    command_name    check_storwize_csp
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C ConcreteStoragePool
}
# check Storwize Disk status
define command {
    command_name    check_storwize_disk
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C DiskDrive
}
# check Storwize Enclosure status
define command {
    command_name    check_storwize_enc
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C Enclosure
}
# check Storwize Ethernet Port status
define command {
    command_name    check_storwize_eth
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C EthernetPort
}
# check Storwize FC Port status
define command {
    command_name    check_storwize_fcp
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C FCPort
}
# check Storwize I/O Group status and memory allocation
define command {
    command_name    check_storwize_iogrp
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C IOGroup -w $ARG1$ -c $ARG2$
}
# check Storwize Hot Spare status
define command {
    command_name    check_storwize_is
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C IsSpare
}
# check Storwize WebUI status
define command {
    command_name    check_storwize_mc
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C MasterConsole
}
# check Storwize VDisk Mirror status
define command {
    command_name    check_storwize_mirror
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C MirrorExtent
}
# check Storwize Node status
define command {
    command_name    check_storwize_node
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C Node
}
# check Storwize Quorum Disk status
define command {
    command_name    check_storwize_quorum
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C QuorumDisk
}
# check Storwize Storage Volume status
define command {
    command_name    check_storwize_sv
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C StorageVolume
}

Replace <user> and <password> with the name and password of the CIMOM user created above.

Define a group of services in your Nagios configuration to be checked for each Storwize system:

# check sshd
define service {
    use                    generic-service
    hostgroup_name         storwize
    service_description    Check_SSH
    check_command          check_ssh
}
# check_tcp CIMOM
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_CIMOM
    check_command          check_tcp!5989
}
# check_storwize_array
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Array
    check_command          check_storwize_array
}
# check_storwize_asc
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Array_Spare_Coverage
    check_command          check_storwize_asc
}
# check_storwize_bv
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Backend_Volume
    check_command          check_storwize_bv
}
# check_storwize_cl
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Cluster
    check_command          check_storwize_cl
}
# check_storwize_csp
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Storage_Pool
    check_command          check_storwize_csp
}
# check_storwize_disk
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Disk_Drive
    check_command          check_storwize_disk
}
# check_storwize_enc
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Enclosure
    check_command          check_storwize_enc
}
# check_storwize_eth
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Ethernet_Port
    check_command          check_storwize_eth
}
# check_storwize_fcp
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_FC_Port
    check_command          check_storwize_fcp
}
# check_storwize_iogrp
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_IO_Group
    check_command          check_storwize_iogrp!102400!204800
}
# check_storwize_is
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Hot_Spare
    check_command          check_storwize_is
}
# check_storwize_mc
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Master_Console
    check_command          check_storwize_mc
}
# check_storwize_mirror
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Mirror_Extents
    check_command          check_storwize_mirror
}
# check_storwize_node
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Node
    check_command          check_storwize_node
}
# check_storwize_quorum
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Quorum
    check_command          check_storwize_quorum
}
# check_storwize_sv
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Storage_Volume
    check_command          check_storwize_sv
}

Replace generic-service with your Nagios service template. Replace generic-service-pnp with your Nagios service template that has performance data processing enabled.

Define hosts in your Nagios configuration for each Storwize device. In this example it is named storwize1:

define host {
    use          disk
    host_name    storwize1
    alias        Storwize Disk Storage 1
    address      10.0.0.1
    parents      parent_lan
}

Replace disk with your Nagios host template for storage devices. Adjust the address and parents parameters according to your environment.

Define a hostgroup in your Nagios configuration for all Storwize systems. In this example it is named storwize. The above checks are run against each member of the hostgroup:

define hostgroup {
    hostgroup_name    storwize
    alias             IBM Storwize Devices
    members           storwize1
}

Run a configuration check and, if successful, reload the Nagios process:

$ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
$ /etc/init.d/nagios3 reload

The new hosts and services should soon show up in the Nagios web interface.
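With this many command and service definitions it is easy to reference a check_command that was never defined. The following sketch cross-checks two configuration files for that; it assumes one directive per line, as in regular Nagios configuration files, and the function name “missing_commands” as well as the temporary example files are made up for illustration.

```shell
#!/bin/sh
# Sketch: list check_commands used by services that have no command definition.
# $1: file with "define command" blocks, $2: file with "define service" blocks.

missing_commands() {
    awk '
        FILENAME == ARGV[1] {
            if ($1 == "command_name") defined[$2] = 1
            next
        }
        $1 == "check_command" {
            split($2, parts, "!")              # drop the !ARG1!ARG2 suffix
            if (!(parts[1] in defined)) print parts[1]
        }
    ' "$1" "$2"
}

# Example with two small temporary files.
tmpdir="$(mktemp -d)"
cat > "$tmpdir/commands.cfg" <<'EOF'
define command {
    command_name    check_storwize_array
    command_line    $USER1$/check_ibm_storwize.pl -H $HOSTNAME$ -u <user> -p <password> -C Array
}
EOF
cat > "$tmpdir/services.cfg" <<'EOF'
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_Array
    check_command          check_storwize_array
}
define service {
    use                    generic-service-pnp
    hostgroup_name         storwize
    service_description    Check_IO_Group
    check_command          check_storwize_iogrp!102400!204800
}
EOF
missing_commands "$tmpdir/commands.cfg" "$tmpdir/services.cfg"   # prints check_storwize_iogrp
rm -rf "$tmpdir"
```

Commands that are defined elsewhere, such as check_ssh and check_tcp, are reported as missing unless the file holding their definitions is the one passed as the first argument. The configuration check run by Nagios itself remains the authoritative test.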

Generic

If the optional step in the “Generic” section above was done, SNMPTT also needs to be configured to be able to understand the incoming SNMP traps from Storwize systems. This can be achieved by the following steps:

Download the IBM SVC/Storwize SNMP MIB matching your software version from ftp://ftp.software.ibm.com/storage/san/sanvc/.

Convert the IBM SVC/Storwize SNMP MIB definitions in SVC_MIB_<version>.MIB into a format that SNMPTT can understand.

$ /opt/snmptt/snmpttconvertmib --in=MIB/SVC_MIB_7.1.0.MIB --out=/opt/snmptt/conf/snmptt.conf.ibm-svc-710
...
Done

Total translations:        3
Successful translations:   3
Failed translations:       0

Edit the trap severity according to your requirements, e.g.:

$ vim /opt/snmptt/conf/snmptt.conf.ibm-svc-710
...
EVENT tsveETrap .1.3.6.1.4.1.2.6.190.1 "Status Events" Critical
...
EVENT tsveWTrap .1.3.6.1.4.1.2.6.190.2 "Status Events" Warning
...

Optional: Apply the following patch to the configuration to reduce the number of false positives:

- snmptt.conf.ibm-svc-710

--- /opt/snmptt/conf/snmptt.conf.ibm-svc-710.orig 2013-12-28 21:16:25.000000000 +0100
+++ /opt/snmptt/conf/snmptt.conf.ibm-svc-710 2013-12-28 21:17:55.000000000 +0100
@@ -29,11 +29,21 @@
   16: tsveMPNO
   17: tsveOBJN
 EDESC
+# Filter and ignore the following events that are not really warnings
+#   "Error ID = 980440": Failed to transfer file from remote node
+#   "Error ID = 981001": Cluster Fabric View updated by fabric discovery
+#   "Error ID = 981014": LUN Discovery failed
+#   "Error ID = 982009": Migration complete
 #
+EVENT tsveWTrap .1.3.6.1.4.1.2.6.190.2 "Status Events" Normal
+FORMAT tsve information trap $*
+MATCH $3: (Error ID = 980440|981001|981014|982009)
 #
+# All remaining events with this OID are actually warnings
 #
 EVENT tsveWTrap .1.3.6.1.4.1.2.6.190.2 "Status Events" Warning
 FORMAT tsve warning trap $*
+MATCH $3: !(Error ID = 980440|981001|981014|982009)
 SDESC
 tsve warning trap
 Variables:
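The effect of the two MATCH rules can be tried out on the command line before touching SNMPTT. The sketch below mimics the intended classification with grep. Note that it deliberately uses a slightly stricter grouping than the patch: in the patch as written, the “Error ID = ” prefix belongs only to the first alternative, so the other three numbers match anywhere in the variable. The function name “trap_severity” and the sample trap texts are hypothetical.

```shell
#!/bin/sh
# Sketch: classify a trap text the way the patched SNMPTT rules are meant to.
# The ID list is copied from the patch; putting only the IDs inside the
# parentheses is a choice made for this sketch.

IGNORED_IDS='980440|981001|981014|982009'

trap_severity() {
    if printf '%s\n' "$1" | grep -Eq "Error ID = ($IGNORED_IDS)"; then
        echo Normal
    else
        echo Warning
    fi
}

# Hypothetical sample texts, only the "Error ID = <number>" part matters.
trap_severity 'Error ID = 981001 : Cluster Fabric View updated by fabric discovery'
trap_severity 'Error ID = 123456 : some other warning event'
```

The first call prints “Normal” and the second one “Warning”. Whether the stricter grouping is also wanted in the SNMPTT configuration depends on how the trap variable is formatted on your systems.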

Add the new configuration file to be included in the global SNMPTT configuration and restart the SNMPTT daemon:

$ vim /opt/snmptt/snmptt.ini
...
[TrapFiles]
snmptt_conf_files = <<END
...
/opt/snmptt/conf/snmptt.conf.ibm-svc-710
...
END

$ /etc/init.d/snmptt reload

Download the Nagios plugin check_snmp_traps.sh and place it in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

$ mv -i check_snmp_traps.sh /usr/lib/nagios/plugins/
$ chmod 755 /usr/lib/nagios/plugins/check_snmp_traps.sh

Define the following Nagios command to check for SNMP traps in the SNMPTT database. In this example this is done in the file /etc/nagios-plugins/config/check_snmp_traps.cfg:

# check for snmp traps
define command {
    command_name    check_snmp_traps
    command_line    $USER1$/check_snmp_traps.sh -H $HOSTNAME$:$HOSTADDRESS$ -u <user> -p <pass> -d <snmptt_db>
}

Replace user, pass and snmptt_db with values suitable for your SNMPTT database environment.

Add another service in your Nagios configuration to be checked for each SVC:

# check snmptraps
define service {
    use                    generic-service
    hostgroup_name         svc
    service_description    Check_SNMP_traps
    check_command          check_snmp_traps
}

or Storwize system:

# check snmptraps
define service {
    use                    generic-service
    hostgroup_name         storwize
    service_description    Check_SNMP_traps
    check_command          check_snmp_traps
}

Optional: Define a serviceextinfo to display a folder icon next to the Check_SNMP_traps service check for each SVC:

define serviceextinfo {
    hostgroup_name         svc
    service_description    Check_SNMP_traps
    notes                  SNMP Alerts
    #notes_url             http://<hostname>/nagios3/nagtrap/index.php?hostname=$HOSTNAME$
    #notes_url             http://<hostname>/nagios3/nsti/index.php?perpage=100&hostname=$HOSTNAME$
}

or Storwize device:

define serviceextinfo {
    hostgroup_name         storwize
    service_description    Check_SNMP_traps
    notes                  SNMP Alerts
    #notes_url             http://<hostname>/nagios3/nagtrap/index.php?hostname=$HOSTNAME$
    #notes_url             http://<hostname>/nagios3/nsti/index.php?perpage=100&hostname=$HOSTNAME$
}

This icon provides a direct link to the SNMPTT web interface with a filter for the selected host. Uncomment the notes_url depending on which web interface (nagtrap or nsti) is used. Replace hostname with the FQDN or IP address of the server running the web interface.

Run a configuration check and, if successful, reload the Nagios process:

$ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
$ /etc/init.d/nagios3 reload
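The “check, then reload only if the check succeeded” step comes up several times in this post, so it may be worth wrapping it. The following is a minimal sketch using the paths from this post; the function name “nagios_safe_reload” and the three variables are hypothetical and can be overridden from the environment.

```shell
#!/bin/sh
# Sketch: reload Nagios only when the configuration check succeeds.
# Paths are the ones used in this post; names are made up for this example.

NAGIOS_BIN="${NAGIOS_BIN:-/usr/sbin/nagios3}"
NAGIOS_CFG="${NAGIOS_CFG:-/etc/nagios3/nagios.cfg}"
NAGIOS_INIT="${NAGIOS_INIT:-/etc/init.d/nagios3}"

nagios_safe_reload() {
    if "$NAGIOS_BIN" -v "$NAGIOS_CFG" >/dev/null; then
        "$NAGIOS_INIT" reload
    else
        echo "configuration check failed, not reloading" >&2
        return 1
    fi
}
```

After sourcing the snippet, calling nagios_safe_reload runs both steps. When the check fails, running the “nagios3 -v” command by hand shows the actual error messages, which the function hides.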

Optional: If you're running PNP4Nagios v0.6 or later to graph Nagios performance data, you can use the PNP4Nagios templates in pnp4nagios_storwize.tar.bz2 and pnp4nagios_svc.tar.bz2 to beautify the graphs. Download the PNP4Nagios templates pnp4nagios_svc.tar.bz2 and pnp4nagios_storwize.tar.bz2 and place them in the PNP4Nagios template directory, in this example /usr/share/pnp4nagios/html/templates/:

$ tar jxf pnp4nagios_storwize.tar.bz2
$ mv -i check_storwize_*.php /usr/share/pnp4nagios/html/templates/
$ chmod 644 /usr/share/pnp4nagios/html/templates/check_storwize_*.php

$ tar jxf pnp4nagios_svc.tar.bz2
$ mv -i check_svc_*.php /usr/share/pnp4nagios/html/templates/
$ chmod 644 /usr/share/pnp4nagios/html/templates/check_svc_*.php

All done, you should now have a complete Nagios-based monitoring solution for your IBM SVC and Storwize systems.

2013-12-16 // Debian Wheezy on IBM Power LPAR with Multipath Support

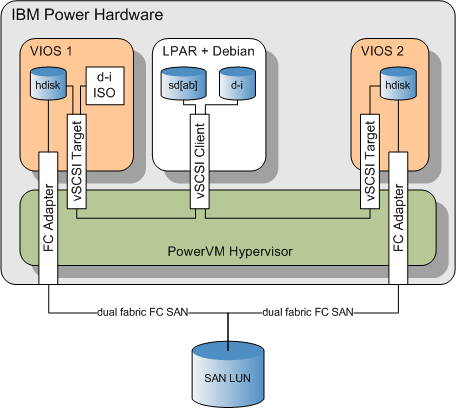

Earlier, in the post Debian Wheezy on IBM Power LPAR, I wrote about installing Debian on an IBM Power LPAR. Admittedly the previous post was a bit sparse, especially about the hoops one has to jump through when installing Debian in a multipathed setup.

To address this, here's a more complete, step-by-step guide on how to successfully install Debian Wheezy with multipathing enabled. The environment is basically the same as described before in Debian Wheezy on IBM Power LPAR:

Prepare an LPAR. In this example the partition ID is “4”. The VSCSI server adapters on the two VIO servers have the adapter ID “2”.

Prepare the VSCSI disk mappings on the two VIO servers. The hdisks are backed by a SAN / IBM SVC setup. In this example the disk used is hdisk230 on both VIO servers; it has the UUID 332136005076801918127980000000000032904214503IBMfcp.

Disk mapping on VIOS #1:

root@vios1-p730-342:/$ lscfg -vl hdisk230
  hdisk230  U5877.001.0080249-P1-C9-T1-W5005076801205A2F-L7A000000000000  MPIO FC 2145

        Manufacturer................IBM
        Machine Type and Model......2145
        ROS Level and ID............0000
        Device Specific.(Z0)........0000063268181002
        Device Specific.(Z1)........0200646
        Serial Number...............60050768019181279800000000000329

root@vios1-p730-342:/$ /usr/ios/cli/ioscli mkvdev -vdev hdisk230 -vadapter vhost0
root@vios1-p730-342:/$ /usr/ios/cli/ioscli lsmap -all | less
SVSA            Physloc                                      Client Partition ID
--------------- -------------------------------------------- ------------------
vhost0          U8231.E2D.06AB34T-V1-C2                      0x00000004

VTD                   vtscsi0
Status                Available
LUN                   0x8100000000000000
Backing device        hdisk230
Physloc               U5877.001.0080249-P1-C9-T1-W5005076801205A2F-L7A000000000000
Mirrored              false

Disk mapping on VIOS #2:

root@vios2-p730-342:/$ lscfg -vl hdisk230
  hdisk230  U5877.001.0080249-P1-C6-T1-W5005076801305A2F-L7A000000000000  MPIO FC 2145

        Manufacturer................IBM
        Machine Type and Model......2145
        ROS Level and ID............0000
        Device Specific.(Z0)........0000063268181002
        Device Specific.(Z1)........0200646
        Serial Number...............60050768019181279800000000000329

root@vios2-p730-342:/$ /usr/ios/cli/ioscli mkvdev -vdev hdisk230 -vadapter vhost0
root@vios2-p730-342:/$ /usr/ios/cli/ioscli lsmap -all | less
SVSA            Physloc                                      Client Partition ID
--------------- -------------------------------------------- ------------------
vhost0          U8231.E2D.06AB34T-V2-C2                      0x00000004

VTD                   vtscsi0
Status                Available
LUN                   0x8100000000000000
Backing device        hdisk230
Physloc               U5877.001.0080249-P1-C6-T1-W5005076801305A2F-L7A000000000000
Mirrored              false
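Both VIO servers have to map the very same SVC volume, which can be seen from the identical “Serial Number” field in the two lscfg outputs. A small sketch for comparing them is shown below. It assumes the lscfg output of each VIO server has been saved to a file beforehand; the function names “lscfg_serial” and “same_lun” are made up for this example.

```shell
#!/bin/sh
# Sketch: extract the "Serial Number" field from `lscfg -vl <hdisk>` output
# so that the values from both VIO servers can be compared.

# Reads lscfg output on stdin, prints the serial number.
lscfg_serial() {
    sed -n 's/^[[:space:]]*Serial Number\.*\([0-9A-Fa-f]*\).*$/\1/p'
}

# $1, $2: files holding the lscfg output of VIOS #1 and VIOS #2.
# Succeeds only if both files contain the same, non-empty serial number.
same_lun() {
    s1="$(lscfg_serial < "$1")"
    s2="$(lscfg_serial < "$2")"
    [ -n "$s1" ] && [ "$s1" = "$s2" ]
}

# Example with the serial number line from the output above.
printf '        Serial Number...............60050768019181279800000000000329\n' | lscfg_serial
```

A typical use would be “same_lun vios1.txt vios2.txt && echo same volume”, with the two file names being placeholders for wherever the outputs were saved.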

Prepare the installation media, as a virtual target optical (VTOPT) device on one of the VIO servers:

root@vios1-p730-342:/$ /usr/ios/cli/ioscli mkrep -sp rootvg -size 2G
root@vios1-p730-342:/$ df -g /var/vio/VMLibrary
Filesystem    GB blocks      Free %Used    Iused %Iused Mounted on
/dev/VMLibrary     2.00      1.24   39%        7     1% /var/vio/VMLibrary

source-system$ scp debian-testing-powerpc-netinst_20131214.iso root@vios1-p730-342:/var/vio/VMLibrary/

root@vios1-p730-342:/$ /usr/ios/cli/ioscli lsrep
Size(mb) Free(mb) Parent Pool         Parent Size      Parent Free
    2041     1269 rootvg                    32256             5120

Name                                     File Size Optical         Access
debian-7.2.0-powerpc-netinst.iso               258 None            rw

root@vios1-p730-342:/$ /usr/ios/cli/ioscli mkvdev -vadapter vhost0 -fbo
root@vios1-p730-342:/$ /usr/ios/cli/ioscli loadopt -vtd vtopt0 -disk debian-7.2.0-powerpc-netinst.iso
root@vios1-p730-342:/$ /usr/ios/cli/ioscli lsmap -all | less
SVSA            Physloc                                      Client Partition ID
--------------- -------------------------------------------- ------------------
vhost0          U8231.E2D.06AB34T-V1-C2                      0x00000004

VTD                   vtopt0
Status                Available
LUN                   0x8200000000000000
Backing device        /var/vio/VMLibrary/debian-7.2.0-powerpc-netinst.iso
Physloc
Mirrored              N/A

VTD                   vtscsi0
Status                Available
LUN                   0x8100000000000000
Backing device        hdisk230
Physloc               U5877.001.0080249-P1-C9-T1-W5005076801205A2F-L7A000000000000
Mirrored

Boot the LPAR and enter the SMS menu. Select the “SCSI CD-ROM” device as a boot device:

Select “5. Select Boot Options”:

PowerPC Firmware
Version AL770_052
SMS 1.7 (c) Copyright IBM Corp. 2000,2008 All rights reserved.
-------------------------------------------------------------------------------
Main Menu
1.   Select Language
2.   Setup Remote IPL (Initial Program Load)
3.   Change SCSI Settings
4.   Select Console
5.   Select Boot Options

-------------------------------------------------------------------------------
Navigation Keys:

                                             X = eXit System Management Services
-------------------------------------------------------------------------------
Type menu item number and press Enter or select Navigation key: 5

Select “1. Select Install/Boot Device”:

PowerPC Firmware
Version AL770_052
SMS 1.7 (c) Copyright IBM Corp. 2000,2008 All rights reserved.
-------------------------------------------------------------------------------
Multiboot
1.   Select Install/Boot Device
2.   Configure Boot Device Order
3.   Multiboot Startup <OFF>
4.   SAN Zoning Support

-------------------------------------------------------------------------------
Navigation keys:
M = return to Main Menu
ESC key = return to previous screen         X = eXit System Management Services
-------------------------------------------------------------------------------
Type menu item number and press Enter or select Navigation key: 1

Select “7. List all Devices”:

PowerPC Firmware
Version AL770_052
SMS 1.7 (c) Copyright IBM Corp. 2000,2008 All rights reserved.
-------------------------------------------------------------------------------
Select Device Type
1.   Diskette
2.   Tape
3.   CD/DVD
4.   IDE
5.   Hard Drive
6.   Network
7.   List all Devices

-------------------------------------------------------------------------------
Navigation keys:
M = return to Main Menu
ESC key = return to previous screen         X = eXit System Management Services
-------------------------------------------------------------------------------
Type menu item number and press Enter or select Navigation key: 7

Select “2. SCSI CD-ROM”:

PowerPC Firmware
Version AL770_052
SMS 1.7 (c) Copyright IBM Corp. 2000,2008 All rights reserved.
-------------------------------------------------------------------------------
Select Device
Device  Current  Device
Number  Position  Name
1.        -      Interpartition Logical LAN
        ( loc=U8231.E2D.06AB34T-V4-C4-T1 )
2.        3      SCSI CD-ROM
        ( loc=U8231.E2D.06AB34T-V4-C2-T1-L8200000000000000 )

-------------------------------------------------------------------------------
Navigation keys:
M = return to Main Menu
ESC key = return to previous screen         X = eXit System Management Services
-------------------------------------------------------------------------------
Type menu item number and press Enter or select Navigation key: 2

Select “2. Normal Mode Boot”:

PowerPC Firmware
Version AL770_052
SMS 1.7 (c) Copyright IBM Corp. 2000,2008 All rights reserved.
-------------------------------------------------------------------------------
Select Task

SCSI CD-ROM
    ( loc=U8231.E2D.06AB34T-V4-C2-T1-L8200000000000000 )

1.   Information
2.   Normal Mode Boot
3.   Service Mode Boot

-------------------------------------------------------------------------------
Navigation keys:
M = return to Main Menu
ESC key = return to previous screen         X = eXit System Management Services
-------------------------------------------------------------------------------
Type menu item number and press Enter or select Navigation key: 2

Select “1. Yes”:

PowerPC Firmware
Version AL770_052
SMS 1.7 (c) Copyright IBM Corp. 2000,2008 All rights reserved.
-------------------------------------------------------------------------------
Are you sure you want to exit System Management Services?
1.   Yes
2.   No

-------------------------------------------------------------------------------
Navigation Keys:

                                             X = eXit System Management Services
-------------------------------------------------------------------------------
Type menu item number and press Enter or select Navigation key: 1

At the Debian installer yaboot boot prompt enter “expert disk-detect/multipath/enable=true” to boot the installer image with multipath support enabled:

IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM
IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM
IBM IBM IBM IBM IBM IBM                             IBM IBM IBM IBM IBM IBM
IBM IBM IBM IBM IBM IBM      STARTING SOFTWARE      IBM IBM IBM IBM IBM IBM
IBM IBM IBM IBM IBM IBM        PLEASE WAIT...       IBM IBM IBM IBM IBM IBM
IBM IBM IBM IBM IBM IBM                             IBM IBM IBM IBM IBM IBM
IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM
IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM IBM
-
Elapsed time since release of system processors: 150814 mins 23 secs

Config file read, 1337 bytes

Welcome to Debian GNU/Linux wheezy!

This is a Debian installation CDROM,
built on 20131012-15:01.

[...]

Welcome to yaboot version 1.3.16
Enter "help" to get some basic usage information
boot: expert disk-detect/multipath/enable=true

Go through the installer dialogs “Choose language”, “Configure the keyboard” and “Detect and mount CD-ROM”, and select the configuration items according to your needs and environment.

In the installer dialog “Load installer components from CD” select:

+----------------+ [?] Load installer components from CD +----------------+
|                                                                         |
| All components of the installer needed to complete the install will     |
| be loaded automatically and are not listed here. Some other             |
| (optional) installer components are shown below. They are probably      |
| not necessary, but may be interesting to some users.                    |
|                                                                         |
| Note that if you select a component that requires others, those         |
| components will also be loaded.                                         |
|                                                                         |
| Installer components to load:                                           |
|                                                                         |
| [*] multipath-modules-3.2.0-4-powerpc64-di: Multipath support           |
| [*] network-console: Continue installation remotely using SSH           |
| [*] openssh-client-udeb: secure shell client for the Debian installer   |
|                                                                         |
|     <Go Back>                                              <Continue>   |
|                                                                         |
+-------------------------------------------------------------------------+

Go through the installer dialogs “Detect network hardware”, “Configure the network”, “Continue installation remotely using SSH”, “Set up users and passwords” and “Configure the clock”, and configure the Debian installer network and user settings according to your environment.

Log into the system via SSH and replace the find-partitions binary on the installer:

~ $ /usr/lib/partconf/find-partitions --flag prep
Warning: The driver descriptor says the physical block size is 512 bytes, but Linux says it is 2048 bytes.
A bug has been detected in GNU Parted. Refer to the web site of parted
http://www.gnu.org/software/parted/parted.html for more information of what
could be useful for bug submitting! Please email a bug report to
bug-parted@gnu.org containing at least the version (2.3) and the following
message: Assertion (disk != NULL) at ../../libparted/disk.c:1548 in function
ped_disk_next_partition() failed.
Aborted
~ $ mv -i /usr/lib/partconf/find-partitions /usr/lib/partconf/find-partitions.orig
~ $ scp user@source-system:~/find-partitions /usr/lib/partconf/
~ $ chmod 755 /usr/lib/partconf/find-partitions
~ $ /usr/lib/partconf/find-partitions --flag prep

While still in the SSH login to the Debian installer, manually load the multipath kernel module:

~ $ cd /lib/modules/3.2.0-4-powerpc64/kernel/drivers/md
~ $ modprobe multipath

Return to the virtual terminal and select the installer dialog:

Detect disks

and acknowledge the question regarding parameters for the dm-emc kernel module.

Select the installer dialog:

Partition disks

and select the:

Manual

partitioning method. Then select the entry:

LVM VG mpatha, LV mpatha - 38.7 GB Linux device-mapper (multipath)

Do NOT select one of the entries starting with “SCSI”! If there is no entry offering a “mpath” device path, the most likely cause is that the above step no. 10, loading the multipath kernel module, failed. In this case check the file /var/log/syslog for error messages.

Select “Yes” to create a new partition table:

+-----------------------+ [!!] Partition disks +------------------------+
|                                                                       |
| You have selected an entire device to partition. If you proceed with  |
| creating a new partition table on the device, then all current        |
| partitions will be removed.                                           |
|                                                                       |
| Note that you will be able to undo this operation later if you wish.  |
|                                                                       |
| Create new empty partition table on this device?                      |
|                                                                       |
|      <Go Back>                                     <Yes>    <No>      |
|                                                                       |
+-----------------------------------------------------------------------+

Select “msdos” as a partition table type:

+-------------+ [.] Partition disks +--------------+
|                                                  |
| Select the type of partition table to use.       |
|                                                  |
| Partition table type:                            |
|                                                  |
|     aix                                          |
|     amiga                                        |
|     bsd                                          |
|     dvh                                          |
|     gpt                                          |
|     mac                                          |
|     msdos                                        |
|     sun                                          |
|     loop                                         |
|                                                  |
|      <Go Back>                                   |
|                                                  |
+--------------------------------------------------+

Select the “FREE SPACE” entry on the mpath device to create new partitions:

+------------------------+ [!!] Partition disks +-------------------------+
|                                                                         |
| This is an overview of your currently configured partitions and mount   |
| points. Select a partition to modify its settings (file system, mount   |
| point, etc.), a free space to create partitions, or a device to         |
| initialize its partition table.                                         |
|                                                                         |
|       Guided partitioning                                               |
|       Configure software RAID                                           |
|       Configure the Logical Volume Manager                              |
|       Configure encrypted volumes                                       |
|                                                                         |
|       LVM VG mpatha, LV mpatha - 38.7 GB Linux device-mapper (multipath |
|           pri/log   38.7 GB      FREE SPACE                             |
|       SCSI1 (0,1,0) (sda) - 38.7 GB AIX VDASD                           |
|       SCSI2 (0,1,0) (sdb) - 38.7 GB AIX VDASD                           |
|                                                                         |
|                                                                         |
|      <Go Back>                                                          |
|                                                                         |
+-------------------------------------------------------------------------+

Select “Create a new partition”:

+-----------+ [!!] Partition disks +-----------+
|                                              |
| How to use this free space:                  |
|                                              |
|     Create a new partition                   |
|     Automatically partition the free space   |
|     Show Cylinder/Head/Sector information    |
|                                              |
|      <Go Back>                               |
|                                              |
+----------------------------------------------+

Enter “8” to create a partition of about 7MB size:

+-----------------------+ [!!] Partition disks +------------------------+
|                                                                       |
| The maximum size for this partition is 38.7 GB.                       |
|                                                                       |
| Hint: "max" can be used as a shortcut to specify the maximum size, or |
| enter a percentage (e.g. "20%") to use that percentage of the maximum |
| size.                                                                 |
|                                                                       |
| New partition size:                                                   |
|                                                                       |
| 8____________________________________________________________________ |
|                                                                       |
|      <Go Back>                                        <Continue>      |
|                                                                       |
+-----------------------------------------------------------------------+

Select “Primary” as a partition type:

+-----+ [!!] Partition disks +------+
|                                   |
| Type for the new partition:       |
|                                   |
|     Primary                       |
|     Logical                       |
|                                   |
|      <Go Back>                    |
|                                   |
+-----------------------------------+

Select “Beginning” as the location for the new partition:

+------------------------+ [!!] Partition disks +-------------------------+
|                                                                         |
| Please choose whether you want the new partition to be created at the   |
| beginning or at the end of the available space.                         |
|                                                                         |
| Location for the new partition:                                         |
|                                                                         |
|     Beginning                                                           |
|     End                                                                 |
|                                                                         |
|      <Go Back>                                                          |
|                                                                         |
+-------------------------------------------------------------------------+

Select “PowerPC PReP boot partition” as the partition usage and set the “Bootable flag” to “on”:

+------------------------+ [!!] Partition disks +-------------------------+
|                                                                         |
| You are editing partition #1 of LVM VG mpatha, LV mpatha. No existing   |
| file system was detected in this partition.                             |
|                                                                         |
| Partition settings:                                                     |
|                                                                         |
|     Use as:         PowerPC PReP boot partition                         |
|                                                                         |
|     Bootable flag:  on                                                  |
|                                                                         |
|     Copy data from another partition                                    |
|     Delete the partition                                                |
|     Done setting up the partition                                       |
|                                                                         |
|      <Go Back>                                                          |
|                                                                         |
+-------------------------------------------------------------------------+

Repeat the above steps two more times and create the following partition layout. Then select “Configure the Logical Volume Manager” and acknowledge the partition scheme to be written to disk:

+------------------------+ [!!] Partition disks +-------------------------+
|                                                                         |
| This is an overview of your currently configured partitions and mount   |
| points. Select a partition to modify its settings (file system, mount   |
| point, etc.), a free space to create partitions, or a device to         |
| initialize its partition table.                                         |
|                                                                         |
|       Guided partitioning                                               |
|       Configure software RAID                                           |
|       Configure the Logical Volume Manager                              |
|       Configure encrypted volumes                                       |
|                                                                         |
|       LVM VG mpatha, LV mpatha - 38.7 GB Linux device-mapper (multipath |
|       >     #1  primary    7.3 MB  B  K                                 |
|       >     #2  primary  511.7 MB     f  ext4    /boot                  |
|       >     #5  logical   38.1 GB     K  lvm                            |
|       SCSI1 (0,1,0) (sda) - 38.7 GB AIX VDASD                           |
|                                                                         |
|      <Go Back>                                                          |
|                                                                         |
+-------------------------------------------------------------------------+

Select “Create volume group”:

+----------+ [!!] Partition disks +-----------+
|                                             |
| Summary of current LVM configuration:       |
|                                             |
|   Free Physical Volumes:  1                 |
|   Used Physical Volumes:  0                 |
|   Volume Groups:          0                 |
|   Logical Volumes:        0                 |
|                                             |
| LVM configuration action:                   |
|                                             |
|     Display configuration details           |
|     Create volume group                     |
|     Finish                                  |
|                                             |
|      <Go Back>                              |
|                                             |
+---------------------------------------------+

Enter a volume group name, here “vg00”:

+-----------------------+ [!!] Partition disks +------------------------+
|                                                                       |
| Please enter the name you would like to use for the new volume group. |
|                                                                       |
| Volume group name:                                                    |
|                                                                       |
| vg00_________________________________________________________________ |
|                                                                       |
|      <Go Back>                                        <Continue>      |
|                                                                       |
+-----------------------------------------------------------------------+

Select the device “/dev/mapper/mpathap5” created above as a physical volume for the volume group:

+-----------------+ [!!] Partition disks +------------------+
|                                                           |
| Please select the devices for the new volume group.       |
|                                                           |
| You can select one or more devices.                       |
|                                                           |
| Devices for the new volume group:                         |
|                                                           |
|     [ ] /dev/mapper/mpathap1 (7MB)                        |
|     [ ] /dev/mapper/mpathap2 (511MB; ext4)                |
|     [*] /dev/mapper/mpathap5 (38133MB)                    |
|     [ ] (38133MB; lvm)                                    |
|                                                           |
|      <Go Back>                            <Continue>      |
|                                                           |
+-----------------------------------------------------------+

Create logical volumes according to your needs and environment. See the following list as an example. Note that the logical volume for the root filesystem resides inside the volume group:

+----------------------+ [!!] Partition disks +----------------------+
|                                                                    |
| Current LVM configuration:                                         |
| Unallocated physical volumes:                                      |
|   * none                                                           |
|                                                                    |
| Volume groups:                                                     |
|   * vg00 (38130MB)                                                 |
|     - Uses physical volume: /dev/mapper/mpathap5 (38130MB)         |
|     - Provides logical volume: home (1023MB)                       |
|     - Provides logical volume: root (1023MB)                       |
|     - Provides logical volume: srv (28408MB)                       |
|     - Provides logical volume: swap (511MB)                        |
|     - Provides logical volume: tmp (1023MB)                        |
|     - Provides logical volume: usr (4093MB)                        |
|     - Provides logical volume: var (2046MB)                        |
|                                                                    |
|                             <Continue>                             |
|                                                                    |
+--------------------------------------------------------------------+

Assign filesystems to each of the logical volumes created above. See the following list as an example. Note that only the “PowerPC PReP boot partition” and the partition for the “/boot” filesystem reside outside the volume group:

+------------------------+ [!!] Partition disks +-------------------------+
|                                                                         |
| This is an overview of your currently configured partitions and mount   |
| points. Select a partition to modify its settings (file system, mount   |
| point, etc.), a free space to create partitions, or a device to         |
| initialize its partition table.                                         |
|                                                                         |
|       Guided partitioning                                               |
|       Configure software RAID                                           |
|       Configure the Logical Volume Manager                              |
|       Configure encrypted volumes                                       |
|                                                                         |
|       LVM VG mpatha, LV mpatha - 38.7 GB Linux device-mapper (multipath |
|       >     #1  primary    7.3 MB  B  K                                 |
|       >     #2  primary  511.7 MB        ext4                           |
|       >     #5  logical   38.1 GB     K  lvm                            |
|       LVM VG mpathap1, LV mpathap1 - 7.3 MB Linux device-mapper (linear |
|       LVM VG mpathap2, LV mpathap2 - 511.7 MB Linux device-mapper (line |
|       >     #1           511.7 MB     K  ext4    /boot                  |
|       LVM VG mpathap5, LV mpathap5 - 38.1 GB Linux device-mapper (linea |
|       LVM VG vg00, LV home - 1.0 GB Linux device-mapper (linear)        |
|       >     #1             1.0 GB     f  ext4    /home                  |
|       LVM VG vg00, LV root - 1.0 GB Linux device-mapper (linear)        |
|       >     #1             1.0 GB     f  ext4    /                      |
|       LVM VG vg00, LV srv - 28.4 GB Linux device-mapper (linear)        |
|       >     #1            28.4 GB     f  ext4    /srv                   |
|       LVM VG vg00, LV swap - 511.7 MB Linux device-mapper (linear)      |
|       >     #1           511.7 MB     f  swap    swap                   |
|       LVM VG vg00, LV tmp - 1.0 GB Linux device-mapper (linear)         |
|       >     #1             1.0 GB     f  ext4    /tmp                   |
|       LVM VG vg00, LV usr - 4.1 GB Linux device-mapper (linear)         |
|       >     #1             4.1 GB     f  ext4    /usr                   |
|       LVM VG vg00, LV var - 2.0 GB Linux device-mapper (linear)         |
|       >     #1             2.0 GB     f  ext4    /var                   |
|       SCSI1 (0,1,0) (sda) - 38.7 GB AIX VDASD                           |
|       SCSI2 (0,1,0) (sdb) - 38.7 GB AIX VDASD                           |
|                                                                         |
|       Undo changes to partitions                                        |
|       Finish partitioning and write changes to disk                     |
|                                                                         |
|      <Go Back>                                                          |
|                                                                         |
+-------------------------------------------------------------------------+

Select “Finish partitioning and write changes to disk”.

Select the installer dialog:

Install the base system

Choose a Linux kernel to be installed and select a “targeted” initrd to be created.

Log into the system via SSH again and manually install the necessary multipath packages in the target system:

~ $ chroot /target /bin/bash
root@ststdebian01:/$ mount /sys
root@ststdebian01:/$ mount /proc
root@ststdebian01:/$ apt-get install multipath-tools multipath-tools-boot
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following extra packages will be installed:
  kpartx libaio1
The following NEW packages will be installed:
  kpartx libaio1 multipath-tools multipath-tools-boot
0 upgraded, 4 newly installed, 0 to remove and 0 not upgraded.
Need to get 0 B/258 kB of archives.
After this operation, 853 kB of additional disk space will be used.
Do you want to continue [Y/n]? y
Preconfiguring packages ...
Can not write log, openpty() failed (/dev/pts not mounted?)
Selecting previously unselected package libaio1:powerpc.
(Reading database ... 14537 files and directories currently installed.)
Unpacking libaio1:powerpc (from .../libaio1_0.3.109-3_powerpc.deb) ...
Selecting previously unselected package kpartx.
Unpacking kpartx (from .../kpartx_0.4.9+git0.4dfdaf2b-7~deb7u1_powerpc.deb) ...
Selecting previously unselected package multipath-tools.
Unpacking multipath-tools (from .../multipath-tools_0.4.9+git0.4dfdaf2b-7~deb7u1_powerpc.deb) ...
Selecting previously unselected package multipath-tools-boot.
Unpacking multipath-tools-boot (from .../multipath-tools-boot_0.4.9+git0.4dfdaf2b-7~deb7u1_all.deb) ...
Processing triggers for man-db ...
Can not write log, openpty() failed (/dev/pts not mounted?)
Setting up libaio1:powerpc (0.3.109-3) ...
Setting up kpartx (0.4.9+git0.4dfdaf2b-7~deb7u1) ...
Setting up multipath-tools (0.4.9+git0.4dfdaf2b-7~deb7u1) ...
[ ok ] Starting multipath daemon: multipathd.
Setting up multipath-tools-boot (0.4.9+git0.4dfdaf2b-7~deb7u1) ...
update-initramfs: deferring update (trigger activated)
Processing triggers for initramfs-tools ...
update-initramfs: Generating /boot/initrd.img-3.2.0-4-powerpc64
cat: /sys/devices/vio/modalias: No such device

root@ststdebian01:/$ vi /etc/multipath.conf
defaults {
    getuid_callout "/lib/udev/scsi_id -g -u -d /dev/%n"
    no_path_retry 10
    user_friendly_names yes
}
blacklist {
    devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
    devnode "^hd[a-z][[0-9]*]"
    devnode "^cciss!c[0-9]d[0-9]*[p[0-9]*]"
}
multipaths {
    multipath {
        wwid 360050768019181279800000000000329
    }
}
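The wwid value differs for every disk, so it has to be looked up on the system being installed. One way to do that is to run the same scsi_id call that is configured as getuid_callout above against one of the path devices, for example /lib/udev/scsi_id -g -u -d /dev/sda. The following is only a small sketch of how the resulting value could be turned into the multipaths section; the helper name print_multipath_stanza is made up for this example and is not part of multipath-tools:

```shell
#!/bin/sh
# Sketch: print a "multipaths" section for a given WWID.
# print_multipath_stanza is a hypothetical helper name.
print_multipath_stanza() {
    wwid=$1
    cat <<EOF
multipaths {
    multipath {
        wwid $wwid
    }
}
EOF
}

# Possible use on the installer (assumes /dev/sda is one path to the disk):
#   print_multipath_stanza "$(/lib/udev/scsi_id -g -u -d /dev/sda)"
```

The output can be compared against, or pasted into, /etc/multipath.conf in the target system.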

Adjust the configuration items in /etc/multipath.conf according to your needs and environment, especially the disk UUID value in the wwid field.

Return to the virtual terminal and go through the following installer dialogs:

Configure the package manager
Select and install software

and select the configuration items according to your needs and environment.

Select the installer dialog:

Install yaboot on a hard disk

and select /dev/mapper/mpathap1 as the device where the yaboot boot loader should be installed:

+-----------------+ [!!] Install yaboot on a hard disk +------------------+
|                                                                         |
| Yaboot (the Linux boot loader) needs to be installed on a hard disk     |
| partition in order for your system to be bootable. Please choose the    |
| destination partition from among these partitions that have the         |
| bootable flag set.                                                      |
|                                                                         |
| Warning: this will erase all data on the selected partition!            |
|                                                                         |
| Device for boot loader installation:                                    |
|                                                                         |
|     /dev/mapper/mpathap1                                                |
|                                                                         |
|      <Go Back>                                                          |
|                                                                         |
+-------------------------------------------------------------------------+

After the yaboot boot loader has been successfully installed, log into the system via SSH again. Change the device path for the boot partition, to reflect the naming scheme that will be present on the target system once it is running:

~ $ chroot /target /bin/bash
root@ststdebian01:/$ vi /etc/fstab
#/dev/mapper/mpathap2 /boot ext4 defaults 0 2
/dev/mapper/mpatha-part2 /boot ext4 defaults 0 2
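The renaming follows a simple pattern: the installer calls the partitions mpathap1, mpathap2 and so on, while the running system uses mpatha-part1, mpatha-part2. As a rough sketch of that mapping (the function name to_running_name is hypothetical, and the pattern is only derived from the device names seen in this installation):

```shell
#!/bin/sh
# Sketch: translate an installer-style multipath partition name
# (e.g. /dev/mapper/mpathap2) into the name used by the running
# system (e.g. /dev/mapper/mpatha-part2). Names that do not match
# the pattern are printed unchanged.
to_running_name() {
    printf '%s\n' "$1" |
        sed 's/^\(.*mpath[a-z][a-z]*\)p\([0-9][0-9]*\)$/\1-part\2/'
}
```

For example, to_running_name /dev/mapper/mpathap2 prints /dev/mapper/mpatha-part2, while an LVM path such as /dev/mapper/vg00-root is passed through as it is.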

Check the initrd for the inclusion of the multipath tools installed in the previous step:

root@ststdebian01:/$ gzip -dc /boot/initrd.img-3.2.0-4-powerpc64 | cpio -itvf - | grep -i multipath
-rwxr-xr-x 1 root root  18212 Oct 15 05:16 sbin/multipath
-rw-r--r-- 1 root root    392 Dec 16 18:03 etc/multipath.conf
-rw-r--r-- 1 root root    328 Oct 15 05:16 lib/udev/rules.d/60-multipath.rules
drwxr-xr-x 2 root root      0 Dec 16 18:03 lib/multipath
-rw-r--r-- 1 root root   5516 Oct 15 05:16 lib/multipath/libpriohp_sw.so
-rw-r--r-- 1 root root   9620 Oct 15 05:16 lib/multipath/libcheckrdac.so
-rw-r--r-- 1 root root   9632 Oct 15 05:16 lib/multipath/libpriodatacore.so
-rw-r--r-- 1 root root  13816 Oct 15 05:16 lib/multipath/libchecktur.so
-rw-r--r-- 1 root root   9664 Oct 15 05:16 lib/multipath/libcheckdirectio.so
-rw-r--r-- 1 root root   5516 Oct 15 05:16 lib/multipath/libprioemc.so
-rw-r--r-- 1 root root   9576 Oct 15 05:16 lib/multipath/libcheckhp_sw.so
-rw-r--r-- 1 root root   9616 Oct 15 05:16 lib/multipath/libpriohds.so
-rw-r--r-- 1 root root   9672 Oct 15 05:16 lib/multipath/libprioiet.so
-rw-r--r-- 1 root root   5404 Oct 15 05:16 lib/multipath/libprioconst.so
-rw-r--r-- 1 root root   9608 Oct 15 05:16 lib/multipath/libcheckemc_clariion.so
-rw-r--r-- 1 root root   5516 Oct 15 05:16 lib/multipath/libpriordac.so
-rw-r--r-- 1 root root   5468 Oct 15 05:16 lib/multipath/libpriorandom.so
-rw-r--r-- 1 root root   9620 Oct 15 05:16 lib/multipath/libprioontap.so
-rw-r--r-- 1 root root   5488 Oct 15 05:16 lib/multipath/libcheckreadsector0.so
-rw-r--r-- 1 root root   9656 Oct 15 05:16 lib/multipath/libprioweightedpath.so
-rw-r--r-- 1 root root   9692 Oct 15 05:16 lib/multipath/libprioalua.so
-rw-r--r-- 1 root root   9592 Oct 15 05:16 lib/multipath/libcheckcciss_tur.so
-rw-r--r-- 1 root root  43552 Sep 20 06:13 lib/modules/3.2.0-4-powerpc64/kernel/drivers/md/dm-multipath.ko
-rw-r--r-- 1 root root 267620 Oct 15 05:16 lib/libmultipath.so.0
-rwxr-xr-x 1 root root    984 Oct 14 22:25 scripts/local-top/multipath
-rwxr-xr-x 1 root root    624 Oct 14 22:25 scripts/init-top/multipath

If the multipath tools, which were installed in the previous step, are missing, update the initrd to include them:

root@ststdebian01:/$ update-initramfs -u -k all
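The manual inspection of the initrd can also be scripted, which is handy when the check has to be repeated after each update-initramfs run. The following is a minimal sketch, not part of any Debian tool: the function name initrd_has_multipath is made up, and the list of required entries is an assumption based on the listing shown above (the multipath binary, its configuration file and the dm-multipath kernel module).

```shell
#!/bin/sh
# Sketch: read a list of file names contained in an initrd on stdin and
# verify that the multipath pieces are present. Prints "ok" on success,
# or the first missing entry and returns 1.
initrd_has_multipath() {
    listing=$(cat)
    for f in sbin/multipath etc/multipath.conf dm-multipath.ko; do
        # -F: treat the entry as a fixed string, not a regular expression
        if ! printf '%s\n' "$listing" | grep -qF -- "$f"; then
            echo "missing: $f"
            return 1
        fi
    done
    echo "ok"
}

# Possible use inside the chroot:
#   gzip -dc /boot/initrd.img-3.2.0-4-powerpc64 | cpio -it | initrd_has_multipath
```

If the function reports a missing entry, the initrd should be regenerated as described above.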

Check the validity of the yaboot configuration, especially the configuration items marked with “<== !!!”:

root@ststdebian01:/$ vi /etc/yaboot.conf
boot="/dev/mapper/mpathap1"      <== !!!
partition=2
root="/dev/mapper/vg00-root"     <== !!!
timeout=50
install=/usr/lib/yaboot/yaboot
enablecdboot

image=/vmlinux                   <== !!!
    label=Linux
    read-only
    initrd=/initrd.img           <== !!!

image=/vmlinux.old               <== !!!
    label=old
    read-only
    initrd=/initrd.img.old       <== !!!

If changes were made to the yaboot configuration, install the boot loader again:

root@ststdebian01:/$ ybin -v

In order to have the root filesystem on LVM and yaboot still be able to access its necessary configuration, the yaboot.conf has to be placed in a subdirectory “/etc/” on a non-LVM device. See HOWTO-Booting with Yaboot on PowerPC for a detailed explanation, which is also true for the yaboot.conf:

“It's worth noting that yaboot locates the kernel image within a partition's filesystem without regard to where that partition will eventually be mounted within the Linux root filesystem. So, for example, if you've placed a kernel image or symlink at /boot/vmlinux, but /boot is actually a separate partition on your system, then the image path for yaboot will just be image=/vmlinux.”

In this case the kernel and initrd images reside on the “/boot” filesystem, so the path “/etc/yaboot.conf” has to be placed below “/boot”. A symlink pointing from “/etc/yaboot.conf” to “/boot/etc/yaboot.conf” is added for the convenience of tools that expect it still to be in “/etc”:

root@ststdebian01:/$ mkdir /boot/etc
root@ststdebian01:/$ mv -i /etc/yaboot.conf /boot/etc/
root@ststdebian01:/$ ln -s /boot/etc/yaboot.conf /etc/yaboot.conf
root@ststdebian01:/$ ls -al /boot/etc/yaboot.conf /etc/yaboot.conf
-rw-r--r-- 1 root root 547 Dec 16 18:20 /boot/etc/yaboot.conf
lrwxrwxrwx 1 root root  21 Dec 16 18:48 /etc/yaboot.conf -> /boot/etc/yaboot.conf
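The path rule from the HOWTO (yaboot sees paths relative to the partition's own filesystem, not relative to the Linux root) can be illustrated with a few lines of shell. This is only a sketch to make the rule concrete; the function name yaboot_image_path is hypothetical and yaboot itself does no such translation for you:

```shell
#!/bin/sh
# Sketch: given a path as seen in the running system and the mount point
# of the partition it lives on, print the path as yaboot would see it.
yaboot_image_path() {
    path=$1
    mountpoint=$2
    case "$path" in
        "$mountpoint"/*)
            # Strip the mount point prefix: /boot/vmlinux -> /vmlinux
            printf '%s\n' "${path#"$mountpoint"}"
            ;;
        *)
            # Not below the mount point: leave the path unchanged
            printf '%s\n' "$path"
            ;;
    esac
}
```

With /boot as a separate partition, yaboot_image_path /boot/vmlinux /boot prints /vmlinux, and yaboot_image_path /boot/etc/yaboot.conf /boot prints /etc/yaboot.conf, which matches the “image=” entries and the location of the configuration file used here.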

Select the installer dialog:

Finish the installation

After a successful reboot, change the yaboot “boot=” configuration directive (marked with “<== !!!”) to use the device path name present in the now running system:

root@ststdebian01:/$ vi /etc/yaboot.conf
boot="/dev/mapper/mpatha-part1"  <== !!!
partition=2
root="/dev/mapper/vg00-root"
timeout=50
install=/usr/lib/yaboot/yaboot
enablecdboot

image=/vmlinux
    label=Linux
    read-only
    initrd=/initrd.img

image=/vmlinux.old
    label=old
    read-only
    initrd=/initrd.img.old

root@ststdebian01:/$ ybin -v

Check the multipath status of the newly installed, now running system:

root@ststdebian01:~$ multipath -ll
mpatha (360050768019181279800000000000329) dm-0 AIX,VDASD
size=36G features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='round-robin 0' prio=0 status=active
  |- 0:0:1:0 sda 8:0  active ready running
  `- 1:0:1:0 sdb 8:16 active ready running

root@ststdebian01:~$ df -h
Filesystem                Size  Used Avail Use% Mounted on
rootfs                    961M  168M  745M  19% /
udev                       10M     0   10M   0% /dev
tmpfs                     401M  188K  401M   1% /run
/dev/mapper/vg00-root     961M  168M  745M  19% /
tmpfs                     5.0M     0  5.0M   0% /run/lock
tmpfs                     801M     0  801M   0% /run/shm
/dev/mapper/mpatha-part2  473M   28M  421M   7% /boot
/dev/mapper/vg00-home     961M   18M  895M   2% /home
/dev/mapper/vg00-srv       27G  172M   25G   1% /srv
/dev/mapper/vg00-tmp      961M   18M  895M   2% /tmp
/dev/mapper/vg00-usr      3.8G  380M  3.2G  11% /usr
/dev/mapper/vg00-var      1.9G  191M  1.6G  11% /var

root@ststdebian01:~$ mount | grep mapper
/dev/mapper/vg00-root on / type ext4 (rw,relatime,errors=remount-ro,user_xattr,barrier=1,data=ordered)
/dev/mapper/mpatha-part2 on /boot type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)
/dev/mapper/vg00-home on /home type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)
/dev/mapper/vg00-srv on /srv type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)
/dev/mapper/vg00-tmp on /tmp type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)
/dev/mapper/vg00-usr on /usr type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)
/dev/mapper/vg00-var on /var type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)

root@ststdebian01:~$ pvdisplay
  --- Physical volume ---
  PV Name               /dev/mapper/mpatha-part5
  VG Name               vg00
  PV Size               35.51 GiB / not usable 3.00 MiB
  Allocatable           yes (but full)
  PE Size               4.00 MiB
  Total PE              9091
  Free PE               0
  Allocated PE          9091
  PV UUID               gO0PTK-xi9b-H4om-BRgj-rEy2-NSzC-UokjEw
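Since the point of the whole exercise is to have two working paths, it can be useful to check the path count automatically instead of reading the multipath -ll output by eye. The following is a minimal sketch under the assumption that every healthy path shows up as a line containing “active ready”, as in the output above. The function name check_multipath_paths and the message texts are made up for this example:

```shell
#!/bin/sh
# Sketch: count the healthy paths in "multipath -ll" output read from stdin
# and compare the count against the expected number of paths.
check_multipath_paths() {
    expected=$1
    # grep -c prints 0 and exits non-zero when nothing matches
    active=$(grep -c 'active ready' || true)
    if [ "$active" -ge "$expected" ]; then
        echo "OK - $active active paths"
        return 0
    fi
    echo "CRITICAL - only $active of $expected paths active"
    return 2
}

# Possible use on the running system:
#   multipath -ll mpatha | check_multipath_paths 2
```

The return values 0 and 2 were chosen so that the function could later be wrapped into a Nagios check.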

Although it is a rather manual and error-prone process, it is nonetheless possible to install Debian Wheezy with multipathing enabled from the start. Another and probably easier method would be to map the disk through only one VIO server during the installation phase and to add the second mapping as well as the necessary multipath configuration after the installed system has been successfully brought up.

Some of the manual steps above should IMHO be handled automatically by the Debian installer. For example, why the missing packages multipath-tools and multipath-tools-boot aren't installed automatically eludes me. So there seems to be at least some room for improvement.

First tests with the Debian installer of the upcoming “Jessie” release were promising, but the completion of an install fails at the moment due to unresolved package dependencies. But that'll be the subject for future posts …

2013-11-21 // Nagios Monitoring - EMC Centera

Some time ago I wrote a – rather crude – Nagios plugin to monitor EMC Centera object storage or CAS systems. The plugin was targeted at the Gen3 hardware nodes we used back then. Since the initial implementation of the plugin we upgraded our Centera systems to Gen4 hardware nodes. The plugin still works with Gen4 hardware nodes, but unfortunately the newer nodes do not seem to provide certain information like CPU and case temperature values anymore. At the time of implementation there was also a software issue with the CentraStar OS which caused the CLI tools to hang on ending the session (Centera CLI hangs when ends the session with the "quit" command). So instead of using an existing Nagios plugin for the EMC Centera like check_emc_centera.pl, I decided to gather the necessary information via the Centera API with a small application written in C, using function calls from the Centera SDK. After the initial attempts it turned out that gathering the information on the Centera can be quite a time-consuming process. In order to address this issue and keep the Nagios system from waiting a long time for the results, I decided on an asynchronous setup, where:

a recurring cron job for each Centera system calls the wrapper script get_centera_info.sh, which uses the binary /opt/Centera/bin/GetInfo to gather the necessary information via the Centera API and writes the results into a file.

the Nagios plugin checks the file to be up-to-date or issues a warning if it isn't.

the Nagios plugin reads and evaluates the different sections of the result file according to the defined Nagios checks.
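The freshness check in the second step is what keeps this asynchronous design honest: if the cron job silently stops working, the plugin must not keep reporting old values. The following is a rough shell sketch of such a check, not the code used in check_centera.pl. The function name file_is_fresh and the maximum age are assumptions, and stat -c %Y as well as date +%s require the GNU tools:

```shell
#!/bin/sh
# Sketch: succeed if the given file exists and was modified at most
# max_age seconds ago.
file_is_fresh() {
    file=$1
    max_age=$2
    [ -f "$file" ] || return 1
    now=$(date +%s)
    mtime=$(stat -c %Y "$file") || return 1   # modification time, epoch seconds
    [ $((now - mtime)) -le "$max_age" ]
}

# Possible use with a 10 minute cron interval, allowing one missed run:
#   file_is_fresh /tmp/centera1.xml 1200 || echo "WARNING - result file is stale"
```

The maximum age should be somewhat larger than the cron interval, so that a single slow run does not immediately trigger a warning.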

In order to run the wrapper script get_centera_info.sh and GetInfo binary, you need to have the Centera SDK installed on the Nagios system. A network connection from the Nagios system to the Centera system on port TCP/3218 must also be allowed.

Since the Nagios server in my setup runs on Debian/PPC on an IBM Power LPAR and there is no native Linux/PPC version of the Centera SDK, I had to run those tools through the IBM PowerVM LX86 emulator. If the plugin is run in an x86 environment, the variables POWERVM_PATH and RUNX86 have to be set to an empty value.

The whole setup looks like this:

Verify the user anonymous on the Centera has the Monitor role, which is the default. Log in to the Centera CLI and issue the following commands:

Config$ show profile detail anonymous
Centera Profile Detail Report
------------------------------------------------------
Profile Name:                  anonymous
Profile Enabled:               yes
[...]
Cluster Management Roles:
  Accesscontrol Role:          off
  Audit Role:                  off
  Compliance Role:             off
  Configuration Role:          off
  Migration Role:              off
  Monitor Role:                on
  Replication Role:            off

Optional: Enable SNMP traps to be sent to the Nagios system from the Centera. This requires SNMPD and SNMPTT to be already set up on the Nagios system. Log in to the Centera CLI and issue the following commands:

Config$ set snmp
Enable SNMP (yes, no) [yes]:
Management station [<IP of the Nagios system>:162]:
Community name [<SNMPDs community string>]:
Heartbeat trap interval [30 minutes]:
Issue the command? (yes, no) [no]: yes

Config$ show snmp
SNMP enabled:            Enabled
Management Station:      <IP of the Nagios system>:162
Community name:          <SNMPDs community string>
Heartbeat trap interval: 30 minutes

Verify the port UDP/162 on the Nagios system can be reached from the Centera system.

Install the Centera SDK – only the subdirectories lib and include are actually needed – on the Nagios system, in this example in /opt/Centera. Verify the port TCP/3218 on the Centera system can be reached from the Nagios system.

Optional: Download the GetInfo source, place it in the source directory of the Centera SDK and compile the GetInfo binary against the Centera API:

$ mkdir /opt/Centera/src
$ mv -i getinfo.c /opt/Centera/src/GetInfo.c
$ cd /opt/Centera/src/
$ gcc -Wall -DPOSIX -I /opt/Centera/include -Wl,-rpath /opt/Centera/lib \
    -L/opt/Centera/lib GetInfo.c -lFPLibrary32 -lFPXML32 -lFPStreams32 \
    -lFPUtils32 -lFPParser32 -lFPCore32 -o GetInfo
$ mkdir /opt/Centera/bin
$ mv -i GetInfo /opt/Centera/bin/
$ chmod 755 /opt/Centera/bin/GetInfo

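Download the GetInfo binary and place it in the binary directory of the Centera SDK. Note that bzip2 -d getinfo.bz2 produces a file named getinfo, which is renamed to GetInfo while moving it:

$ mkdir /opt/Centera/bin
$ bzip2 -d getinfo.bz2
$ mv -i getinfo /opt/Centera/bin/GetInfo
$ chmod 755 /opt/Centera/bin/GetInfo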

Download the Nagios plugin check_centera.pl and the cron job wrapper script get_centera_info.sh and place them in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

$ mv -i check_centera.pl get_centera_info.sh /usr/lib/nagios/plugins/
$ chmod 755 /usr/lib/nagios/plugins/check_centera.pl
$ chmod 755 /usr/lib/nagios/plugins/get_centera_info.sh

Adjust the plugin settings according to your environment. Edit the following variable assignments in get_centera_info.sh:

POWERVM_PATH=/srv/powervm-lx86/i386
RUNX86=/usr/local/bin/runx86

Perform a manual test run of the wrapper script get_centera_info.sh against the primary and the secondary IP address of the Centera system. In this example the IP addresses are 10.0.0.1 and 10.0.0.2 and the hostname of the Centera system is centera1:

$ /usr/lib/nagios/plugins/get_centera_info.sh -p 10.0.0.1 -s 10.0.0.2 -f /tmp/centera1.xml
$ ls -al /tmp/centera1.xml
-rw-r--r-- 1 root root 478665 Nov 21 21:21 /tmp/centera1.xml
$ less /tmp/centera1.xml
<?xml version="1.0"?>
<health>
  <reportIdentification type="health" formatVersion="1.7.1" formatDTD="health-1.7.1.dtd" sequenceNumber="2994" reportInterval="86400000" creationDateTime="1385025078472"/>
  <cluster>
    [...]
  </cluster>
</health>

If the results file was successfully written, define a cron job to periodically update the necessary information from each Centera system:

0,10,20,30,40,50 * * * * /usr/lib/nagios/plugins/get_centera_info.sh -p 10.0.0.1 -s 10.0.0.2 -f /tmp/centera1.xml
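One thing to keep in mind with this setup is that the Nagios plugin may read the result file at any time, possibly while the cron job is still writing it. I have not verified how get_centera_info.sh handles this internally, so the following is only a sketch of a defensive wrapper under that assumption: it lets the command write to a temporary file and renames it into place only after a successful run. The function name update_result_file is hypothetical; the only thing taken from the wrapper script is its -f option for the output file:

```shell
#!/bin/sh
# Sketch: run a command that accepts "-f <file>" for its output, let it
# write to a temporary file and move the result into place only if the
# command succeeded and produced a non-empty file.
update_result_file() {
    target=$1
    shift
    tmp=$(mktemp "${target}.XXXXXX") || return 1
    if "$@" -f "$tmp" && [ -s "$tmp" ]; then
        chmod 644 "$tmp"        # mktemp creates the file with mode 600
        mv -f "$tmp" "$target"  # rename is atomic on the same filesystem
    else
        rm -f "$tmp"
        return 1
    fi
}

# Possible use from the cron job:
#   update_result_file /tmp/centera1.xml \
#       /usr/lib/nagios/plugins/get_centera_info.sh -p 10.0.0.1 -s 10.0.0.2
```

If a run fails, the previous result file stays untouched and simply ages until the plugin's up-to-date check issues its warning.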

Define the following Nagios commands. In this example this is done in the file /etc/nagios-plugins/config/check_centera.cfg:

# check Centera case temperature
define command {
    command_name    check_centera_casetemp
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C caseTemp -w $ARG1$ -c $ARG2$
}
# check Centera CPU temperature
define command {
    command_name    check_centera_cputemp
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C cpuTemp -w $ARG1$ -c $ARG2$
}
# check Centera storage capacity
define command {
    command_name    check_centera_capacity
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C capacity -w $ARG1$ -c $ARG2$
}
# check Centera drive status
define command {
    command_name    check_centera_drive
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C drive
}
# check Centera node status
define command {
    command_name    check_centera_node
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C node
}
# check Centera object capacity
define command {
    command_name    check_centera_objects
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C objects -w $ARG1$ -c $ARG2$
}
# check Centera power status
define command {
    command_name    check_centera_power
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C power
}
# check Centera stealthCorruption
define command {
    command_name    check_centera_sc
    command_line    $USER1$/check_centera.pl -f /tmp/$HOSTNAME$.xml -C stealthCorruption -w $ARG1$ -c $ARG2$
}

Define a group of services in your Nagios configuration to be checked for each Centera system:

# check case temperature
define service {
    use                 generic-service-pnp
    hostgroup_name      centera
    service_description Check_case_temperature
    check_command       check_centera_casetemp!35!40
}
# check CPU temperature
define service {
    use                 generic-service-pnp
    hostgroup_name      centera
    service_description Check_CPU_temperature
    check_command       check_centera_cputemp!50!55
}
# check storage capacity
define service {
    use                 generic-service-pnp
    hostgroup_name      centera
    service_description Check_storage_capacity
    check_command       check_centera_capacity!100G!200G
}
# check drive status
define service {
    use                 generic-service
    hostgroup_name      centera
    service_description Check_drive_status
    check_command       check_centera_drive
}
# check node status
define service {
    use                 generic-service
    hostgroup_name      centera
    service_description Check_node_status
    check_command       check_centera_node
}
# check object capacity
define service {
    use                 generic-service-pnp
    hostgroup_name      centera
    service_description Check_object_capacity
    check_command       check_centera_objects!10%!5%
}
# check power status
define service {
    use                 generic-service
    hostgroup_name      centera
    service_description Check_power_status
    check_command       check_centera_power
}
# check stealthCorruption
define service {
    use                 generic-service
    hostgroup_name      centera
    service_description Check_stealth_corruptions
    check_command       check_centera_sc!50!100
}

Replace generic-service with your Nagios service template. Replace generic-service-pnp with your Nagios service template that has performance data processing enabled.

Define hosts in your Nagios configuration for each IP address of the Centera system. In this example they are named centera1-pri and centera1-sec:

define host {
    use         disk
    host_name   centera1-pri
    alias       Centera 1 (Primary)
    address     10.0.0.1
    parents     parent_lan
}
define host {
    use         disk
    host_name   centera1-sec
    alias       Centera 1 (Secondary)
    address     10.0.0.2
    parents     parent_lan
}

Replace disk with your Nagios host template for storage devices. Adjust the address and parents parameters according to your environment.

Define a host in your Nagios configuration for each Centera system. In this example it is named centera1:

define host {
    use         disk
    host_name   centera1
    alias       Centera 1
    address     10.0.0.1
    parents     centera1-pri, centera1-sec
}

Replace disk with your Nagios host template for storage devices. Adjust the address parameter to the IP of the previously defined host centera1-pri and set the two previously defined hosts centera1-pri and centera1-sec as parents.

The three-host configuration is necessary, since the services are checked against the main system centera1, but the main system can be reached via both the primary and the secondary IP address. Also, the optional SNMP traps originate either from the primary or from the secondary address, for which there must be a host entity to check the SNMP traps against.

Define a hostgroup in your Nagios configuration for all Centera systems. In this example it is named centera. The above checks are run against each member of the hostgroup:

define hostgroup {
    hostgroup_name  centera
    alias           EMC Centera Devices
    members         centera1
}

Define a second hostgroup in your Nagios configuration for the primary and secondary IP addresses of all Centera systems. In this example it is named centera-node and contains the hosts centera1-pri and centera1-sec:

define hostgroup {
    hostgroup_name  centera-node
    alias           EMC Centera Devices
    members         centera1-pri, centera1-sec
}

Run a configuration check and if successful reload the Nagios process:

$ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg $ /etc/init.d/nagios3 reload

The new hosts and services should soon show up in the Nagios web interface.
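If you reload Nagios often while tuning the configuration, the two commands above can be chained so that a reload only happens after a successful check. The following is just a sketch: the function name `check_and_reload` and the three variables are made up for this example, and the default paths are simply the ones used in this post.

```shell
#!/bin/sh
# Hypothetical helper: reload Nagios only if the configuration check passes.
# The defaults are the paths used in this post; override them as needed.
: "${NAGIOS_BIN:=/usr/sbin/nagios3}"
: "${NAGIOS_CFG:=/etc/nagios3/nagios.cfg}"
: "${NAGIOS_INIT:=/etc/init.d/nagios3}"

check_and_reload() {
    # "nagios -v <cfg>" exits non-zero when the pre-flight check fails
    if "$NAGIOS_BIN" -v "$NAGIOS_CFG" >/dev/null; then
        "$NAGIOS_INIT" reload
    else
        echo "configuration check failed, not reloading" >&2
        return 1
    fi
}
```

Source the snippet and call `check_and_reload` as a user that is allowed to run the init script.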

If the optional step number 2 in the above list was done, SNMPTT also needs to be configured to be able to understand the incoming SNMP traps from Centera systems. This can be achieved by the following steps:

Convert the EMC Centera SNMP MIB definitions in `emc-centera.mib` into a format that SNMPTT can understand.

    $ /opt/snmptt/snmpttconvertmib --in=MIB/emc-centera.mib --out=/opt/snmptt/conf/snmptt.conf.emc-centera
    ...
    Done
    Total translations: 2
    Successful translations: 2
    Failed translations: 0

Edit the trap severity according to your requirements, e.g.:

    $ vim /opt/snmptt/conf/snmptt.conf.emc-centera
    ...
    EVENT trapNotification .1.3.6.1.4.1.1139.5.0.1 "Status Events" Normal
    ...
    EVENT trapAlarmNotification .1.3.6.1.4.1.1139.5.0.2 "Status Events" Critical
    ...
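If you prefer not to edit the file by hand, the severity change can also be scripted. This is a minimal sketch, assuming the `EVENT <name> <OID> "<category>" <severity>` line layout shown above; the function name `set_trap_severity` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: set_trap_severity FILE EVENT SEVERITY
# Rewrites the trailing severity word of the matching EVENT line.
set_trap_severity() {
    file=$1 event=$2 severity=$3
    # Keep everything up to the closing quote of the category,
    # replace only the severity word at the end of the line.
    sed "s/^\(EVENT ${event} .*\"\) *[A-Za-z]*\$/\1 ${severity}/" "$file" > "${file}.tmp" &&
        mv "${file}.tmp" "$file"
}
```

For example, `set_trap_severity /opt/snmptt/conf/snmptt.conf.emc-centera trapAlarmNotification Critical` would only touch the `trapAlarmNotification` line, since the event name is matched together with the space that follows it.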

Add the new configuration file to be included in the global SNMPTT configuration and restart the SNMPTT daemon:

    $ vim /opt/snmptt/snmptt.ini
    ...
    [TrapFiles]
    snmptt_conf_files = <<END
    ...
    /opt/snmptt/conf/snmptt.conf.emc-centera
    ...
    END

    $ /etc/init.d/snmptt reload

Download the Nagios plugin check_snmp_traps.sh and the Nagios plugin check_snmp_traps_heartbeat.sh and place them in the plugins directory of your Nagios system, in this example `/usr/lib/nagios/plugins/`:

    $ mv -i check_snmp_traps.sh check_snmp_traps_heartbeat.sh /usr/lib/nagios/plugins/
    $ chmod 755 /usr/lib/nagios/plugins/check_snmp_traps.sh
    $ chmod 755 /usr/lib/nagios/plugins/check_snmp_traps_heartbeat.sh

Define the following Nagios commands to check for SNMP traps in the SNMPTT database. In this example this is done in the file `/etc/nagios-plugins/config/check_snmp_traps.cfg`:

    # check for snmp traps
    define command {
        command_name    check_snmp_traps
        command_line    $USER1$/check_snmp_traps.sh -H $HOSTNAME$:$HOSTADDRESS$ -u <user> -p <pass> -d <snmptt_db>
    }
    # check for EMC Centera heartbeat snmp traps
    define command {
        command_name    check_snmp_traps_heartbeat
        command_line    $USER1$/check_snmp_traps_heartbeat.sh -H $HOSTNAME$ -S $ARG1$ -u <user> -p <pass> -d <snmptt_db>
    }

Replace `user`, `pass` and `snmptt_db` with values suitable for your SNMPTT database environment.

Add another service in your Nagios configuration to be checked for each Centera system:

    # check heartbeat snmptraps
    define service {
        use                     generic-service
        hostgroup_name          centera
        service_description     Check_SNMP_heartbeat_traps
        check_command           check_snmp_traps_heartbeat!3630
    }

Add another service in your Nagios configuration to be checked for each IP address of the Centera system:

    # check snmptraps
    define service {
        use                     generic-service
        hostgroup_name          centera-node
        service_description     Check_SNMP_traps
        check_command           check_snmp_traps
    }

Optional: Define a serviceextinfo to display a folder icon next to the `Check_SNMP_traps` service check for each Centera system. This icon provides a direct link to the SNMPTT web interface with a filter for the selected host:

    define serviceextinfo {
        hostgroup_name          centera-node
        service_description     Check_SNMP_traps
        notes                   SNMP Alerts
        #notes_url              http://<hostname>/nagios3/nagtrap/index.php?hostname=$HOSTNAME$
        #notes_url              http://<hostname>/nagios3/nsti/index.php?perpage=100&hostname=$HOSTNAME$
    }

Uncomment the `notes_url` depending on which web interface (nagtrap or nsti) is used. Replace `hostname` with the FQDN or IP address of the server running the web interface.

Run a configuration check and if successful reload the Nagios process:

    $ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
    $ /etc/init.d/nagios3 reload

Optional: If you're running PNP4Nagios v0.6 or later to graph Nagios performance data, you can use the `check_centera_capacity.php`, `check_centera_casetemp.php`, `check_centera_cputemp.php` and `check_centera_objects.php` PNP4Nagios templates to beautify the graphs. Download the PNP4Nagios templates check_centera_capacity.php, check_centera_casetemp.php, check_centera_cputemp.php and check_centera_objects.php and place them in the PNP4Nagios template directory, in this example `/usr/share/pnp4nagios/html/templates/`:

    $ mv -i check_centera_capacity.php check_centera_casetemp.php check_centera_cputemp.php \
        check_centera_objects.php /usr/share/pnp4nagios/html/templates/
    $ chmod 644 /usr/share/pnp4nagios/html/templates/check_centera_capacity.php
    $ chmod 644 /usr/share/pnp4nagios/html/templates/check_centera_casetemp.php
    $ chmod 644 /usr/share/pnp4nagios/html/templates/check_centera_cputemp.php
    $ chmod 644 /usr/share/pnp4nagios/html/templates/check_centera_objects.php

The following image shows an example of what the PNP4Nagios graphs look like for an EMC Centera system:

All done, you should now have a complete Nagios-based monitoring solution for your EMC Centera system.

A note on the side: The result file(s) and their XML-formatted content are definitely worth a look. Besides the rudimentary information extracted for the Nagios health checks described above, there is an abundance of other promising information in there.

2013-11-15 // Cacti Monitoring Templates for TMS RamSan and IBM FlashSystem

This is another update to the previous posts about Cacti Monitoring Templates and Nagios Plugin for TMS RamSan-630 and Cacti Monitoring Templates for TMS RamSan-630 and RamSan-810. Three major changes were done in this version of the Cacti templates:

After a report from Mike R. that the templates also work for the TMS RamSan-720, i decided to replace the string “TMS RamSan-630”, which was used in several places, with the more general string “TMS RamSan”.

Mike R. – who is using TMS RamSan-720 systems with InfiniBand host interfaces – was also kind enough to share his adaptations to the Cacti templates for monitoring the InfiniBand interfaces. Those adaptations have now been merged and incorporated into the Cacti templates in order to allow monitoring of pure InfiniBand-based and mixed (FC and IB) systems.

After getting in touch with TMS – and later on IBM – over the following questions on my side:

[…]

While adapting our Cacti templates to include the new SNMP counters provided in recent firmware versions i noticed some strange behaviour when comparing the SNMP counter values with the live values via the WebGUI:

- The SNMP counters fcCacheHit, fcCacheMiss and fcCacheLookup seem always to be zero (see example 1 below).

- The SNMP counter fcRXFrames seems to always stay at the same value (see example 2 below) although the WebGUI shows it increasing. Possibly a counter overflow?

- The SNMP counters fcWriteSample* seem always to be zero (see example 3 below) although the WebGUI shows them increasing. The fcReadSample* counters don’t show this behaviour.

[…]

Also, is there some kind of roadmap for other performance counters currently only available via the WebGUI to become accessible via SNMP?

[…]

i got the following response from Brian Groff over at “IBM TMS RamSan Support”:

Regarding your inquiry to the SNMP values, here are our findings:

1. The SNMP counters fcCacheHit, fcCacheMiss and fcCacheLookup are deprecated in the new Flash systems. The cache does not exist in RamSan-6xx and later models.

2. The SNMP counter fcRXFrames is intended to remain static and historically used for development purposes. Please do not use this counter for performance or usage monitoring.

3. The SNMP counters fcWriteSample and fcReadSample are also historically used for development purposes and not intended for performance or usage monitoring.

Your best approach to performance monitoring is using IOPS and bandwidth counters on a system, FC interface card, and FC interface port basis.

The list of counters in future products has not been released. We expect them to be similar to the RamSan-8xx model and primarily used to monitor IOPS and bandwidth.

Based on this information i decided to remove the counters `fcCacheHit`, `fcCacheMiss` and `fcCacheLookup` from the templates as well as their InfiniBand counterparts `ibCacheHit`, `ibCacheMiss` and `ibCacheLookup`. I decided to keep `fcRXFrames` and `fcWriteSample*` in the templates, since their counterparts `fcTXFrames` and `fcReadSample*` seem to produce valid values. This way the users of the Cacti templates can – based on the information provided above – decide on their own whether to graph those counters or not.

To be honest, the contact with TMS/IBM was a bit disappointing and less than enthusiastic. On an otherwise really great product, i'd wish for a bit more open-mindedness when it comes to 3rd party monitoring tools through an industry standard like the SNMP protocol. Once again i got the feeling that SNMP was the ugly stepchild, which was only implemented to be able to check it off the “got to have” list in the first place. True, inside the WebGUI all the metrics are there, but that's another one of those beloved isolated monitoring applications right there. Is it really that hard to expose the already collected metrics from the WebGUI via SNMP to the outside?

The Nagios plugins and the updated Cacti templates can be downloaded here: Nagios Plugin and Cacti Templates.

2013-11-11 // Nagios Monitoring - IBM Power Systems and HMC

We use several IBM Power Systems servers in our datacenters to run mission critical application and database services. Some time ago i wrote a – rather crude – Nagios plugin to monitor the IBM Power Systems hardware via the Hardware Management Console (HMC) appliance, as well as the HMC itself. In order to run the Nagios plugin, you need to have “Remote Command Execution” via SSH activated on the HMC appliances. Also, a network connection from the Nagios system to the HMC appliances on port TCP/22 must be allowed.

The whole setup for monitoring IBM Power Systems servers and HMC appliances looks like this:

Enable remote command execution on the HMC. Login to the HMC WebGUI and navigate to:

    -> HMC Management
    -> Administration
    -> Remote Command Execution
    -> Check selection: "Enable remote command execution using the ssh facility"
    -> OK

Verify the port TCP/22 on the HMC appliance can be reached from the Nagios system.
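One way to do the reachability check from the Nagios system is a small wrapper around `nc`. This is only a sketch: it assumes a netcat variant that supports the `-z` (scan only) and `-w` (timeout) options, and the function name `port_open` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: port_open HOST PORT [TIMEOUT_SECONDS]
# Returns 0 if a TCP connection to HOST:PORT can be established.
port_open() {
    # -z: connect without sending data, -w: give up after the timeout
    nc -z -w "${3:-5}" "$1" "$2" >/dev/null 2>&1
}
```

A call like `port_open hmc 22 && echo reachable` would then confirm that SSH on the HMC can be reached before going on with the key setup.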

Create an SSH private/public key pair on the Nagios system to authenticate the monitoring user against the HMC appliance:

    $ mkdir -p /etc/nagios3/.ssh/
    $ chown -R nagios:nagios /etc/nagios3/.ssh/
    $ chmod 700 /etc/nagios3/.ssh/
    $ cd /etc/nagios3/.ssh/
    $ ssh-keygen -t rsa -b 2048 -f id_rsa_hmc
    Generating public/private rsa key pair.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in id_rsa_hmc.
    Your public key has been saved in id_rsa_hmc.pub.
    The key fingerprint is:
    xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx user@host
    $ chown -R nagios:nagios /etc/nagios3/.ssh/id_rsa_hmc*

Prepare an SSH authorized keys file and transfer it to the HMC.

Optional: Create a separate user on the HMC for monitoring purposes. The new user needs to have permission to run the HMC commands `lsled`, `lssvcevents`, `lssyscfg` and `monhmc`. If a separate user should be used, replace `hscroot` in the following text with the name of the new user.

Optional: If there is already an SSH authorized keys file set up on the HMC, be sure to retrieve it first so it doesn't get overwritten:

    $ cd /tmp
    $ scp -r hscroot@hmc:~/.ssh /tmp/
    Password:
    authorized_keys2                              100%  600     0.6KB/s   00:00

Insert the SSH public key created above into an SSH authorized keys file and push it back to the HMC:

    $ echo -n 'from="<IP of your Nagios system>",no-agent-forwarding,no-port-forwarding,no-X11-forwarding ' \
        >> /tmp/.ssh/authorized_keys2
    $ cat /etc/nagios3/.ssh/id_rsa_hmc.pub >> /tmp/.ssh/authorized_keys2
    $ scp -r /tmp/.ssh hscroot@hmc:~/
    Password:
    authorized_keys2                              100% 1079     1.1KB/s   00:00
    $ rm -r /tmp/.ssh
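The `echo -n` and `cat` pair above produces a single line in the authorized keys file: the restriction options, one space, then the public key. The same line can be built in one go, which makes it easier to see what ends up on the HMC. This is a sketch only; the function name `hmc_authorized_key` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: hmc_authorized_key NAGIOS_IP PUBKEY_FILE
# Prints one authorized_keys line that restricts the key to logins from
# NAGIOS_IP and disables agent, port and X11 forwarding.
hmc_authorized_key() {
    printf 'from="%s",no-agent-forwarding,no-port-forwarding,no-X11-forwarding %s\n' \
        "$1" "$(cat "$2")"
}
```

Used as `hmc_authorized_key <IP of your Nagios system> /etc/nagios3/.ssh/id_rsa_hmc.pub >> /tmp/.ssh/authorized_keys2`, it appends the same entry as the two commands above.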

Add the SSH host public key from the HMC appliance:

    HMC$ cat /etc/ssh/ssh_host_rsa_key.pub
    ssh-rsa AAAAB3N...[cut]...jxFAz+4O1X root@hmc

to the SSH `ssh_known_hosts` file of the Nagios system:

    $ vi /etc/ssh/ssh_known_hosts
    hmc,FQDN,<IP of your HMC> ssh-rsa AAAAB3N...[cut]...jxFAz+4O1X

Verify the login on the HMC appliance is possible from the Nagios system with the SSH public key created above:

    $ ssh -i /etc/nagios3/.ssh/id_rsa_hmc hscroot@hmc 'lshmc -V'
    "version= Version: 7
    Release: 7.7.0
    Service Pack: 2
    HMC Build level 20130730.1
    MH01373: Fix for HMC V7R7.7.0 SP2 (08-06-2013)
    ","base_version=V7R7.7.0"

Download the Nagios plugin check_hmc.sh and place it in the plugins directory of your Nagios system, in this example `/usr/lib/nagios/plugins/`:

    $ mv -i check_hmc.sh /usr/lib/nagios/plugins/
    $ chmod 755 /usr/lib/nagios/plugins/check_hmc.sh

Define the following Nagios commands. In this example this is done in the file