2015-05-11 // IBM SVC and experiences with Real-time Compression - VMware and MS SQL Server

Following up on the previous article (IBM SVC and experiences with Real-time Compression) about our experiences with Real-time Compression (RTC) in an IBM SVC environment, we did some further testing with regard to RTC being used in a VMware environment hosting solely database instances of Microsoft SQL Server.

With the separation of all of our Microsoft SQL Server instances into their own VMware ESX cluster, which had to be done due to licensing constraints, an opportunity presented itself to take a more detailed look at how well the SVC's RTC feature would perform on this particular type of workload.

Usually, the contents of a VMware datastore are by their very nature opaque to the lower levels of the storage hierarchy, like the SVC which is providing the block storage for the datastores. While this is an advantage on the one hand, this lack of transparency on the other hand also prevents the SVC from providing a fine-grained, per-VM set of its usual statistics (e.g. the actual amount of storage used with thin-provisioning or RTC enabled, the amount of storage in the individual storage tier classes, etc.) and performance metrics. By not only moving the compute part of the VMs over to the new VMware ESX hosts in a separate VMware ESX cluster, but also individually moving the storage part of one VM after the other onto new and empty datastores, which in turn were backed at the SVC by newly created, empty VDisks with RTC enabled, we were able to measure the impact of RTC on a per-VM level. It was a bit of tedious work, and the precision of the numbers has to be taken with a grain of salt because I/O was concurrently happening on the database and operating system level, probably causing additional extents to be allocated and thus some inaccuracy to a small extent.

The environment consists of the following four datastores, four VDisks and a total number of 43 VMs running various versions of Microsoft SQL Server:

| VM Datastore | SVC VDisk | Datastore Size (GB) | Number of VMs |
|---|---|---|---|
| san-ssb-sql-oben-35C | sas-4096G-35C | 4096 | 16 |
| san-ssb-sql-unten-360 | sas-4096G-360 | 4096 | 9 |
| san-ssb-sql-unten-361 | sas-2048G-361 | 2048 | 8 |
| san-ssb-sql-oben-362 | sas-2048G-362 | 2048 | 10 |
| Sum | | 12288 | 43 |

Usually the VMs are configured with one or two disks or drives for the OS and one or more disks or drives dedicated to the database contents. In some legacy cases, specifically VM #7, #10 and #12 on datastore “san-ssb-sql-oben-35C”, VM #6 on datastore “san-ssb-sql-unten-360” and VM #8 on datastore “san-ssb-sql-unten-361”, there is also a local application instance – in our case different versions of SAP – installed on the system. The operating systems used are Windows Server 2003, 2008R2 and 2012R2.

For each of the four datastores or VDisks, the results of the tests are shown in the following four sections. Each section consists of a table representation of the datastore as it was being populated with VMs in chronological order. The columns of the tables are pretty self-explanatory, with the most interesting columns being “Rel. Compressed Size”, “Rel. Compression Saving” and “Compression Ratio”. If JavaScript is enabled in your browser, click on a table's column header to sort by that particular column. Each section also contains a graph of the absolute allocation values (table columns “VM Provisioned”, “VM Used” and “SVC Used”) per VM and a graph of the relative allocation values (values from the table column “SVC Delta Used” divided by values from the table column “VM Used” in %) per VM. The latter ones also feature the “Compression Ratio” metric, which is graphed against a second y-axis on the right-hand side of the graphs.
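Judging from the values in the tables below, the three relative columns appear to be derived from just two measured values per VM: “VM Used” and “SVC Delta Used”. The following is a minimal sketch of that arithmetic, not the tooling that was actually used; the function and key names are made up for illustration.

```python
def rtc_metrics(vm_used_gb: float, svc_delta_used_gb: float) -> dict:
    """Derive the relative metrics for one VM (hypothetical helper)."""
    # Share of the VMware-used space that is physically stored on the SVC.
    rel_compressed_size = svc_delta_used_gb / vm_used_gb * 100.0
    return {
        "rel_compressed_size_pct": round(rel_compressed_size, 2),
        "rel_compression_saving_pct": round(100.0 - rel_compressed_size, 2),
        "compression_ratio": round(vm_used_gb / svc_delta_used_gb, 2),
    }


# VM "Number 1" on datastore san-ssb-sql-oben-35C:
# 44.00 GB used in VMware, 20.10 GB newly allocated on the SVC.
vm1 = rtc_metrics(44.00, 20.10)
print(vm1)
```

For this VM the sketch reproduces the table row (45.68%, 54.32% and a ratio of 2.19).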

Datastore: san-ssb-sql-oben-35C

VDisk: sas-4096G-35C

| VM | VM Provisioned (GB) | VM Used (GB) | SVC Used (GB) | SVC Delta Used (GB) | Rel. Compressed Size (%) | Rel. Compression Saving (%) | Compression Ratio | SQL Server Version | Comment |
|---|---|---|---|---|---|---|---|---|---|
| Empty Datastore | – | – | 6.81 MB | 6.81 MB | – | – | – | – | |
| Number 1 | 134.11 | 44.00 | 20.78 | 20.10 | 45.68 | 54.32 | 2.19 | 10.50.4000.0 | |
| Number 2 | 144.11 | 55.04 | 48.45 | 28.35 | 51.51 | 48.49 | 1.94 | 11.0.5058.0 | |
| Number 3 | 94.18 | 49.79 | 75.88 | 27.43 | 55.09 | 44.91 | 1.82 | 11.0.5058.0 | |
| – | – | – | 76.30 | 0.42 | – | – | – | – | |
| Number 4 | 454.11 | 52.22 | 92.47 | 16.17 | 30.97 | 69.03 | 3.23 | 10.50.4000.0 | |
| Number 5 | 456.11 | 391.14 | 337.74 | 245.27 | 62.71 | 37.29 | 1.59 | 10.50.4000.0 | SharePoint with file uploads |
| Number 6 | 238.14 | 156.29 | 450.89 | 113.15 | 72.40 | 27.60 | 1.38 | 10.50.6000.34 | HR Applicant Management |
| Number 7 | 488.11 | 304.32 | 660.05 | 209.16 | 68.73 | 31.27 | 1.45 | 10.50.4000.0 | SQL Page Compression |
| Number 8 | 348.09 | 259.32 | 703.47 | 43.42 | 16.74 | 83.26 | 5.97 | 9.00.4035.00 | |
| Number 9 | 185.24 | 149.33 | 744.68 | 41.21 | 27.60 | 72.40 | 3.62 | 10.50.4000.0 | |
| Number 10 | 292.12 | 104.31 | 804.14 | 59.46 | 57.00 | 43.00 | 1.75 | 11.0.3349.0 | SQL Page Compression |
| Number 11 | 214.11 | 148.73 | 882.44 | 78.30 | 52.65 | 47.35 | 1.90 | 10.50.2500.0 | |
| Number 12 | 496.09 | 463.15 | 993.53 | 111.09 | 23.99 | 76.01 | 4.17 | 9.00.3228.00 | |
| Number 13 | 202.12 | 176.96 | 1058.76 | 65.23 | 36.86 | 63.14 | 2.71 | 9.00.4035.00 | |
| Number 14 | 442.41 | 363.83 | 1200.11 | 141.35 | 38.85 | 61.15 | 2.57 | 11.0.3412.0 | SQL Page Compression |
| Number 15 | 395.38 | 374.78 | 1300.00 | 97.96 | 26.14 | 73.86 | 3.83 | 11.0.3412.0 | SQL Page Compression |
| Number 16 | 739.31 | 464.30 | 1520.00 | 220.00 | 47.38 | 52.62 | 2.11 | 11.0.3412.0 | SQL Page Compression |
| Sum or Average | 5189.63 | 3513.51 | 1497.55 | | 42.10 | 57.90 | 2.38 | | |

Datastore: san-ssb-sql-unten-360

VDisk: sas-4096G-360

| VM | VM Provisioned (GB) | VM Used (GB) | SVC Used (GB) | SVC Delta Used (GB) | Rel. Compressed Size (%) | Rel. Compression Saving (%) | Compression Ratio | SQL Server Version | Comment |
|---|---|---|---|---|---|---|---|---|---|
| Empty Datastore | – | – | 5.47 MB | 5.47 MB | – | – | – | – | |
| Number 1 | 377.68 | 241.77 | 98.13 | 97.58 | 40.36 | 59.64 | 2.48 | 11.0.3412.0 | SQL Page Compression |
| Number 2 | 439.29 | 256.54 | 198.76 | 100.63 | 39.23 | 60.77 | 2.55 | 11.0.3412.0 | SQL Page Compression |
| Number 3 | 776.76 | 507.24 | 448.04 | 249.28 | 49.14 | 50.86 | 2.03 | 11.0.3412.0 | SQL Page Compression |
| Number 4 | 195.21 | 154.10 | 533.52 | 85.48 | 55.47 | 44.53 | 1.80 | 11.0.5058.0 | |
| Number 5 | 34.11 | 23.91 | 546.66 | 13.14 | 54.96 | 45.04 | 1.82 | 9.00.4035.00 | |
| Number 6 | 497.11 | 394.46 | 718.35 | 171.69 | 43.53 | 56.47 | 2.30 | 10.50.1702.0 | SQL Page Compression |
| Number 7 | 219.91 | 53.87 | 740.20 | 21.85 | 40.56 | 59.44 | 2.47 | 11.0.3000.0 | |
| Number 8 | 276.11 | 238.67 | 850.77 | 110.57 | 46.33 | 53.67 | 2.16 | 10.50.2500.0 | |
| Number 9 | 228.11 | 188.26 | 986.25 | 135.48 | 71.96 | 28.04 | 1.39 | 10.50.2500.0 | HR Applicant Management |
| Sum or Average | 3044.29 | 2058.82 | 986.25 | | 47.90 | 52.10 | 2.09 | | |

Datastore: san-ssb-sql-unten-361

VDisk: sas-2048G-361

| VM | VM Provisioned (GB) | VM Used (GB) | SVC Used (GB) | SVC Delta Used (GB) | Rel. Compressed Size (%) | Rel. Compression Saving (%) | Compression Ratio | SQL Server Version | Comment |
|---|---|---|---|---|---|---|---|---|---|
| Empty Datastore | – | – | 6.66 MB | 6.66 MB | – | – | – | – | |
| Number 1 | 188.11 | 162.98 | 51.46 | 51.45 | 31.57 | 68.43 | 3.17 | 10.50.4000.0 | |
| Number 2 | 180.31 | 130.82 | 90.79 | 39.33 | 30.06 | 69.94 | 3.33 | 10.50.4000.0 | |
| Number 3 | 178.11 | 142.63 | 131.17 | 40.38 | 28.31 | 71.69 | 3.53 | 10.50.4000.0 | |
| Number 4 | 156.09 | 127.26 | 177.30 | 46.13 | 36.25 | 63.75 | 2.76 | 9.00.4035.00 | |
| Number 5 | 100.86 | 90.40 | 202.76 | 25.46 | 28.16 | 71.84 | 3.55 | 9.00.4035.00 | |
| – | – | – | 205.91 | 3.15 | – | – | – | – | |
| Number 6 | 139.09 | 79.17 | 233.85 | 27.94 | 35.29 | 64.71 | 2.83 | 11.0.5058.0 | |
| Number 7 | 34.11 | 24.16 | 246.37 | 12.52 | 51.82 | 48.18 | 1.93 | 9.00.4035.00 | |
| Number 8 | 696.32 | 289.09 | 402.07 | 155.07 | 53.86 | 46.14 | 1.86 | 11.0.5058.0 | SQL Page Compression |
| Sum or Average | 1673.00 | 1046.51 | 402.07 | | 38.42 | 61.58 | 2.60 | | |

Datastore: san-ssb-sql-oben-362

VDisk: sas-2048G-362

| VM | VM Provisioned (GB) | VM Used (GB) | SVC Used (GB) | SVC Delta Used (GB) | Rel. Compressed Size (%) | Rel. Compression Saving (%) | Compression Ratio | SQL Server Version | Comment |
|---|---|---|---|---|---|---|---|---|---|
| Empty Datastore | – | – | – | – | – | – | – | – | |
| Number 1 | 218.09 | 37.19 | 19.93 | 19.93 | 53.59 | 46.41 | 1.87 | 9.00.4035.00 | |
| Number 2 | 122.12 | 112.53 | 62.32 | 42.39 | 37.67 | 62.33 | 2.65 | 10.50.1600.1 | |
| Number 3 | 273.62 | 77.70 | 97.04 | 34.72 | 44.68 | 55.32 | 2.24 | 10.50.4000.0 | |
| Number 4 | 143.09 | 37.98 | 116.47 | 19.43 | 51.16 | 48.84 | 1.95 | 11.0.5058.0 | |
| Number 5 | 137.43 | 115.29 | 157.45 | 40.98 | 35.55 | 64.45 | 2.81 | 10.50.4000.0 | |
| Number 6 | 80.09 | 76.04 | 178.76 | 21.31 | 28.02 | 71.98 | 3.57 | 9.00.4035.00 | |
| – | – | – | 182.30 | 3.54 | – | – | – | – | |
| Number 7 | 136.09 | 117.59 | 229.95 | 47.65 | 40.52 | 59.48 | 2.47 | 9.00.4035.00 | |
| Number 8 | 194.11 | 53.55 | 253.40 | 23.45 | 43.79 | 56.21 | 2.28 | 10.50.2500.0 | |
| Number 9 | 78.11 | 52.60 | 273.07 | 19.67 | 37.40 | 62.60 | 2.67 | – | |
| Number 10 | 53.11 | 31.42 | 287.33 | 14.26 | 45.39 | 54.61 | 2.20 | 10.50.4000.0 | |
| Sum or Average | 1435.86 | 711.89 | 283.82 | | 40.36 | 59.64 | 2.48 | | |

From the detailed per-VM tables of each datastore shown above, an aggregated and summarized view was created, which is shown in the table below. The columns of the table are again pretty self-explanatory. Some of the less interesting columns from the detailed tables above have been dropped in the aggregated result table. The most interesting columns are again “Rel. Compressed Size”, “Rel. Compression Saving” and “Compression Ratio”. Again, if JavaScript is enabled in your browser, click on a table's column header to sort by that particular column.

| VM Datastore | SVC VDisk | VM Provisioned (GB) | VM Used (GB) | SVC Used (GB) | Rel. Compressed Size (%) | Rel. Compression Saving (%) | Compression Ratio |
|---|---|---|---|---|---|---|---|
| san-ssb-sql-oben-35C | sas-4096G-35C | 5189.63 | 3513.51 | 1497.55 | 42.10 | 57.90 | 2.38 |
| san-ssb-sql-unten-360 | sas-4096G-360 | 3044.29 | 2058.82 | 986.25 | 47.90 | 52.10 | 2.09 |
| san-ssb-sql-unten-361 | sas-2048G-361 | 976.68 | 757.42 | 243.21 | 32.53 | 67.47 | 3.07 |
| san-ssb-sql-oben-362 | sas-2048G-362 | 1435.86 | 711.89 | 283.82 | 40.36 | 59.64 | 2.48 |
| Sum or Average | | 10646.46 | 7041.64 | 3010.83 | 42.76 | 57.24 | 2.34 |

On the whole, the compression results achieved with RTC at the SVC level are quite good. Considering the fact that VMware already achieved a sizeable amount of storage reduction through its own thin-provisioning algorithms, the average RTC compression ratio of 2.34 at the SVC, with a minimum of 1.38 and a maximum of 5.97, is even more impressive. This means that on average only 42.76% of the storage space used by VMware is actually used on the SVC level to store the data. Taking VMware thin-provisioning into account, i.e. comparing against the provisioned instead of the used VM storage space, the relative amount of actual storage space needed drops even further to 28.28%, or in other words a reduction by a factor of 3.54.
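The aggregate figures quoted above can be re-derived from the sums of the summary table. The sketch below does just that; the variable names are illustrative only, and the input values are copied from the summary table.

```python
# (VM provisioned, VM used, SVC used) in GB per datastore, from the summary table.
datastores = {
    "san-ssb-sql-oben-35C": (5189.63, 3513.51, 1497.55),
    "san-ssb-sql-unten-360": (3044.29, 2058.82, 986.25),
    "san-ssb-sql-unten-361": (976.68, 757.42, 243.21),
    "san-ssb-sql-oben-362": (1435.86, 711.89, 283.82),
}

provisioned = sum(v[0] for v in datastores.values())
vm_used = sum(v[1] for v in datastores.values())
svc_used = sum(v[2] for v in datastores.values())

# RTC alone: SVC allocation relative to what VMware has actually allocated.
rtc_size_pct = svc_used / vm_used * 100
rtc_ratio = vm_used / svc_used

# RTC plus VMware thin-provisioning: SVC allocation relative to provisioned space.
overall_size_pct = svc_used / provisioned * 100
overall_factor = provisioned / svc_used

print(f"RTC only: {rtc_size_pct:.2f}% of VM used space, ratio {rtc_ratio:.2f}")
print(f"With thin-provisioning: {overall_size_pct:.2f}%, factor {overall_factor:.2f}")
```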

Looking at the individual graphs offers several other interesting insights, which can be indicators for further investigations on the database layer. In the graphs showing the relative allocation values and titled “SVC Real-Time Compression Relative Storage Allocation and Compression Ratio on VMware with MS SQL Server”, those data samples with a high “Rel. Compressed Size” value (purple bars) or a low “Compression Ratio” value (red line) are of particular interest in this case. Selecting those systems from the above results, with an arbitrarily chosen value of well below 2.00 for the “Compression Ratio” metric, gives us the following list of systems to take a closer look at:

| Sample | Datastore / VDisk | VM # | VM Provisioned (GB) | VM Used (GB) | SVC Delta Used (GB) | Compression Ratio | Comment |
|---|---|---|---|---|---|---|---|
| 1 | san-ssb-sql-oben-35C / sas-4096G-35C | 3 | 94.18 | 49.79 | 27.43 | 1.82 | SQLIO benchmark file |
| 2 | san-ssb-sql-oben-35C / sas-4096G-35C | 5 | 456.11 | 391.14 | 245.27 | 1.59 | SharePoint with file uploads |
| 3 | san-ssb-sql-oben-35C / sas-4096G-35C | 6 | 238.14 | 156.29 | 113.15 | 1.38 | HR Applicant Management |
| 4 | san-ssb-sql-oben-35C / sas-4096G-35C | 7 | 488.11 | 304.32 | 209.16 | 1.45 | SAP and MSSQL (with SQL Page Compression) on the same VM |
| 5 | san-ssb-sql-oben-35C / sas-4096G-35C | 10 | 292.12 | 104.31 | 59.46 | 1.75 | SAP and MSSQL (with SQL Page Compression) on the same VM |
| 6 | san-ssb-sql-unten-360 / sas-4096G-360 | 4 | 195.21 | 154.10 | 85.48 | 1.80 | SQLIO benchmark file |
| 7 | san-ssb-sql-unten-360 / sas-4096G-360 | 5 | 34.11 | 23.91 | 13.14 | 1.82 | |
| 8 | san-ssb-sql-unten-360 / sas-4096G-360 | 9 | 228.11 | 188.26 | 135.48 | 1.39 | HR Applicant Management |
| 9 | san-ssb-sql-unten-361 / sas-2048G-361 | 7 | 34.11 | 24.16 | 12.52 | 1.93 | |
| 10 | san-ssb-sql-unten-361 / sas-2048G-361 | 8 | 696.32 | 289.09 | 155.07 | 1.86 | SAP and MSSQL (with SQL Page Compression) on the same VM |
| 11 | san-ssb-sql-oben-362 / sas-2048G-362 | 1 | 218.09 | 37.19 | 19.93 | 1.87 | |
| 12 | san-ssb-sql-oben-362 / sas-2048G-362 | 4 | 143.09 | 37.98 | 19.43 | 1.95 | |

There could be many reasons why those particular systems exhibit a below-average compression ratio. Together with our DBAs we went over the list and came up with the following, neither exhaustive nor exclusive, list of explanations, which are already hinted at in the “Comment” column of the above table:

Samples 1 and 6: Those systems were recently used to test the influence of 4k vs. 64k NTFS cluster size on the database I/O performance. Over the course of these tests, a number of dummy files were written with the SQLIO benchmark tool. Although the dummy files were deleted after the tests were concluded, the storage blocks remained allocated with apparently less compressible contents.

Samples 2, 3 and 8: Those systems host the databases for SharePoint (sample #2) and an HR/HCM applicant management and employee training management system (samples #3 and #8). Both applications seem to be designed or configured to store files uploaded to the application as binary blobs in the database. Both use cases suggest that the uploaded file data is to some extent already in some kind of compressed format (e.g. xlsx, pptx, jpeg, png, mpeg, etc.), thus limiting the efficiency of any further compression attempt like RTC. Storing large amounts of unstructured data together with structured data in a database is the subject of frequent, ongoing and probably never-ending controversial discussions. There are several points of view on this question and good arguments are being made on either side. Personally, and from a purely operational point of view, I'd prefer the unstructured binary data not to be stored in a structured database.

Sample 4: This system hosts the legacy design described above, where a local application instance of SAP is installed alongside Microsoft SQL Server on the same system. The database tables of this SAP release already use the SQL Server page compression feature. Although this could very well have been the cause of the below-average compression ratio, a look into the OS offered a different plausible explanation. Inside the OS the sum of space allocated to the various filesystems is only approximately 202 GB, while at the same time the amount of space allocated by VMware is a little more than 304 GB. It seems that at one point in time there was a lot more data of unknown compressibility present on the system. The data has since been deleted, but the previously allocated storage blocks have not been properly reclaimed.

In order to put this theory to test, the procedure described in VMware KB 2004155 to reclaim the unused, but not de-allocated storage blocks, was applied. The following table shows the storage allocation for the system in sample #4 before and after the reclaim procedure:

| Sample | Datastore / VDisk | VM # | VM Provisioned (GB) | VM Used (GB) | SVC Delta Used (GB) | Compression Ratio | Comment |
|---|---|---|---|---|---|---|---|
| 4 | san-rtc-ssb-sql-unten-366 / sas-1024G-366 | 7 | 488.11 | 304.32 | 209.16 | 1.45 | SAP and MSSQL (with SQL Page Compression) on the same VM - before reclaiming unused storage space |
| 4 | san-rtc-ssb-sql-unten-366 / sas-1024G-366 | 7 | 488.11 | 172.53 | 81.29 | 2.12 | SAP and MSSQL (with SQL Page Compression) on the same VM - after reclaiming unused storage space |

The numbers show that the above assumption was correct. After zeroing unused disk space within the VM and reclaiming unused storage blocks by re-thin-provisioning the virtual disks in VMware, the RTC compression ratio rose from a meager 1.45 to a near average 2.12. Now, only 47.11% (instead of the previous 68.73%) of the storage space allocated by VMware is actually used on the SVC level. Again, taking VMware thin-provisioning into account, the relative amount of actual storage space needed drops even further to 16.65%, or in other words a reduction by a factor of 6.00.
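As a cross-check, the before and after figures for sample #4 can be recomputed from the three measured values per row. This is only a sketch with a made-up helper name; results may differ from the quoted figures in the last digit due to rounding.

```python
def allocation_summary(provisioned_gb, vm_used_gb, svc_delta_used_gb):
    """Relative allocation figures for one VM (hypothetical helper)."""
    return {
        "compression_ratio": round(vm_used_gb / svc_delta_used_gb, 2),
        "svc_share_of_vm_used_pct": round(svc_delta_used_gb / vm_used_gb * 100, 2),
        "svc_share_of_provisioned_pct": round(svc_delta_used_gb / provisioned_gb * 100, 2),
        "overall_reduction_factor": round(provisioned_gb / svc_delta_used_gb, 2),
    }


# Values from the table: provisioned, VM used, SVC delta used (all in GB).
before = allocation_summary(488.11, 304.32, 209.16)
after = allocation_summary(488.11, 172.53, 81.29)

for label, result in (("before reclaim", before), ("after reclaim", after)):
    print(label, result)
```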

Samples 5 and 10: These systems basically share the same circumstances as the previously examined system in sample #4. The only difference is that there is still some old, probably unused data sitting in the filesystems. In the case of sample #5 it's about 20 GB of compressed SAP installation media, and in the case of sample #10 it's about 84.3 GB of partially compressed SAP installation media and SAP SUM update files. Of course we'd also like to reclaim this storage space and, hopefully, in the course of this some deleted but not yet de-allocated storage blocks, too. By this we hope to see equally good end results as in the case of sample #4. We're currently waiting on clearance from our SAP team to dispose of some of the old and probably unused data.

Samples 7, 9, 11 and 12: Systems in this sample category are probably best described as just being “small”. To better illustrate what is meant by this, the following table shows a compiled view of these four samples. The data was taken from the detailed tabular representation of each datastore on a per VM basis that is shown above:

| Sample | Datastore / VDisk | VM # | VM Provisioned (GB) | VM Used (GB) | SVC Delta Used (GB) | Rel. Compressed Size (%) | Rel. Compression Saving (%) | Compression Ratio | Windows Version |
|---|---|---|---|---|---|---|---|---|---|
| 7 | san-ssb-sql-unten-360 / sas-4096G-360 | 5 | 34.11 | 23.91 | 13.14 | 54.96 | 45.04 | 1.82 | 2003 |
| 9 | san-ssb-sql-unten-361 / sas-2048G-361 | 7 | 34.11 | 24.16 | 12.52 | 51.82 | 48.18 | 1.93 | 2003 |
| 11 | san-ssb-sql-oben-362 / sas-2048G-362 | 1 | 218.09 | 37.19 | 19.93 | 53.59 | 46.41 | 1.87 | 2012 R2 |
| 12 | san-ssb-sql-oben-362 / sas-2048G-362 | 4 | 143.09 | 37.98 | 19.43 | 51.16 | 48.84 | 1.95 | 2012 R2 |

Inside the systems there is very little user or installation data besides the base Windows operating system, the SQL Server binaries and some standard management tools which are used in our environment. VMware thin-provisioning already does a significant amount of space reduction. It reduces the storage space that would be used on the SVC level to approximately 24 GB for Windows 2003 and approximately 37.5 GB for Windows 2012 R2 systems. Although the number of samples is rather low, the almost consistent values within each Windows release category suggest that these values represent, in our environment, the minimal amount of storage space needed for each Windows release. The compressed size of approximately 12.8 GB for Windows 2003 and approximately 19.7 GB for Windows 2012 R2 systems, as well as the average compression ratio of approximately 1.89, seem to support this theory in that they show very similar values within each Windows release category. It appears that, in our environment, these values are the bottom line with regard to minimal allocated storage capacity. There simply does not seem to be enough other data with good compressibility in those systems to achieve better results with regard to the overall compression ratio.
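The per-release averages mentioned above can be reproduced by grouping the four samples by Windows version. The following is a small sketch of that grouping using the table values; the variable names are chosen for illustration.

```python
from collections import defaultdict
from statistics import mean

# (sample, Windows version, VM used GB, SVC delta used GB, compression ratio)
small_systems = [
    (7, "2003", 23.91, 13.14, 1.82),
    (9, "2003", 24.16, 12.52, 1.93),
    (11, "2012 R2", 37.19, 19.93, 1.87),
    (12, "2012 R2", 37.98, 19.43, 1.95),
]

# Collect the uncompressed and compressed sizes per Windows release.
by_release = defaultdict(list)
for _sample, release, vm_used, svc_delta, _ratio in small_systems:
    by_release[release].append((vm_used, svc_delta))

averages = {
    release: (mean(u for u, _ in rows), mean(d for _, d in rows))
    for release, rows in by_release.items()
}
avg_ratio = mean(s[4] for s in small_systems)

for release, (avg_used, avg_compressed) in averages.items():
    print(f"Windows {release}: {avg_used:.1f} GB used, {avg_compressed:.1f} GB compressed")
print(f"Average compression ratio: {avg_ratio:.2f}")
```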

By and large, we're quite pleased with the results we're seeing from the SVC's real-time compression feature in our VMware and MS SQL Server environment. The amount of storage space saved is significant, especially with VMware thin-provisioning taken into account. Even in those cases where SQL Server's page compression feature is heavily used, we're still seeing quite good compression results with the use of the additional real-time compression at the SVC level. In part, this is very likely due to the fact that real-time compression at the SVC level also covers the VM's data that is outside of the scope covered by SQL Server's page compression. On the other hand, this does not entirely suffice to explain the amount of saved storage space we're seeing in cases where SQL Server page compression is used: between 50.86% and 73.86%, if we disregard some special cases for which the reasons for a low compression ratio were discussed above. From the data collected and shown above, it would appear that the algorithms used in SQL Server page compression still leave enough redundant data of low entropy for the algorithms used in the Random Access Compression Engine (RACE) at the SVC level to perform rather well.
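The quoted range can be traced back to the per-datastore tables: take every VM commented with “SQL Page Compression” and leave out the special cases discussed as samples 4, 5 and 10. A minimal sketch, with the short datastore labels chosen only for brevity:

```python
# (datastore, VM number, "Rel. Compression Saving" in %) for all VMs
# commented with "SQL Page Compression" in the per-datastore tables.
page_compressed = [
    ("35C", 7, 31.27), ("35C", 10, 43.00), ("35C", 14, 61.15),
    ("35C", 15, 73.86), ("35C", 16, 52.62),
    ("360", 1, 59.64), ("360", 2, 60.77), ("360", 3, 50.86), ("360", 6, 56.47),
    ("361", 8, 46.14),
]

# Special cases examined above as samples 4, 5 and 10.
special_cases = {("35C", 7), ("35C", 10), ("361", 8)}

regular = [
    saving for ds, vm, saving in page_compressed
    if (ds, vm) not in special_cases
]
lowest, highest = min(regular), max(regular)
print(f"Savings with SQL page compression: {lowest:.2f}% to {highest:.2f}%")
```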

In general, and with regard to performance in particular, we have up to now not noticed any negative side effects, e.g. noticeable increases in I/O latency, from using RTC for the various SQL Server and SAP systems shown above.

The use of RTC not only promises, but actually delivers a significant reduction of the storage space needed. It deals very well even with seemingly already compressed workloads, e.g. SQL Server page compressed databases. It thus enables a delayed procurement of additional storage resources, lower operational costs (administration, rack space, power, cooling, etc.), a reduced amount of I/O to the backend storage systems and a more efficient use of tier-1 storage, e.g. flash based storage systems.

On the other hand, RTC is not an out-of-the-box, fire-and-forget solution if it is to be used sensibly. The selected examples of “interesting” systems, which were shown and discussed above, illustrate very well that there are always use cases which require special attention. They also point out the increased necessity of good overall, interdisciplinary technical knowledge or very good and open communication between the organisational units responsible for application, database, operating system, virtualization and storage administration.

Due to the rather old release level of our VMware environment we unfortunately weren't able to cover the interaction of TRIM, UNMAP, VAAI and RTC. It'd be very interesting to see if and how well those storage block reclamation technologies work together with the SVC's real-time compression feature.

Comments and own experiences are – as always – very welcome!

2015-04-20 // Post-it art: Office window decorations - Pac-Man vs. Space Invaders vs. Spider-Pig

Awesome office window decorations made from colored Post-it stickers, featuring Pac-Man, Space Invaders, Spider-Pig and others:

Click on the image for an original-sized version. Sorry for the bad quality! Taken with my crappy phone camera on 2015/01/23 on my way to work, approximately from this location.

2015-02-06 // DIY - Lifter for a Coffee Pad Tin

As a Christmas gift I got a new tin to store a different brand of single-portion coffee pads for Senseo machines in. Those are the kind of attentive and useful gifts I really love and appreciate the most! They are used and enjoyed regularly, instead of just collecting dust in some closet. Every time you use them, they remind you in a positive way of the person who gave them to you and put a big, bright smile on your face.

The old coffee pad tin consists of three parts:

- A tin can body with about the same diameter as the coffee pads. This is in order to keep a vertical stack of pads from falling over.

- A tin can lid.

- A lifter – see the exclamation mark in the picture below – to ease the retrieval of individual coffee pads from the tin can body. The pads are put in a vertical stack onto the spiral made from wire at the bottom of the lifter. The horizontal top part of the lifter acts as a handle by which the lifter and the vertical stack of pads is pulled from the tin can body.

All three parts are shown in the following picture:

The new coffee pad tin is from a different manufacturer and only consists of two parts: a tin can body and a tin can lid. It was unfortunately missing the very convenient lifter described above (see the question mark in the picture below). So there was nothing to put the coffee pads on and pull them out of the tin can body for an easier individual retrieval. Instead, the tin can had to be shaken until one coffee pad fell out, or tipped over to get the whole stack out. Usually this resulted in the remaining pads not being vertically stacked anymore and instead being wedged together in a pile, which in turn hindered the subsequent retrieval of pads from the tin.

The new coffee pad tin is shown in the following picture:

In order to remedy this deficiency I decided to do a little low-tech DIY. So I picked up a spool of thick metal wire (Gardol Gartendraht Classic, length: 25m, diameter: 1,8mm) at the local hardware store for €3,99. Together with the tools shown in the following picture:

I made a replica of the lifter that came with the old coffee pad tin. The original lifter shown on the far right was used as a template. The yarn second to the right was used to measure the length of wire needed off the spool of wire, shown on the left side of the picture. This was done by following the contour of the original lifter with the thread and then measuring the length of the straightened thread against a straightened portion of the wire from the spool. A length of about 50cm of wire was used, so the cost of material was about €0,08. The pliers shown second to the left in the picture were used to cut off the measured piece of wire and to bend around the sharp cutoff edges of the wire. They were also used to hold and form the straightened piece of wire while bending it into roughly the same shape as the template.

The result was a lifter replica with roughly the same shape as the original. The following picture shows them side by side, with the replica on the left side and the original on the right side:

Now the new coffee pad tin also has three parts, same as the old coffee pad tin:

Two more pictures. The first one with the lifter replica in action in the empty new coffee pad tin:

And the second one with the lifter replica in action in the new coffee pad tin filled with a few pads:

Enhancing the new coffee pad tin by the simple means of a self-made lifter added even more value to it than what has already been described at the beginning of this post. It provided for a very enjoyable do-it-yourself time, the pleasurable feeling of accomplishment and success and the outcome is something that is very useful in everyday life. Best gift, ever

2015-02-03 // IBM Storwize V3700 out of Memory

Under certain conditions it is possible to inadvertently run into an out of memory situation on IBM Storwize V3700 systems, by simply running a Download Support Package procedure or the respective CLI command. This will – of course – bring all I/O on the affected system to a grinding halt.

A few days ago, the Nagios monitoring plugin introduced in “Nagios Monitoring - IBM SVC and Storwize” reported a failed PSU on one of our IBM Storwize V3700 systems. After raising a PMR with IBM in order to get the seemingly defective PSU replaced, I was told to simply reseat the PSU. According to IBM support this would usually fix this apparently known issue.

This was the first WTF moment and it turned out not to be the last one. So either IBM produces and sells subpar components (in this case the PSU) which need to be given a boot in order to be persuaded to cooperate again; yes, PSUs nowadays have their own firmware too. Or it means IBM produces and sells subpar software which is not at all able to properly detect a component failure and distinguish between a faulty and a good PSU. Or perhaps it's an unfortunate combination of both.

In any case, the procedure to reseat the PSU was carried out, which fixed the PSU issue. During the course of the fix procedure the system became unreachable via TCP/IP for a rather long time, though. Definitely over two minutes, but I didn't have a chance to take an exact measurement. After the system was reachable again I followed up on this strange behaviour and had a look at the system's event log. There were quite a lot of “Error Code: 1370, Error Code Text: SCSI ERP occurred” messages, so I decided to bother the IBM support again and send them a support collection in order to get an analysis with regard to the reachability issue as well as the 1370 errors.

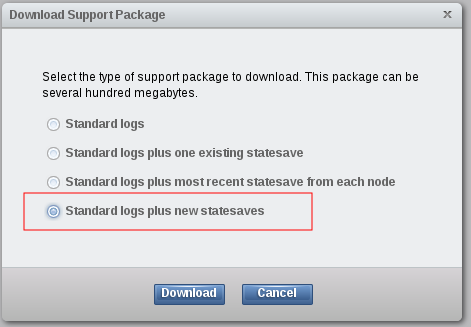

From previous occasions I knew that the IBM support would most likely request a support collection which was run with the “svc_livedump” CLI command or with the “Standard logs plus new statesaves” option from the WebUI. The latter one is marked red in the following screenshot example:

So I decided to pull a support collection with this option. Some time into the support collection process, the SVC sitting in front of the V3700 and other storage systems started to show very high latencies (~60 sec.) on the primary VDisks backed by the V3700. On other VDisks which “only” had their secondary VDisk-Mirror located on MDiskGroups of the V3700, the latency peak was less dramatic, but still very noticeable. Eventually degraded paths to the MDisks located on the V3700 started showing up on the SVC. After the support collection process finished the situation went back to normal. The latency on both primary and secondary VDisks instantly dropped down to the usual values and after running the fix procedures on the SVC, the degraded paths came back online.

The performance issues were severe enough in magnitude and duration to affect several applications pretty badly. Although the immediate issue was resolved, I still needed an analysis and written statement from IBM support for an action plan on how to prevent this kind of situation in the future and for compliance reasons as well. Here is the digest of what, according to IBM, happened:

- During the procedure to reseat the reportedly defective PSU, one or more power surges occurred.

- These power surges apparently caused issues on the internal disk buses, which led to the 1370 errors being logged.

- The power surges or the resulting 1370 errors are probably the cause of a failover of the config node too. Hence the connectivity issues with the CLI and the WebUI via TCP/IP.

- More 1370 errors were logged during the runtime of the subsequent “Standard logs plus new statesaves” support collection process.

- The issue of very high latency and MDisk paths becoming degraded was caused by the “Standard logs plus new statesaves” support collection process using up all of the memory (yes, including the data cache) on the V3700 system. This behaviour is specific and limited to the V3700 systems with “only” 4GB of memory.

As one can imagine, the last item was my second WTF moment. Apparently there are no programmatic safeguards to prevent the support collection process at the “Standard logs plus new statesaves” level from using up all of the system's memory. This would normally not be that bad at all, if “Standard logs plus new statesaves” wasn't the particular level which IBM support would usually request on support cases concerning SVC and Storwize systems. On the phone the IBM support technician admitted that this common practice is in general probably a bad idea. But he also mentioned that up to now he hadn't heard of the known side effects actually occurring, like in this case.

The suggestion on how to prevent this kind of situation in the future was to either upgrade the V3700 systems from 4GB to 8GB memory, a solution I would gladly take provided it came free of charge, or to run the support collection process only with the “svc_snap” CLI command or the “Standard logs” option from the WebUI. Since the memory upgrade for free isn't likely to happen, I'll stick with the second suggestion for now.

Incidentally, a third option came up over the last weekend. Looking at the IBM System Storage SAN Volume Controller V7.3.0.9 Release Note, it could be construed that someone at IBM SVC and Storwize development came to the realization that the issue could also be addressed in software, by altering the resource utilization of the support collection process:

HU00636 Livedump prepare fails on V3500 & V3700 systems with 4GB memory when cache partition fullness is less than 35%

Fingers crossed, this fix really addresses and resolves the issue described above.

2014-12-30 // Diff File Icon for Patch Files in DokuWiki

Essentially “.patch” files are, with regard to their content, not different from “.diff” files. Still, throughout this blog and all of my other documentation I like to use, and have also grown accustomed to using, the “.diff” filename suffix in cases where I want to focus on the semantics of a pure comparison. On the other hand, in cases where I want to focus on the semantics of actually applying a patch to a certain source file, the “.patch” filename suffix is used. I guess it's more of a personal quirk, rather than a well-founded scientific theory.

Unfortunately, the stock DokuWiki distribution has a little deficiency: it displays the appropriate diff file icon for “.diff” files only, but not for “.patch” files. Here is an example screenshot of what this looks like:

Note the plain white paper sheet icon in front of the filename “sblim-wbemcli-1.6.3_debug.patch”, which is the default file icon in case of an unknown filename suffix.

This behaviour basically exists, because the “.patch” filename suffix has no appropriate icon in the “<dokuwiki-path>/lib/images/fileicons/” directory:

    $ find <dokuwiki-path>/lib/images/ -type f -name "patch.*" -ls | wc -l
    0

See also the DokuWiki documentation on MIME configuration.

This can be quickly fixed, though, by just creating appropriate directory entries for the “.patch” filename suffix via symbolic links to the file icons for the “.diff” filename suffix:

    $ ln -s <dokuwiki-path>/lib/images/fileicons/diff.png <dokuwiki-path>/lib/images/fileicons/patch.png
    $ ln -s <dokuwiki-path>/lib/images/fileicons/32x32/diff.png <dokuwiki-path>/lib/images/fileicons/32x32/patch.png
    $ find <dokuwiki-path>/lib/images/ -name "patch.*" -ls
    ... lrwxrwxrwx ... <dokuwiki-path>/lib/images/fileicons/patch.png -> <dokuwiki-path>/lib/images/fileicons/diff.png
    ... lrwxrwxrwx ... <dokuwiki-path>/lib/images/fileicons/32x32/patch.png -> <dokuwiki-path>/lib/images/fileicons/32x32/diff.png
    $ touch <dokuwiki-path>/conf/local.php

The final “touch” command is necessary, since the icons are part of the DokuWiki cache and the application logic has to be notified to rebuild the now invalid cache.
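For setups where the same fix has to be repeated after upgrades or across several wikis, the manual steps could be wrapped in a small script. The following is only a sketch under the assumption that the directory layout is the one shown above; the function name `link_patch_icons` is made up, and the DokuWiki installation path has to be supplied by the caller.

```python
import os
from pathlib import Path


def link_patch_icons(dokuwiki_path: str) -> list:
    """Symlink patch.png to diff.png in both icon directories (hypothetical helper)."""
    root = Path(dokuwiki_path)
    created = []
    for icon_dir in (root / "lib/images/fileicons", root / "lib/images/fileicons/32x32"):
        target = icon_dir / "diff.png"
        link = icon_dir / "patch.png"
        # Only create the link if the diff icon exists and no patch icon is present yet.
        if target.exists() and not link.exists() and not link.is_symlink():
            os.symlink(target, link)
            created.append(link)
    # Same effect as the final "touch": update the timestamp of conf/local.php
    # so that DokuWiki rebuilds its cache.
    (root / "conf" / "local.php").touch()
    return created
```

Calling `link_patch_icons("/path/to/dokuwiki")` returns the list of links it created; a second call returns an empty list, since existing icons are left untouched.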

After reloading the respective DokuWiki page in the browser, note the now “Diff”-labeled paper sheet icon in front of the filename “sblim-wbemcli-1.6.3_debug.patch”:

This may be just a small change in the display of the DokuWiki page, but in my humble opinion it makes things much more pleasant to look at.