2012-10-23 // Nagios Monitoring - Rittal CMC-TC with LCP

We use Rittal LCP (Liquid Cooling Package) units to chill the 19" racks and equipment in the datacenters. The LCPs come with their own Rittal CMC-TC units for management and monitoring purposes. With check_rittal_health there is already a Nagios plugin to monitor Rittal CMC-TC units. Unfortunately this plugin didn't cover the LCPs, which come with a plethora of built-in sensors. The existing plugin also didn't allow setting individual monitoring thresholds. Therefore I modified the existing plugin to accommodate our needs. The modified version can be downloaded here: check_rittal_health.pl.

The whole setup for monitoring Rittal CMC-TC and LCP with Nagios looks like this:

Configure your CMC-TC unit as described in the manual CMC-TC Basic CMC DK 7320.111 - Montage, Installation und Bedienung (Assembly, Installation and Operation). Essential are the network settings, a user for SNMPv3 access and an SNMP trap receiver (your Nagios server running SNMPTT). Optional, but highly recommended, are configuring an NTP server, changing the default user passwords and disabling insecure services (Telnet, FTP, HTTP).

Download the Nagios plugin check_rittal_health.pl and place it in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

    $ mv -i check_rittal_health.pl /usr/lib/nagios/plugins/
    $ chmod 755 /usr/lib/nagios/plugins/check_rittal_health.pl

Define the following Nagios command. In this example this is done in the file /etc/nagios-plugins/config/check_cmc.cfg:

    # check Rittal CMC status
    define command {
        command_name    check_cmc_status
        command_line    $USER1$/check_rittal_health.pl --hostname $HOSTNAME$ --protocol $ARG1$ --username $ARG2$ --authpassword $ARG3$ --customthresholds "$ARG4$"
    }

Verify that a generic check command for a running SNMPD is already present in your Nagios configuration. If not, add a new check command like this:

    define command {
        command_name    check_snmpdv3
        command_line    $USER1$/check_snmp -H $HOSTADDRESS$ -o .1.3.6.1.2.1.1.3.0 -P 3 -t 30 -L $ARG1$ -U $ARG2$ -A $ARG3$
    }

Verify that a generic check command for an SSH service is already present in your Nagios configuration. If not, add a new check command like this:

    # 'check_ssh' command definition
    define command {
        command_name    check_ssh
        command_line    /usr/lib/nagios/plugins/check_ssh -t 20 '$HOSTADDRESS$'
    }

Verify that a generic check command for an HTTPS service is already present in your Nagios configuration. If not, add a new check command like this:

    # 'check_https_port_uri' command definition
    define command {
        command_name    check_https_port_uri
        command_line    /usr/lib/nagios/plugins/check_http --ssl -I '$HOSTADDRESS$' -p '$ARG1$' -u '$ARG2$'
    }

Define a group of services in your Nagios configuration to be checked for each CMC-TC device:

    # check host alive
    define service {
        use                     generic-service-pnp
        hostgroup_name          cmc
        service_description     Check_host_alive
        check_command           check-host-alive
    }
    # check sshd
    define service {
        use                     generic-service
        hostgroup_name          cmc
        service_description     Check_SSH
        check_command           check_ssh
    }
    # check snmpd
    define service {
        use                     generic-service
        hostgroup_name          cmc
        service_description     Check_SNMPDv3
        check_command           check_snmpdv3!authNoPriv!<user>!<pass>
    }
    # check httpd
    define service {
        use                     generic-service-pnp
        hostgroup_name          cmc
        service_description     Check_service_https
        check_command           check_https_port_uri!443!/
    }
    # check Rittal CMC status
    define service {
        use                     generic-service-pnp
        servicegroups           snmpchecks
        hostgroup_name          cmc
        service_description     Check_CMC_Status
        check_command           check_cmc_status!3!<user>!<pass>!airTemp,15:32,10:35\;coolingCapacity,0:10000,0:15000\;events,0:1,0:2\;fan,450:2000,400:2500\;temp,15:30,10:35\;waterFlow,0:70,0:100\;waterTemp,10:25,5:30
    }

Replace generic-service-pnp with your Nagios service template that has performance data processing enabled. Replace <user> and <pass> with the user credentials configured on the CMC-TC devices for SNMPv3 access. Adjust the sensor threshold settings according to your requirements. See the output of check_rittal_health.pl -h for an explanation of the threshold settings format.

Define a service dependency so that Check_CMC_Status runs only if the Check_SNMPDv3 check was successful:

    # Rittal CMC SNMPD dependencies
    define servicedependency {
        hostgroup_name                  cmc
        service_description             Check_SNMPDv3
        dependent_service_description   Check_CMC_Status
        execution_failure_criteria      c,p,u,w
        notification_failure_criteria   c,p,u,w
    }

Define hosts in your Nagios configuration for each CMC-TC device. In this example it's named cmc-host1:

    define host {
        use         cmc
        host_name   cmc-host1
        alias       Rittal CMC LCP
        address     10.0.0.1
        parents     parent_lan
    }

Replace cmc with your Nagios host template for the CMC-TC devices. Adjust the address and parents parameters according to your environment.

Define a hostgroup in your Nagios configuration for all CMC-TC devices. In this example it is named cmc. The above checks are run against each member of the hostgroup:

    define hostgroup {
        hostgroup_name  cmc
        alias           Rittal CMC
        members         cmc-host1
    }

Run a configuration check and, if it succeeds, reload the Nagios process:

    $ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
    $ /etc/init.d/nagios3 reload

The new hosts and services should soon show up in the Nagios web interface.
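The threshold string passed as the fourth argument of check_cmc_status bundles the limits for all sensor types. The authoritative description of its format is the output of check_rittal_health.pl -h. The Python sketch below only assumes that:

- each entry has the form sensor,warn_min:warn_max,crit_min:crit_max;
- entries are separated by plain semicolons. The \; in the service definition escapes the semicolon, which Nagios would otherwise treat as the start of a comment.
- a reading outside a range triggers the corresponding state.

The function names are hypothetical; this is not the plugin's own code.

```python
# Sketch: interpret a --customthresholds string such as the one used for check_cmc_status.
# Assumed format (verify with check_rittal_health.pl -h):
#   sensor,warn_min:warn_max,crit_min:crit_max[;sensor,...]
from typing import Dict, Tuple

Range = Tuple[float, float]
OK, WARNING, CRITICAL = 0, 1, 2  # standard Nagios plugin return codes


def parse_thresholds(spec: str) -> Dict[str, Tuple[Range, Range]]:
    """Return {sensor: ((warn_min, warn_max), (crit_min, crit_max))}."""
    limits: Dict[str, Tuple[Range, Range]] = {}
    for entry in spec.split(";"):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing semicolon
        sensor, warn, crit = entry.split(",")
        w_lo, w_hi = map(float, warn.split(":"))
        c_lo, c_hi = map(float, crit.split(":"))
        limits[sensor] = ((w_lo, w_hi), (c_lo, c_hi))
    return limits


def evaluate(value: float, warn: Range, crit: Range) -> int:
    """Map one sensor reading to a Nagios state; CRITICAL takes precedence."""
    if not crit[0] <= value <= crit[1]:
        return CRITICAL
    if not warn[0] <= value <= warn[1]:
        return WARNING
    return OK


if __name__ == "__main__":
    spec = ("airTemp,15:32,10:35;coolingCapacity,0:10000,0:15000;events,0:1,0:2;"
            "fan,450:2000,400:2500;temp,15:30,10:35;waterFlow,0:70,0:100;"
            "waterTemp,10:25,5:30")
    limits = parse_thresholds(spec)
    print(evaluate(33.0, *limits["airTemp"]))  # prints 1 (WARNING)
```

Under this reading, an air temperature of 33 lies outside 15:32 but inside 10:35 and would be reported as WARNING.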

If the Nagios server is running SNMPTT and was configured as an SNMP trap receiver in the first step above, SNMPTT also needs to be configured to understand the incoming SNMP traps from CMC-TC devices. This can be achieved with the following steps:

Convert the Rittal SNMP MIB definitions in CMC-TC_MIB_v1.1h.txt into a format that SNMPTT can understand:

    $ /opt/snmptt/snmpttconvertmib --in=MIB/CMC-TC_MIB_v1.1h.txt --out=/opt/snmptt/conf/snmptt.conf.rittal-cmc-tc
    ...
    Done

    Total translations:        10
    Successful translations:   10
    Failed translations:        0

The trap severity settings should be pretty reasonable by default, but you can edit them according to your requirements with:

$ vim /opt/snmptt/conf/snmptt.conf.rittal-cmc-tc

Add the new configuration file to be included in the global SNMPTT configuration and restart the SNMPTT daemon:

    $ vim /opt/snmptt/snmptt.ini
    ...
    [TrapFiles]
    snmptt_conf_files = <<END
    ...
    /opt/snmptt/conf/snmptt.conf.rittal-cmc-tc
    ...
    END
    $ /etc/init.d/snmptt reload
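Editing snmptt.ini in vim is fine for a single device type. If you script the setup, a small helper can add the path to the snmptt_conf_files = <<END ... END block idempotently. The add_trap_file() function below is a hypothetical sketch. It assumes exactly one such block, terminated by a line containing only END, as in the excerpt above, and it does not parse the rest of snmptt.ini.

```python
import re

# Matches the "snmptt_conf_files = <<END ... END" block of snmptt.ini.
HEREDOC = re.compile(r"^snmptt_conf_files\s*=\s*<<END\n(.*?)^END$",
                     re.MULTILINE | re.DOTALL)


def add_trap_file(ini_text: str, conf_path: str) -> str:
    """Return ini_text with conf_path listed in snmptt_conf_files (idempotent)."""
    match = HEREDOC.search(ini_text)
    if match is None:
        raise ValueError("no snmptt_conf_files heredoc found")
    listed = [line.strip() for line in match.group(1).splitlines()]
    if conf_path in listed:
        return ini_text  # already included, nothing to change
    end = match.end(1)  # offset where the terminating END line starts
    return ini_text[:end] + conf_path + "\n" + ini_text[end:]
```

Read the file, pass its content through add_trap_file() together with /opt/snmptt/conf/snmptt.conf.rittal-cmc-tc, write the result back and reload SNMPTT. Running it a second time leaves the file unchanged.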

Download the Nagios plugin check_snmp_traps.sh and place it in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

    $ mv -i check_snmp_traps.sh /usr/lib/nagios/plugins/
    $ chmod 755 /usr/lib/nagios/plugins/check_snmp_traps.sh
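The internals of check_snmp_traps.sh are not shown here. As a rough illustration of how a Nagios check against the SNMPTT database can work, the sketch below counts the stored traps of one host per severity and maps the worst one to a Nagios exit code.

Several parts of it are assumptions to verify against your installation:

- An in-memory SQLite table stands in for the SNMPTT MySQL database. With a MySQL driver you would go through a cursor instead of conn.execute().
- The table and column names (snmptt, hostname, severity) follow SNMPTT's default schema as far as I know.
- The severity mapping is my own choice.

```python
import sqlite3
from typing import Tuple

# Assumed mapping of SNMPTT severity strings to Nagios states; anything else is OK.
SEVERITY_TO_STATE = {"Critical": 2, "Major": 2, "Minor": 1, "Warning": 1}
STATE_NAMES = {0: "OK", 1: "WARNING", 2: "CRITICAL"}


def check_traps(conn, host_name: str, host_address: str) -> Tuple[int, str]:
    """Summarize the stored traps of one host as (exit code, status line).

    Assumes a table 'snmptt' with 'hostname' and 'severity' columns, where
    'hostname' holds either the Nagios host name or the IP address.
    """
    rows = conn.execute(
        "SELECT severity, COUNT(*) FROM snmptt "
        "WHERE hostname IN (?, ?) GROUP BY severity ORDER BY severity",
        (host_name, host_address),
    ).fetchall()
    state = max((SEVERITY_TO_STATE.get(sev, 0) for sev, _ in rows), default=0)
    summary = ", ".join(f"{count} {sev}" for sev, count in rows) or "no traps"
    return state, f"SNMP TRAPS {STATE_NAMES[state]} - {summary}"


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")  # stand-in for the SNMPTT MySQL database
    db.execute("CREATE TABLE snmptt (hostname TEXT, severity TEXT)")
    db.executemany("INSERT INTO snmptt VALUES (?, ?)",
                   [("cmc-host1", "Normal"), ("10.0.0.1", "Major")])
    print(check_traps(db, "cmc-host1", "10.0.0.1"))
```

Matching both the host name and the address mirrors the -H $HOSTNAME$:$HOSTADDRESS$ argument, since traps may be recorded under either.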

Define the following Nagios command to check for SNMP traps in the SNMPTT database. In this example this is done in the file /etc/nagios-plugins/config/check_snmp_traps.cfg:

    # check for snmp traps
    define command {
        command_name    check_snmp_traps
        command_line    $USER1$/check_snmp_traps.sh -H $HOSTNAME$:$HOSTADDRESS$ -u <user> -p <pass> -d <snmptt_db>
    }

Replace <user>, <pass> and <snmptt_db> with values suitable for your SNMPTT database environment.

Add another service in your Nagios configuration to be checked for each CMC device:

    # check snmptraps
    define service {
        use                     generic-service
        hostgroup_name          cmc
        service_description     Check_SNMP_traps
        check_command           check_snmp_traps
    }

Optional: Define a serviceextinfo to display a folder icon next to the Check_SNMP_traps service check for each CMC device. This icon provides a direct link to the SNMPTT web interface with a filter for the selected host:

    define serviceextinfo {
        hostgroup_name          cmc
        service_description     Check_SNMP_traps
        notes                   SNMP Alerts
        #notes_url              http://<hostname>/nagios3/nagtrap/index.php?hostname=$HOSTNAME$
        #notes_url              http://<hostname>/nagios3/nsti/index.php?perpage=100&hostname=$HOSTNAME$
    }

Uncomment one of the notes_url lines, depending on which web interface (nagtrap or nsti) is used. Replace <hostname> with the FQDN or IP address of the server running the web interface.

Run a configuration check and, if it succeeds, reload the Nagios process:

    $ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
    $ /etc/init.d/nagios3 reload

Optional: If you're running PNP4Nagios v0.6 or later to graph Nagios performance data, you can use the check_cmc_status.php PNP4Nagios template to beautify the graphs. Download the PNP4Nagios template check_cmc_status.php and place it in the PNP4Nagios template directory, in this example /usr/share/pnp4nagios/html/templates/:

    $ mv -i check_cmc_status.php /usr/share/pnp4nagios/html/templates/
    $ chmod 644 /usr/share/pnp4nagios/html/templates/check_cmc_status.php
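PNP4Nagios draws its graphs from the performance data that a plugin prints after the | character of its output. This data follows the format 'label'=value[UOM];[warn];[crit];[min];[max] from the Nagios plugin development guidelines.

To see what a template like check_cmc_status.php has to work with, the sketch below parses such a string into a dictionary. It is a simplified parser, not the one PNP4Nagios uses. The labels in the examples are made up and not necessarily what check_rittal_health.pl emits.

```python
import re
from typing import Dict

# 'label'=value[UOM];[warn];[crit];[min];[max]  (fields after the value are optional)
PERF_ITEM = re.compile(
    r"('(?:[^']|'')+'|[^\s=']+)="   # label, single-quoted if it contains spaces
    r"(-?[0-9.]+)([a-zA-Z%]*)"      # value and unit of measurement
    r"((?:;[^;\s]*){0,4})"          # up to four ;-separated threshold/limit fields
)


def parse_perfdata(text: str) -> Dict[str, dict]:
    """Parse the part of a plugin output line that follows the '|' character."""
    result = {}
    for label, value, uom, rest in PERF_ITEM.findall(text):
        if label.startswith("'"):
            label = label[1:-1].replace("''", "'")  # '' is an escaped quote
        fields = (rest.split(";")[1:] + [""] * 4)[:4]
        warn, crit, low, high = (field or None for field in fields)
        result[label] = {"value": float(value), "uom": uom, "warn": warn,
                         "crit": crit, "min": low, "max": high}
    return result
```

For example, parse_perfdata("'air temp'=23.5;15:32;10:35;;") yields the value 23.5 with warn "15:32" and crit "10:35". Thresholds stay strings because they may be ranges.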

The following image shows an example of what the PNP4Nagios graphs look like for a Rittal CMC-TC with an LCP-T3+ unit:

All done, you should now have a complete Nagios-based monitoring solution for your Rittal CMC-TC and LCP devices.

2012-10-02 // Nagios Monitoring - EMC Clariion

Some time ago I wrote a rather crude Nagios plugin to monitor EMC Clariion storage arrays, specifically the CX4-120 model. The plugin isn't very pretty, but it'll do in a pinch. It requires navicli and naviseccli, which are provided by EMC, to be installed on the Nagios system, and SNMP to be activated on the SPs of the array. A network connection from the Nagios system to the Clariion device on ports TCP/6389 and UDP/161 must be allowed.

Since the Nagios server in my setup runs on Debian/PPC on an IBM Power LPAR and there is no native Linux/PPC version of navicli or naviseccli, I had to run those tools through the IBM PowerVM LX86 emulator. If the plugin is run in an x86 environment, the variable RUNX86 has to be set to an empty value.

The whole setup looks like this:

Enable SNMP queries on each SP of the Clariion devices. Log in to Navisphere and for each Clariion device navigate to:

    -> Storage tab -> Storage Domains -> Local Domain -> <Name of the Clariion device>
       -> SP A -> Properties -> Network tab
          -> Check box "Enable / Disable processing of SNMP MIB read requests"
          -> Apply or OK
       -> SP B -> Properties -> Network tab
          -> Check box "Enable / Disable processing of SNMP MIB read requests"
          -> Apply or OK

Verify that port UDP/161 on the Clariion device can be reached from the Nagios system.

Optional: Enable SNMP traps to be sent to the Nagios system on each SP of the Clariion devices. This requires SNMPD and SNMPTT to be already set up on the Nagios system. Log in to Navisphere and for each Clariion device navigate to:

    -> Monitors tab -> Monitor -> Templates
       -> Create New Template Based On ... -> Call_Home_Template_6.28.5
          -> General tab -> <Select the events you're interested in>
          -> SNMP tab -> <Enter IP of the Nagios system and the SNMPD's community string>
          -> Apply or OK
       -> SP A -> <Name of the Clariion device> -> Monitor Using Template ... -> <Template name> -> OK
       -> SP B -> <Name of the Clariion device> -> Monitor Using Template ... -> <Template name> -> OK

Verify that port UDP/162 on the Nagios system can be reached from the Clariion devices.

Install navicli and naviseccli on the Nagios system, in this example /opt/Navisphere/bin/navicli and /opt/Navisphere/bin/naviseccli. Verify that port TCP/6389 on the Clariion device can be reached from the Nagios system.

Download the Nagios plugin check_cx.sh and place it in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

    $ mv -i check_cx.sh /usr/lib/nagios/plugins/
    $ chmod 755 /usr/lib/nagios/plugins/check_cx.sh

Adjust the plugin settings according to your environment. Edit the following variable assignments:

    NAVICLI=/opt/Navisphere/bin/navicli
    NAVISECCLI=/opt/Navisphere/bin/naviseccli
    NAVIUSER="adminuser"
    NAVIPASS="adminpassword"
    RUNX86=/usr/local/bin/runx86
    SNMPGETNEXT_ARGS="-On -v 1 -c public -t 15"
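RUNX86 is meant to be put in front of the navicli/naviseccli calls so that they run through the PowerVM LX86 emulator. In a shell script, an unquoted empty variable disappears during word splitting. That is presumably why an empty RUNX86 is enough to call the CLI directly on x86.

The sketch below mirrors the idea in Python: an optional wrapper command is prepended to the argument vector only when it is set. The function name is hypothetical. The getagent arguments are only illustrative; check check_cx.sh for the calls it actually makes.

```python
import shlex
from typing import List, Optional, Sequence


def build_command(tool: str, args: Sequence[str],
                  wrapper: Optional[str] = None) -> List[str]:
    """Return the argv for a Navisphere CLI call.

    wrapper plays the role of RUNX86: an emulator command such as
    /usr/local/bin/runx86 on Linux/PPC, or empty/None on native x86, in which
    case the tool is executed directly.
    """
    prefix = shlex.split(wrapper) if wrapper else []
    return prefix + [tool, *args]


if __name__ == "__main__":
    # Illustrative arguments: query the agent information of SP A.
    print(build_command("/opt/Navisphere/bin/navicli", ["-h", "cx1-spa", "getagent"],
                        "/usr/local/bin/runx86"))
    print(build_command("/opt/Navisphere/bin/navicli", ["-h", "cx1-spa", "getagent"], ""))
```

Building an argument list instead of a command string also avoids quoting problems when the result is passed to subprocess.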

Define the following Nagios commands. In this example this is done in the file /etc/nagios-plugins/config/check_cx.cfg:

    # check CX4 status
    define command {
        command_name    check_cx_status
        command_line    $USER1$/check_cx.sh -H $HOSTNAME$ -C snmp
    }
    define command {
        command_name    check_cx_cache
        command_line    $USER1$/check_cx.sh -H $HOSTNAME$ -C cache
    }
    define command {
        command_name    check_cx_disk
        command_line    $USER1$/check_cx.sh -H $HOSTNAME$ -C disk
    }
    define command {
        command_name    check_cx_faults
        command_line    $USER1$/check_cx.sh -H $HOSTNAME$ -C faults
    }
    define command {
        command_name    check_cx_sp
        command_line    $USER1$/check_cx.sh -H $HOSTNAME$ -C sp
    }

Verify that a generic check command for a running SNMPD is already present in your Nagios configuration. If not, add a new check command like this:

    define command {
        command_name    check_snmpd
        command_line    $USER1$/check_snmp -H $HOSTADDRESS$ -o .1.3.6.1.2.1.1.3.0 -P 1 -C public -t 30
    }

Define a group of services in your Nagios configuration to be checked for each Clariion device:

    # check snmpd
    define service {
        use                     generic-service
        hostgroup_name          cx4-ctrl
        service_description     Check_SNMPD
        check_command           check_snmpd
    }
    # check CX status
    define service {
        use                     generic-service
        hostgroup_name          cx4-ctrl
        service_description     Check_CX_status
        check_command           check_cx_status
    }
    # check CX cache
    define service {
        use                     generic-service
        hostgroup_name          cx4-ctrl
        service_description     Check_CX_cache
        check_command           check_cx_cache
    }
    # check CX disk
    define service {
        use                     generic-service
        hostgroup_name          cx4-ctrl
        service_description     Check_CX_disk
        check_command           check_cx_disk
    }
    # check CX faults
    define service {
        use                     generic-service
        hostgroup_name          cx4-ctrl
        service_description     Check_CX_faults
        check_command           check_cx_faults
    }
    # check CX sp
    define service {
        use                     generic-service
        hostgroup_name          cx4-ctrl
        service_description     Check_CX_sp
        check_command           check_cx_sp
    }

Replace generic-service with your Nagios service template.

Define a service dependency so that Check_CX_status runs only if the Check_SNMPD check was successful:

    # CX4 SNMPD dependencies
    define servicedependency {
        hostgroup_name                  cx4-ctrl
        service_description             Check_SNMPD
        dependent_service_description   Check_CX_status
        execution_failure_criteria      c,p,u,w
        notification_failure_criteria   c,p,u,w
    }

Define hosts in your Nagios configuration for each SP in the Clariion device. In this example they are named cx1-spa and cx1-spb:

    define host {
        use         disk
        host_name   cx1-spa
        alias       CX4-120 Disk Storage
        address     10.0.0.1
        parents     parent_lan
    }
    define host {
        use         disk
        host_name   cx1-spb
        alias       CX4-120 Disk Storage
        address     10.0.0.2
        parents     parent_lan
    }

Replace disk with your Nagios host template for storage devices. Adjust the address and parents parameters according to your environment.

Define a hostgroup in your Nagios configuration for all Clariion devices. In this example it is named cx4-ctrl. The above checks are run against each member of the hostgroup:

    define hostgroup {
        hostgroup_name  cx4-ctrl
        alias           CX4 Disk Storages
        members         cx1-spa, cx1-spb
    }

Run a configuration check and, if it succeeds, reload the Nagios process:

    $ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
    $ /etc/init.d/nagios3 reload

The new hosts and services should soon show up in the Nagios web interface.
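The servicedependency definitions control two things. Nagios skips the dependent check (execution_failure_criteria) and suppresses its notifications (notification_failure_criteria) while the master service is in one of the listed states: c (CRITICAL), p (PENDING), u (UNKNOWN) or w (WARNING).

The sketch below models only the execution decision. It is a simplification that ignores inherits_parent, dependency periods and soft/hard state handling, and the function names are made up.

```python
from typing import Set

# One-letter state codes used in servicedependency failure criteria.
STATE_LETTERS = {"OK": "o", "WARNING": "w", "UNKNOWN": "u",
                 "CRITICAL": "c", "PENDING": "p"}


def parse_criteria(criteria: str) -> Set[str]:
    """'c,p,u,w' -> {'c', 'p', 'u', 'w'}; 'n' (none) means the dependency never fails."""
    letters = {part.strip() for part in criteria.split(",") if part.strip()}
    return set() if "n" in letters else letters


def should_execute(master_state: str, execution_failure_criteria: str) -> bool:
    """True if the dependent check may run, given the master service's state."""
    return STATE_LETTERS[master_state] not in parse_criteria(execution_failure_criteria)


if __name__ == "__main__":
    for state in ("OK", "WARNING", "CRITICAL"):
        print(state, should_execute(state, "c,p,u,w"))
```

With c,p,u,w, only an OK Check_SNMPD lets Check_CX_status run. An unreachable SNMP agent therefore produces one alert instead of two.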

If the optional SNMP trap step above was carried out, SNMPTT also needs to be configured to understand the incoming SNMP traps from Clariion devices. This can be achieved with the following steps:

Convert the EMC Clariion SNMP MIB definitions in emc-clariion.mib into a format that SNMPTT can understand:

    $ /opt/snmptt/snmpttconvertmib --in=MIB/emc-clariion.mib --out=/opt/snmptt/conf/snmptt.conf.emc-clariion
    ...
    Done

    Total translations:        5
    Successful translations:   5
    Failed translations:       0

Edit the trap severity according to your requirements, e.g.:

    $ vim /opt/snmptt/conf/snmptt.conf.emc-clariion
    ...
    EVENT EventMonitorTrapWarn .1.3.6.1.4.1.1981.0.4 "Status Events" Warning
    ...
    EVENT EventMonitorTrapFault .1.3.6.1.4.1.1981.0.6 "Status Events" Critical
    ...

Optional: Apply the following patch to the configuration to reduce the number of false positives:

- snmptt.conf.emc-clariion

    diff -u snmptt.conf.emc-clariion_1 snmptt.conf.emc-clariion
    --- snmptt.conf.emc-clariion.orig 2012-10-02 19:04:15.000000000 +0200
    +++ snmptt.conf.emc-clariion 2009-07-21 10:28:44.000000000 +0200
    @@ -54,8 +54,31 @@
     #
     #
     #
    +EVENT EventMonitorTrapError .1.3.6.1.4.1.1981.0.5 "Status Events" Major
    +FORMAT An Error EventMonitorTrap is generated in $*
    +MATCH MODE=or
    +MATCH $*: !(( Power [AB] : Faulted|Disk Array Enclosure .Bus [0-9] Enclosure [0-9]. is faulted))
    +MATCH $X: !(0(2:5|3:0|3:1)[0-9]:[0-9][0-9])
    +SDESC
    +An Error EventMonitorTrap is generated in
    +response to a user-specified event.
    +Details can be found in Variables data.
    +Variables:
    +  1: hostName
    +  2: deviceID
    +  3: eventID
    +  4: eventText
    +  5: storageSystem
    +EDESC
    +#
    +# Filter and ignore the following events
    +# 02:50 - 03:15 Navisphere Power Supply Checks
    +#
     EVENT EventMonitorTrapError .1.3.6.1.4.1.1981.0.5 "Status Events" Normal
     FORMAT An Error EventMonitorTrap is generated in $*
    +MATCH MODE=and
    +MATCH $*: (( Power [AB] : Faulted|Disk Array Enclosure .Bus [0-9] Enclosure [0-9]. is faulted))
    +MATCH $X: (0(2:5|3:0|3:1)[0-9]:[0-9][0-9])
     SDESC
     An Error EventMonitorTrap is generated in
     response to a user-specified event.

The reason for this is that the Clariion performs a power supply check every Friday around 3:00 am. This triggers an SNMP trap, even if the power supplies check out fine. In my opinion this behaviour is defective, but a case opened on this issue showed that EMC thinks otherwise. Since there was very little hope of EMC coming to its senses, I simply applied the above patch to the SNMPTT configuration file. It lowers the severity of all “Major” traps that are power supply related and sent around 3:00 am to “Normal”. All other “Major” traps keep their original severity.
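The rule described above can be tried out in Python with the same two regular expressions: Normal only when a power supply fault arrives inside the check window, Major otherwise.

The sketch assumes that SNMPTT's $* expands to all variable bindings as one string and $X to the time the trap was received, as HH:MM:SS. The example trap texts are made up.

Note that the time pattern 0(2:5|3:0|3:1)[0-9]:[0-9][0-9] matches 02:50:00 through 03:19:59, slightly more than the 02:50 - 03:15 stated in the comment.

```python
import re

# Patterns from the MATCH lines of the patch above (SNMPTT's outer parentheses removed).
POWER_FAULT = re.compile(
    r"( Power [AB] : Faulted|Disk Array Enclosure .Bus [0-9] Enclosure [0-9]. is faulted)")
CHECK_WINDOW = re.compile(r"(0(2:5|3:0|3:1)[0-9]:[0-9][0-9])")  # 02:50:00 to 03:19:59


def severity(varbinds: str, trap_time: str) -> str:
    """Severity the patched configuration is meant to assign to an Error trap.

    varbinds stands for SNMPTT's $* (all variable bindings as one string) and
    trap_time for $X (time the trap was received, HH:MM:SS).
    """
    if POWER_FAULT.search(varbinds) and CHECK_WINDOW.search(trap_time):
        return "Normal"  # the scheduled power supply check around 03:00
    return "Major"


if __name__ == "__main__":
    print(severity("SP A Power A : Faulted", "03:04:12"))  # prints Normal
    print(severity("SP A Power A : Faulted", "14:04:12"))  # prints Major
```

Testing the patterns this way before reloading SNMPTT is cheaper than waiting for the next Friday morning.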

Add the new configuration file to be included in the global SNMPTT configuration and restart the SNMPTT daemon:

    $ vim /opt/snmptt/snmptt.ini
    ...
    [TrapFiles]
    snmptt_conf_files = <<END
    ...
    /opt/snmptt/conf/snmptt.conf.emc-clariion
    ...
    END
    $ /etc/init.d/snmptt reload

Download the Nagios plugin check_snmp_traps.sh and place it in the plugins directory of your Nagios system, in this example /usr/lib/nagios/plugins/:

    $ mv -i check_snmp_traps.sh /usr/lib/nagios/plugins/
    $ chmod 755 /usr/lib/nagios/plugins/check_snmp_traps.sh

Define the following Nagios command to check for SNMP traps in the SNMPTT database. In this example this is done in the file /etc/nagios-plugins/config/check_snmp_traps.cfg:

    # check for snmp traps
    define command {
        command_name    check_snmp_traps
        command_line    $USER1$/check_snmp_traps.sh -H $HOSTNAME$:$HOSTADDRESS$ -u <user> -p <pass> -d <snmptt_db>
    }

Replace <user>, <pass> and <snmptt_db> with values suitable for your SNMPTT database environment.

Add another service in your Nagios configuration to be checked for each Clariion device:

    # check snmptraps
    define service {
        use                     generic-service
        hostgroup_name          cx4-ctrl
        service_description     Check_SNMP_traps
        check_command           check_snmp_traps
    }

Optional: Define a serviceextinfo to display a folder icon next to the Check_SNMP_traps service check for each Clariion device. This icon provides a direct link to the SNMPTT web interface with a filter for the selected host:

    define serviceextinfo {
        hostgroup_name          cx4-ctrl
        service_description     Check_SNMP_traps
        notes                   SNMP Alerts
        #notes_url              http://<hostname>/nagios3/nagtrap/index.php?hostname=$HOSTNAME$
        #notes_url              http://<hostname>/nagios3/nsti/index.php?perpage=100&hostname=$HOSTNAME$
    }

Uncomment one of the notes_url lines, depending on which web interface (nagtrap or nsti) is used. Replace <hostname> with the FQDN or IP address of the server running the web interface.

Run a configuration check and, if it succeeds, reload the Nagios process:

    $ /usr/sbin/nagios3 -v /etc/nagios3/nagios.cfg
    $ /etc/init.d/nagios3 reload

All done, you should now have a complete Nagios-based monitoring solution for your EMC Clariion devices.

2012-10-01 // Nagios Performance Tuning

I'm running Nagios in a bit of an unusual setup, namely on a Debian/PPC system, which runs in an LPAR on an IBM Power System, using a dual VIOS setup for access to I/O resources (SAN disks and networks). The Nagios server runs the stock Debian “Squeeze” packages:

    nagios-nrpe-plugin        2.12-4              Nagios Remote Plugin Executor Plugin
    nagios-nrpe-server        2.12-4              Nagios Remote Plugin Executor Server
    nagios-plugins            1.4.15-3squeeze1    Plugins for the nagios network monitoring and management system
    nagios-plugins-basic      1.4.15-3squeeze1    Plugins for the nagios network monitoring and management system
    nagios-plugins-standard   1.4.15-3squeeze1    Plugins for the nagios network monitoring and management system
    nagios3                   3.2.1-2             A host/service/network monitoring and management system
    nagios3-cgi               3.2.1-2             cgi files for nagios3
    nagios3-common            3.2.1-2             support files for nagios3
    nagios3-core              3.2.1-2             A host/service/network monitoring and management system core files
    nagios3-doc               3.2.1-2             documentation for nagios3
    ndoutils-common           1.4b9-1.1           NDOUtils common files
    ndoutils-doc              1.4b9-1.1           Documentation for ndoutils
    ndoutils-nagios3-mysql    1.4b9-1.1           This provides the NDOUtils for Nagios with MySQL support
    pnp4nagios                0.6.12-1~bpo60+1    Nagios addon to create graphs from performance data
    pnp4nagios-bin            0.6.12-1~bpo60+1    Nagios addon to create graphs from performance data (binaries)
    pnp4nagios-web            0.6.12-1~bpo60+1    Nagios addon to create graphs from performance data (web interface)

It monitors about 220 hosts, which are either Unix systems (AIX or Linux), storage systems (EMC Clariion, EMC Centera, Fujitsu DX, HDS AMS, IBM DS, IBM TS), SAN devices (Brocade 48000, Brocade DCX, IBM SVC) or other hardware devices (IBM Power, IBM HMC, Rittal CMC). About 5000 services are checked in a 5-minute interval, mostly with NRPE, SNMP, TCP/UDP and custom plugins utilizing vendor-specific tools. The server also runs Cacti, SNMPTT, DokuWiki and several other smaller tools, so it has always been quite busy.

In the past I had already implemented some performance optimizations in order to reduce the overall load on the system and to get all checks done within the 5-minute timeframe. Those were, in no specific order:

Use C/C++ based plugins. Try to avoid plugins that depend on additional, rather large runtime environments (e.g. Perl, Java, etc.).

If Perl based plugins are still necessary, use the Nagios embedded Perl interpreter to run them. See Using The Embedded Perl Interpreter for more information and Developing Plugins For Use With Embedded Perl on how to develop and debug plugins for the embedded Perl interpreter.

When using plugins based on shell scripts, try to minimize the number of calls to additional command line tools by using the shell's built-in facilities. For example, bash and ksh93 have a built-in syntax for manipulating strings, which can be used instead of calling sed or awk.

Create a ramdisk with a filesystem on it to hold the I/O-heavy files and directories. In my case /etc/fstab contains the following line, which prepares an empty ramdisk-based filesystem at boot time:

    # RAM disk for volatile Nagios files
    none  /var/ram/nagios3  tmpfs  defaults,size=256m,mode=750,uid=nagios,gid=nagios  0 0

The init script /etc/init.d/nagios-prepare is run before the Nagios init script. It creates a directory tree on the ramdisk and copies the necessary files from the disk-based, non-volatile filesystems over to the ramdisk via rsync:

    Source                            Destination
    /var/log/nagios3/retention.dat    /var/ram/nagios3/log/retention.dat
    /var/log/nagios3/nagios.log       /var/ram/nagios3/log/nagios.log
    /var/cache/nagios3/               /var/ram/nagios3/cache/

The following Nagios configuration stanzas have been altered to use the new ramdisk:

    check_result_path=/var/ram/nagios3/spool/checkresults
    log_file=/var/ram/nagios3/log/nagios.log
    object_cache_file=/var/ram/nagios3/cache/objects.cache
    state_retention_file=/var/ram/nagios3/log/retention.dat
    temp_file=/var/ram/nagios3/cache/nagios.tmp
    temp_path=/var/ram/nagios3/tmp

To prevent loss of data in the event of a system crash, a cronjob is run every 5 minutes to rsync some files back from the ramdisk to the disk based, non-volatile filesystems:

    Source                     Destination
    /var/ram/nagios3/log/      /var/log/nagios3/
    /var/ram/nagios3/cache/    /var/cache/nagios3/

This job should also be run before the system is shut down or rebooted.
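The cron job described above uses rsync. For illustration only, the same two mappings can be expressed in Python with shutil.copytree(..., dirs_exist_ok=True), which requires Python 3.8 or later. There are differences from rsync: this copies every file on each run instead of only changed ones, and it never deletes files on the destination. The sync_back() name is hypothetical.

```python
import shutil
from pathlib import Path
from typing import Iterable, Tuple

# Ramdisk -> disk pairs from the table above.
SYNC_BACK = [
    ("/var/ram/nagios3/log/", "/var/log/nagios3/"),
    ("/var/ram/nagios3/cache/", "/var/cache/nagios3/"),
]


def sync_back(pairs: Iterable[Tuple[str, str]]) -> None:
    """Copy each ramdisk tree onto its disk-based counterpart.

    Existing destination files are overwritten; nothing is deleted there.
    """
    for src, dst in pairs:
        if Path(src).is_dir():  # skip silently if the ramdisk is not mounted
            shutil.copytree(src, dst, dirs_exist_ok=True)
```

Calling sync_back(SYNC_BACK) from cron every 5 minutes and from the shutdown sequence covers the same two cases as the rsync job.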

Disable free()ing of child process memory (see: Nagios Main Configuration File Options - free_child_process_memory) with:

    free_child_process_memory=0

in the main Nagios configuration file. This can safely be done since the Linux OS will take care of that once the fork()ed child exits.

Disable fork()ing twice when creating a child process (see: Nagios Main Configuration File Options - child_processes_fork_twice) with:

    child_processes_fork_twice=0

in the main Nagios configuration file.

Recently, after “just” adding another process to be monitored, I ran into a strange problem where suddenly all checks would fail with error messages similar to this example:

    [1348838643] Warning: Return code of 127 for check of service 'Check_process_gmond' on host 'host1' was out of bounds. Make sure the plugin you're trying to run actually exists
    [1348838643] SERVICE ALERT: host1;Check_process_gmond;CRITICAL;SOFT;1;(Return code of 127 is out of bounds - plugin may be missing)

This affected even checks totally unrelated to the new process to be monitored. After removing the new check from some hostgroups, everything was fine again. So the problem wasn't with the check per se, but rather with the number of checks; it felt like I was hitting some internal limit of the Nagios process. It turned out to be exactly that: the Nagios macros exported into the environment of the fork()ed child process had reached the OS limit for a process's environment. After checking all plugins for any usage of the Nagios macros in the process environment, I decided to turn this option off (see: Nagios Main Configuration File Options - enable_environment_macros) with:

    enable_environment_macros=0

in the main Nagios configuration file. Along with the two options above, I had now basically reached what the single use_large_installation_tweaks configuration option also does (see: Nagios Main Configuration File Options - use_large_installation_tweaks).
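A rough way to see how close the exported macros come to such a limit is to add up the size of the environment and compare it with ARG_MAX. POSIX defines ARG_MAX as the limit for the arguments to the exec functions including the environment data.

The sketch below does this for a made-up macro set. The NAGIOS_ variable names follow the naming scheme of Nagios environment macros, but the selection and values are hypothetical. The result is only an approximation, since the kernel also counts the pointer arrays and Linux additionally limits the length of each single string.

```python
import os
from typing import Mapping


def env_block_size(env: Mapping[str, str]) -> int:
    """Bytes taken by the 'NAME=value' strings of an environment, each NUL-terminated."""
    return sum(len(name.encode()) + 1 + len(value.encode()) + 1
               for name, value in env.items())


def fits_exec_limit(env: Mapping[str, str], argv_bytes: int = 0) -> bool:
    """Rough check of argv + environment against ARG_MAX as reported by sysconf()."""
    return argv_bytes + env_block_size(env) < os.sysconf("SC_ARG_MAX")


if __name__ == "__main__":
    # Hypothetical macro set; a long member list easily dominates the total.
    members = ",".join(f"host{i}" for i in range(220))
    macros = {"NAGIOS_HOSTNAME": "host1", "NAGIOS_HOSTGROUPMEMBERS": members}
    print(env_block_size(macros), os.sysconf("SC_ARG_MAX"), fits_exec_limit(macros))
```

When exec fails because of an oversized environment, the child cannot start the plugin at all. That fits the return code 127 seen in the log above.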

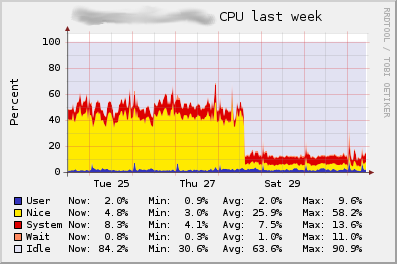

After reloading the Nagios process not only did the new process checks now work for all hostgroups, but there was also a very noticable drop in the systems CPU load:

2012-09-05 // AIX RPMs: aaa_base, logrotate and run_parts

As announced earlier, here are the first three AIX RPM packages:

aaa_base: The AFW Package Management base package. This package installs several important configuration files, directories, programs and scripts. Currently there's only a script handling some post-LPM actions via an errnotify ODM method. More features will be added in the future.

logrotate: A slightly modified version of Michael Perzl's logrotate package. The logrotate.d directory has been moved into /opt/freeware/etc/logrotate/ to avoid cluttering the /opt/freeware/etc/ directory. An integration with run_parts (see below) with a daily job has been added.

run_parts: Like its Linux equivalent, run_parts implements a wrapper for the execution of cron jobs. Among its features are:

Sets up a useful environment for the execution of cronjobs.

Guarantees only one instance of a job is active at any time.

Is able to execute jobs in a predictable sequence one by one.

Sends mails with any output of the wrapped script to a configurable mail address (root by default).

Provides meaningful mail subject lines, depending on the exit code of the wrapped script.

Clearly indicates the reason for exit, the exit code and the run time.
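The run_parts code itself ships in the RPM. The sketch below is not that code but a minimal Python illustration of three of the listed features:

- a lock file, so that only one instance is active at any time;
- execution of the jobs in a directory one by one, in a predictable (sorted) order;
- recording the exit code and run time per job.

It assumes a POSIX system (fcntl.flock). The lock file path is a hypothetical parameter, and the mail part is left out.

```python
import fcntl
import os
import subprocess
import time
from pathlib import Path
from typing import List, Tuple


def run_parts(job_dir: str, lock_file: str) -> List[Tuple[str, int, float, str]]:
    """Run every executable file in job_dir, one by one, in sorted order.

    Returns (name, exit_code, seconds, output) per job. Raises BlockingIOError
    if another instance already holds the lock.
    """
    results = []
    with open(lock_file, "w") as lock:
        # Non-blocking exclusive lock: a second instance fails immediately.
        fcntl.flock(lock, fcntl.LOCK_EX | fcntl.LOCK_NB)
        for job in sorted(Path(job_dir).iterdir()):
            if not (job.is_file() and os.access(job, os.X_OK)):
                continue  # skip non-executables such as README files
            start = time.monotonic()
            proc = subprocess.run([str(job)], stdout=subprocess.PIPE,
                                  stderr=subprocess.STDOUT, text=True)
            results.append((job.name, proc.returncode,
                            time.monotonic() - start, proc.stdout))
    return results  # the lock is released when the file is closed
```

A mail subject could then be derived from the collected exit codes, e.g. flagging the run as failed if any job returned non-zero.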

Upon package installation, several cronjobs for hourly, daily, weekly and monthly execution are added. The exact execution times and dates are randomized within different ranges at installation time, in order to avoid a thundering herd problem, which can occur in larger, time-synchronized environments. The time ranges are:

Run hourly jobs at a random minute not equal to the full hour.

Run daily jobs every day between 03:01am and 05:59am.

Run weekly jobs on Saturday, between 00:01am and 02:59am.

Run monthly jobs on the first day of the month, between 03:01am and 05:59am.
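The randomization can be pictured as drawing one fixed time per interval once, at installation time, and writing it into a crontab. The sketch below generates such entries for the ranges above.

The run-parts path is the one mentioned in this post. Passing the job directory as its only argument is an assumption, as is the exact crontab layout the RPM writes. Weekday 6 is Saturday in cron's 0 (Sunday) to 6 numbering.

```python
import random
from typing import List, Optional, Tuple

RUN_PARTS = "/opt/freeware/libexec/run_parts/run-parts"
JOB_DIR = "/opt/freeware/etc/run_parts"


def random_time(rng: random.Random, start: str, end: str) -> Tuple[int, int]:
    """Pick (hour, minute) uniformly between start and end ("HH:MM", inclusive)."""
    def minutes(hhmm: str) -> int:
        hours, mins = hhmm.split(":")
        return int(hours) * 60 + int(mins)
    return divmod(rng.randint(minutes(start), minutes(end)), 60)


def crontab_lines(rng: Optional[random.Random] = None) -> List[str]:
    """Build one randomized crontab line per interval, as drawn once at install time."""
    rng = rng or random.Random()
    hourly_minute = rng.randint(1, 59)  # never on the full hour
    d_hour, d_min = random_time(rng, "03:01", "05:59")
    w_hour, w_min = random_time(rng, "00:01", "02:59")
    m_hour, m_min = random_time(rng, "03:01", "05:59")
    return [
        f"{hourly_minute} * * * * {RUN_PARTS} {JOB_DIR}/hourly.d",
        f"{d_min} {d_hour} * * * {RUN_PARTS} {JOB_DIR}/daily.d",
        f"{w_min} {w_hour} * * 6 {RUN_PARTS} {JOB_DIR}/weekly.d",   # 6 = Saturday
        f"{m_min} {m_hour} 1 * * {RUN_PARTS} {JOB_DIR}/monthly.d",  # 1st of month
    ]


if __name__ == "__main__":
    print("\n".join(crontab_lines()))
```

Because each host draws its own times, a fleet of time-synchronized machines spreads its maintenance jobs over the whole window instead of starting them all at the same second.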

Jobs for hourly, daily, weekly or monthly execution can be added by placing them or symlinking them into the corresponding directories:

    /opt/freeware/etc/run_parts/hourly.d/
    /opt/freeware/etc/run_parts/daily.d/
    /opt/freeware/etc/run_parts/weekly.d/
    /opt/freeware/etc/run_parts/monthly.d/

Jobs for other execution intervals need to be scheduled individually via the usual crontab entries, but can be prefixed with the run_parts wrapper (/opt/freeware/libexec/run_parts/run-parts) to still gain some of the advantages listed above.

2012-08-31 // AIX RPMs: Introduction

Traditionally AIX and Open Source Software (OSS) have a more “distant” relationship than one would like them to have. This is odd, considering how much IBM contributes in other OSS areas (Linux kernel, Samba, OpenOffice, etc.). Over the years and in discussions with others, I've come up with a list of reasons – in no particular order and in no way exhaustive – as to why this is:

Linux or BSD on x86 hardware or virtual machines are just more common these days. Thus if you want to use, deploy or even develop some OSS tool you'll probably end up using one of those platforms with a mature and ready to use OSS distribution.

Development environments on other platforms are more readily available. See the previous bullet point. But also, IBM has done a far better job than e.g. Sun Microsystems or HP in keeping used hardware off the streets. This has effectively prevented the formation of a significant hobbyist user base or breeding ground of people using OSS on AIX and contributing their gained experiences about running OSS on AIX back to the OSS projects. Admittedly IBM has its own community platform with DeveloperWorks, but it lacks freely accessible build or test hosts like HP used to have for its HP-UX and VMS platforms.

IBM does not have – at least here in Europe – as close a traditional connection to the scientific and educational community as e.g. Sun Microsystems had. A lot of the early startups during the first internet hype used Sun Solaris on SPARC as a platform, since the people starting to work there already knew the platform from their time at college or university. The experiences gained from those early internet days had a lot of influence on the resulting OSS projects.

AIX is and has always been a bit of an oddball among the Unixes. Some concepts seem strange at first but turn out not to be so bad at all once you get to know them (e.g. the shared library concept); others you'll hate forever (e.g. the screwed up startup script mess with a mixture of inittab, rc scripts and SRC).

IBM has – IMHO in a very snobby way – always branded AIX as an “enterprise class Unix”, thus discarding a lot of potential users and customers along the way. It sometimes seems and feels to the user that one should consider himself lucky to be allowed to run AIX at all. Running it with anything other than a pure IBM software stack (e.g. DB2, WebSphere, Tivoli, Domino, etc.) seems almost blasphemous.

Compared to the development and innovation speed of other platforms like e.g. Linux, BSD or even Solaris 10 and its later versions, AIX appears to progress at a speed of tectonic motion. This is partly due to the previous point and the targeted enterprise market segment. Customers in this particular range want first and foremost RAS features, which on the other hand limit the amount of possible product innovation and renovation.

AIX users are mostly still typical IBM customers, in the sense that there's not much of a DIY mentality like the one found in the Linux or BSD user base. IBM customers are trained to play by the book and, if that doesn't work out, to call in for the support they pay their monthly support fee for. I regularly experience this when opening a PMR: I skip right through support levels 0 to 2, because I have already tried what they would have suggested, and end up in level 3 support (lab). What is a big annoyance to me shows, on the other hand, how the majority of the AIX user base – around which IBM has built its support infrastructure – seems to work.

IBM is still “lawyer town USA”, meaning all the previous efforts (e.g. AIX Toolbox for Linux Applications) to make AIX more OSS-friendly and build at least a modest OSS infrastructure for AIX have been choked to death by the concerns and fear of legal, licensing and liability issues.

Despite all this, Michael Perzl has done a really great job in maintaining and expanding his AIX Open Source Packages which build upon and aim to replace the abandoned AIX Toolbox for Linux Applications. I would very much like to see IBM sponsoring his effort and/or even turning this into a fully fledged community driven project!

To support this effort and to promote AIX in the OSS community, I'll be posting about my own RPMs that are currently missing from Michael Perzl's collection, about patches and bug reports submitted to the upstream projects, and about general information and ideas around the OSS on AIX subject.